User Manual¶

Introduction¶

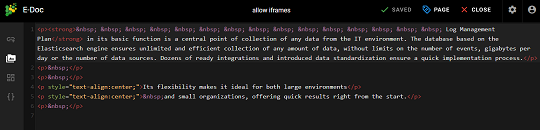

ITRS Log Analytics is an innovation solution allowing for centralized IT systems events. It allows for an immediate review, analysis, and reporting of system logs - the amount of data does not matter. ITRS Log Analytics is a response to the huge demand for the storage and analysis of large amounts of data from IT systems.ITRS Log Analytics is an innovation solution that responds to the need to effectively process large amounts of data coming from the IT environments of today’s organizations. Based on the open-source project Elasticsearch valued on the market, we have created an efficient solution with powerful data storage and searching capabilities. The System has been enriched with functionality that ensures the security of stored information, verification of users, data correlation and visualization, alerting, and reporting.

ITRS Log Analytics project was created to centralize events of all IT areas in the organization. We focused on creating a tool that functionality is most expected by IT departments. Because an effective licensing model has been applied, the solution can be implemented in the scope expected by the customer even with a very large volume of data. At the same time, the innovation architecture allows for servicing a large portion of data, which cannot be dedicated to solutions with limited scalability.

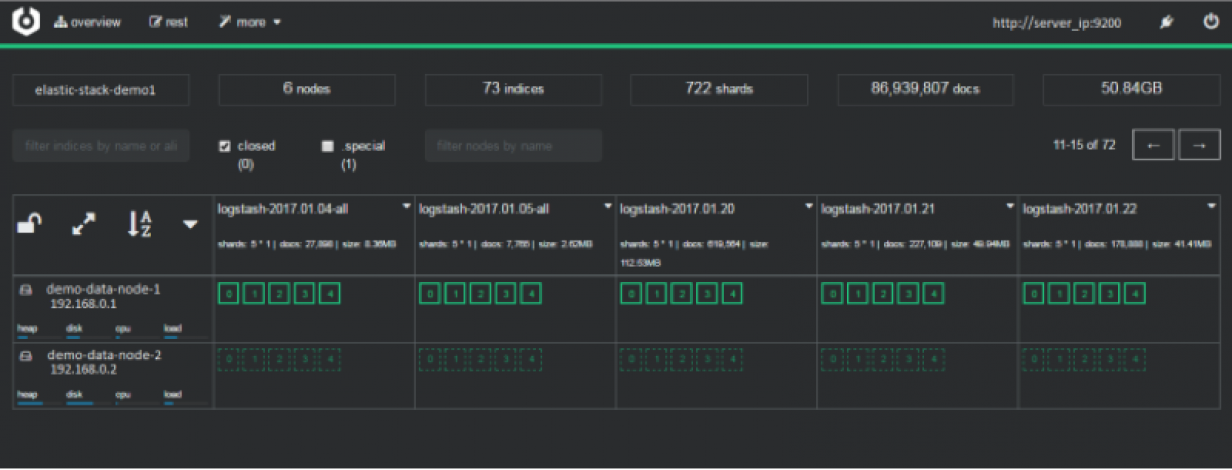

Elasticsearch¶

Elasticsearch is a NoSQL database solution that is the heart of our system. Text information sent to the system, application, and system logs are processed by Logstash filters and directed to Elasticsearch. This storage environment creates, based on the received data, their respective layout in a binary form, called a data index. The Index is kept on Elasticsearch nodes, implementing the appropriate assumptions from the configuration, such as:

- Replication index between nodes,

- Distribution index between nodes.

The Elasticsearch environment consists of nodes:

- Data node - responsible for storing documents in indexes,

- Master node - responsible for the supervision of nodes,

- Client node - responsible for cooperation with the client.

Data, Master, and Client elements are found even in the smallest Elasticsearch installations, therefore often the environment is referred to as a cluster, regardless of the number of nodes configured. Within the cluster, Elasticsearch decides which data portions are held on a specific node.

Index layout, their name, and set of fields are arbitrary and depend on the form of system usage. It is common practice to put data of a similar nature to the same type of index that has a permanent first part of the name. The second part of the name often remains the date the index was created, which in practice means that the new index is created every day. This practice, however, is conventional and every index can have its rotation convention, name convention, construction scheme, and its own set of other features. As a result of passing the document through the

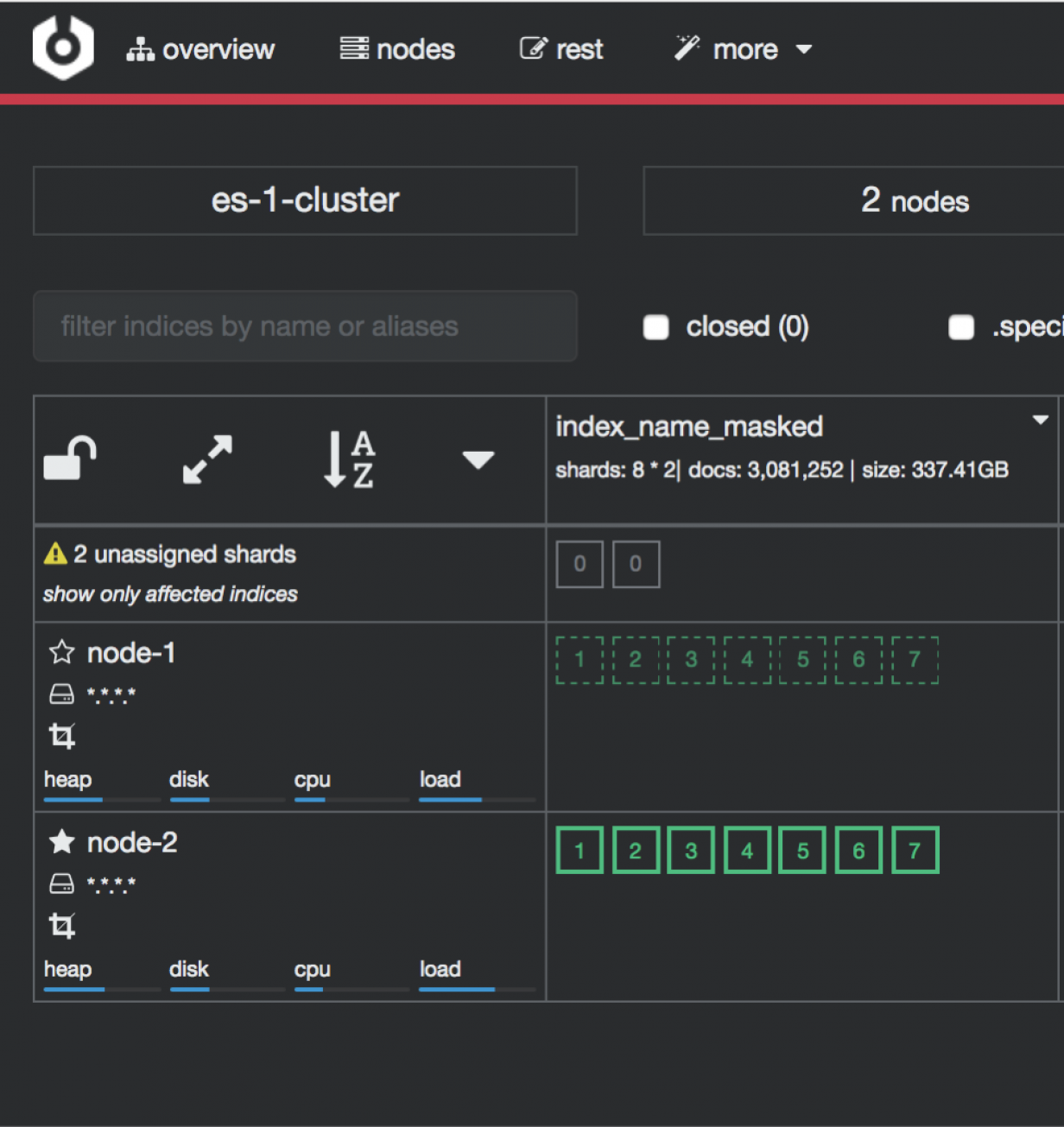

The Indexes are built with elementary parts called shards. It is good practice to create Indexes with the number of shards that is the multiple of the Elasticsearch data nodes number. Elasticsearch in the 7.x version has a new feature called Sequence IDs that guarantees more successful and efficient shard recovery. \

Elasticsearch uses mapping to describe the fields or properties that documents of that type may have. Elasticsearch in the 7.x version restricts indices to a single type.

Kibana¶

Kibana lets you visualize your Elasticsearch data and navigate the Elastic Stack. Kibana gives you the freedom to select the way you give shape to your data. And you don’t always have to know what you’re looking for. Kibana core ships with the classics: histograms, line graphs, pie charts, sunbursts, and more. Plus, you can use Vega grammar to design your visualizations. All leverage the full aggregation capabilities of Elasticsearch. Perform advanced time series analysis on your Elasticsearch data with our curated time series UIs. Describe queries, transformations, and visualizations with powerful, easy-to-learn expressions. Kibana 7.x has two new features - a new “Full-screen” mode for viewing dashboards, and a new “Dashboard-only” mode which enables administrators to share dashboards safely.

Logstash¶

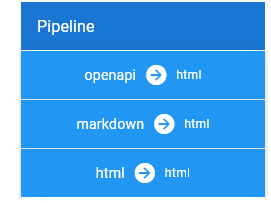

Logstash is an open-source data collection engine with real-time pipelining capabilities. Logstash can dynamically unify data from disparate sources and normalize the data into destinations of your choice. Cleanse and democratize all your data for diverse advanced downstream analytics and visualization use cases.

While Logstash originally drove innovation in log collection, its capabilities extend well beyond that use case. Any type of event can be enriched and transformed with a broad array of input, filter, and output plugins, with many native codecs further simplifying the ingestion process. Logstash accelerates your insights by harnessing a greater volume and variety of data.

Logstash 7.x version supports native support for multiple pipelines. These pipelines are defined in a pipelines.yml file which is loaded by default. Users will be able to manage multiple pipelines within Kibana. This solution uses Elasticsearch to store pipeline configurations and allows for on-the-fly reconfiguration of Logstash pipelines.

ELK¶

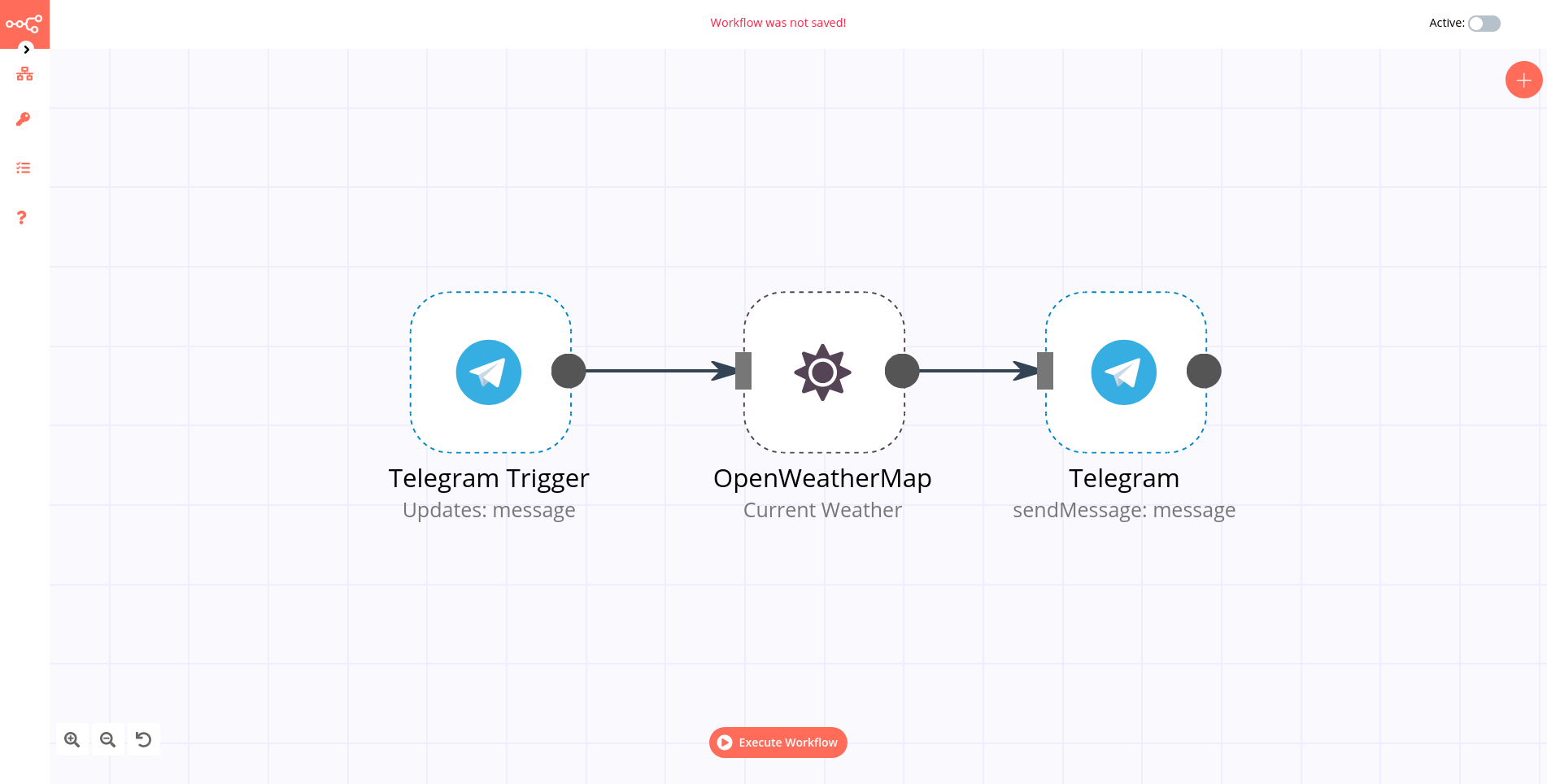

“ELK” is the acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and analytics engine. Logstash is a server‑side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a “stash” like Elasticsearch. Kibana lets users visualize data with charts and graphs in Elasticsearch. The Elastic Stack is the next evolution of the ELK Stack.

Data source¶

Where does the data come from?

ITRS Log Analytics is a solution allowing effective data processing from the IT environment that exists in the organization.

The Elsasticsearch engine allows the building database in which large amounts of data are stored in ordered indexes. The Logstash module is responsible for loading data into Indexes, whose function is to collect data on specific TCP/UDP ports, filter them, normalize them, and place them in the appropriate index. Additional plugins, that we can use in Logstash reinforce the work of the module and increase its efficiency, enabling the module to quickly interpret data and parse it.

Below is an example of several of the many available Logstash plugins:

exec - receive an output of the shell function as an event;

imap - read email from IMAP servers;

jdbc - create events based on JDC data;

jms - create events from Jms broker;

Both Elasticsearch and Logstash are free Open-Source solutions.

More information about the Elasticsearch module can be found at: https://github.com/elastic/elasticsearch

List of available Logstash plugins: https://github.com/elastic/logstash-docs/tree/master/docs/plugins

System services¶

For proper operation, ITRS Log Analytics requires starting the following system services:

- elasticsearch.service - we can run it with a command:

systemctl start elasticsearch.service

we can check its status with a command:

systemctl status elasticsearch.service

- kibana.service - we can run it with a command:

systemctl start kibana.service

we can check its status with a command:

systemctl status kibana.service

- logstash.service - we can run it with a command:

systemctl start logstash.service

we can check its status with a command:

systemctl status logstash.service

First login¶

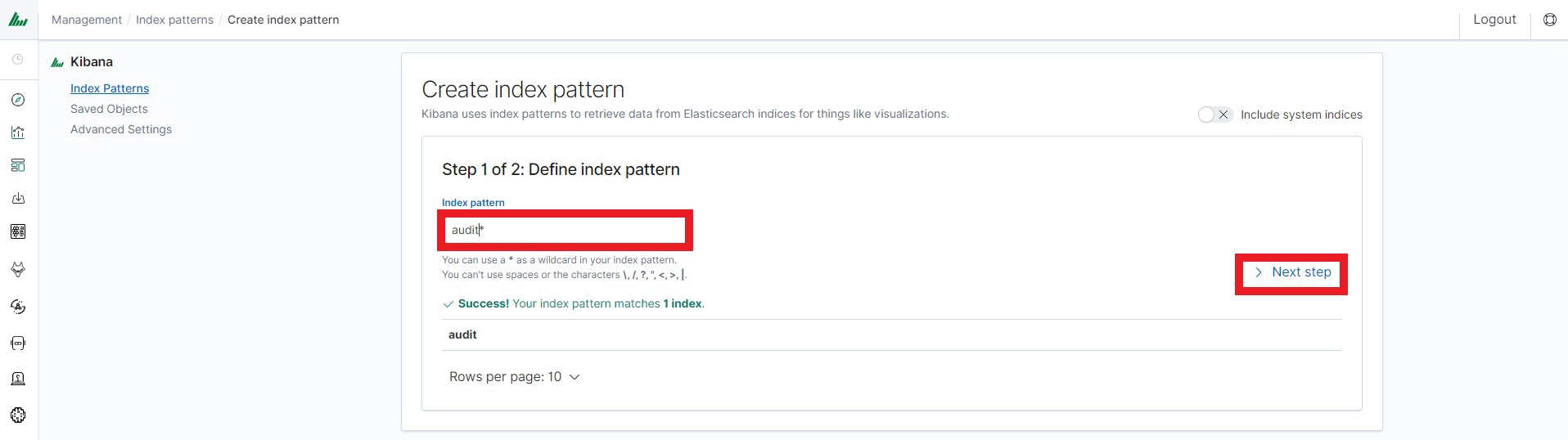

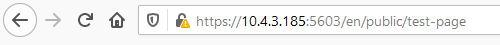

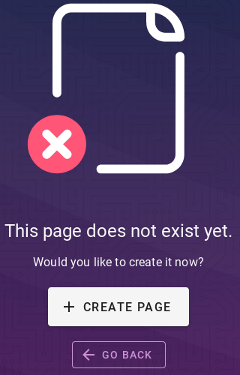

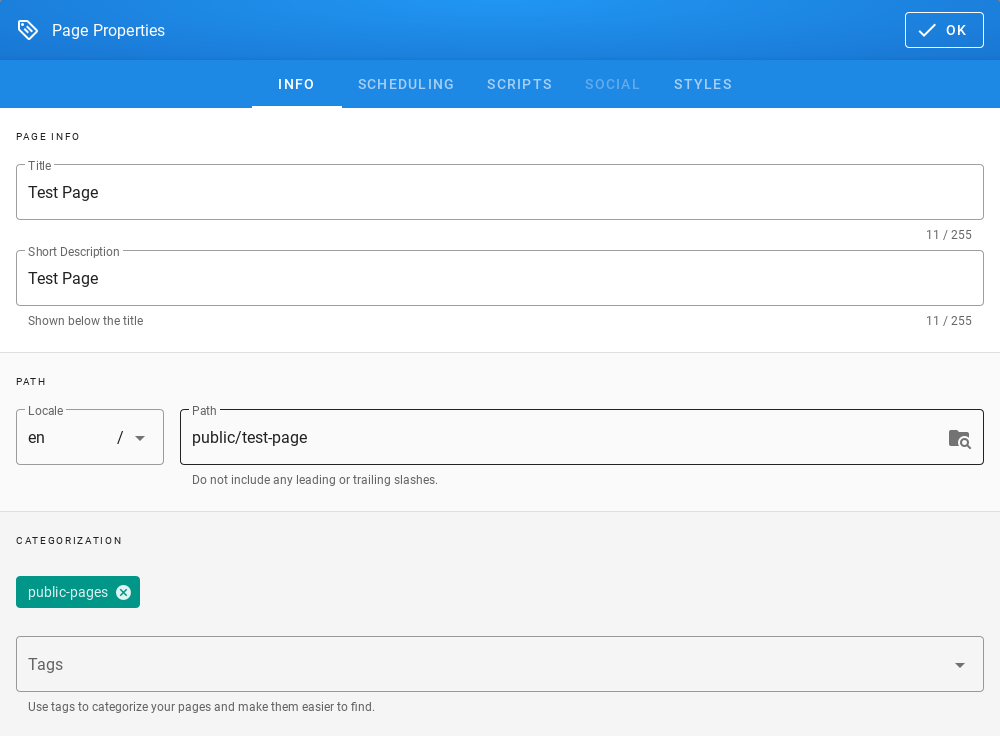

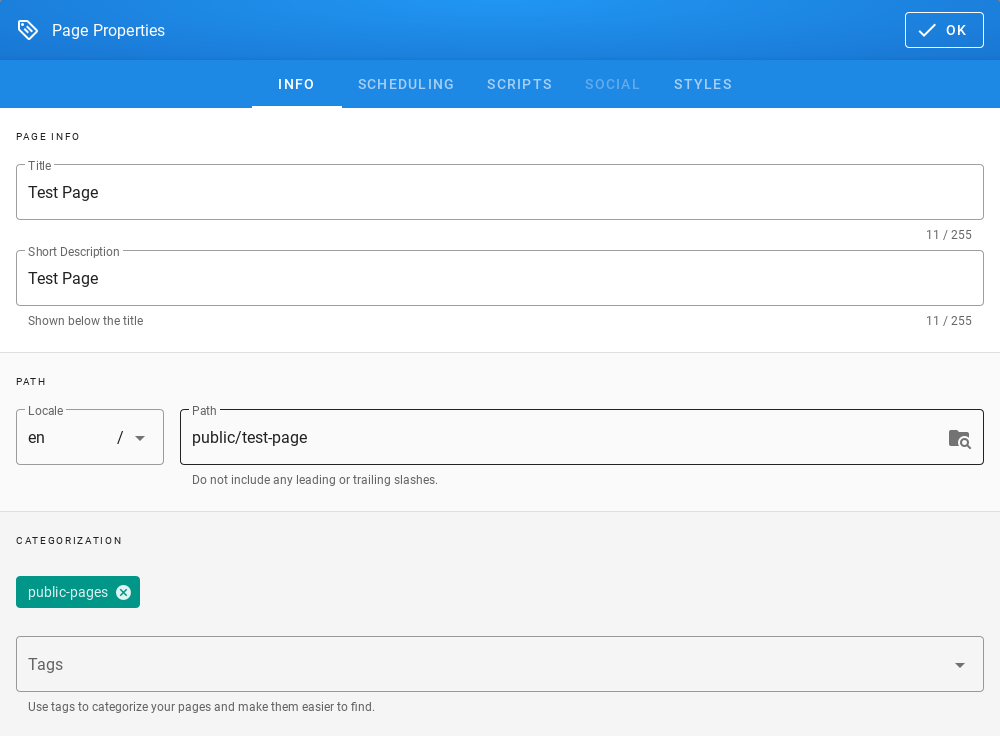

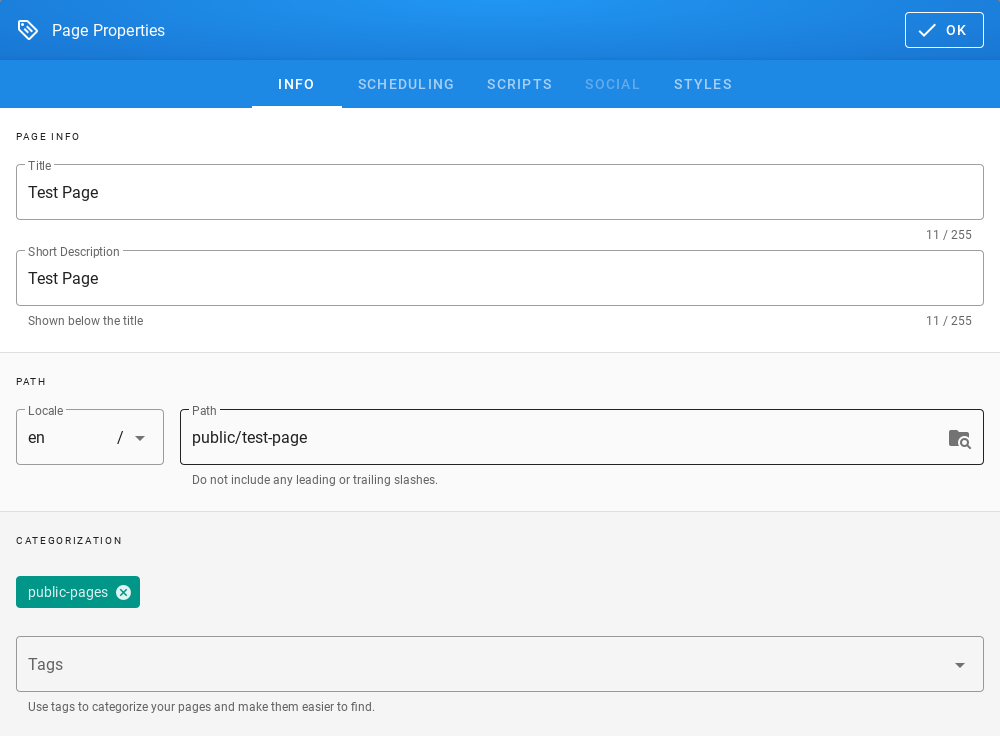

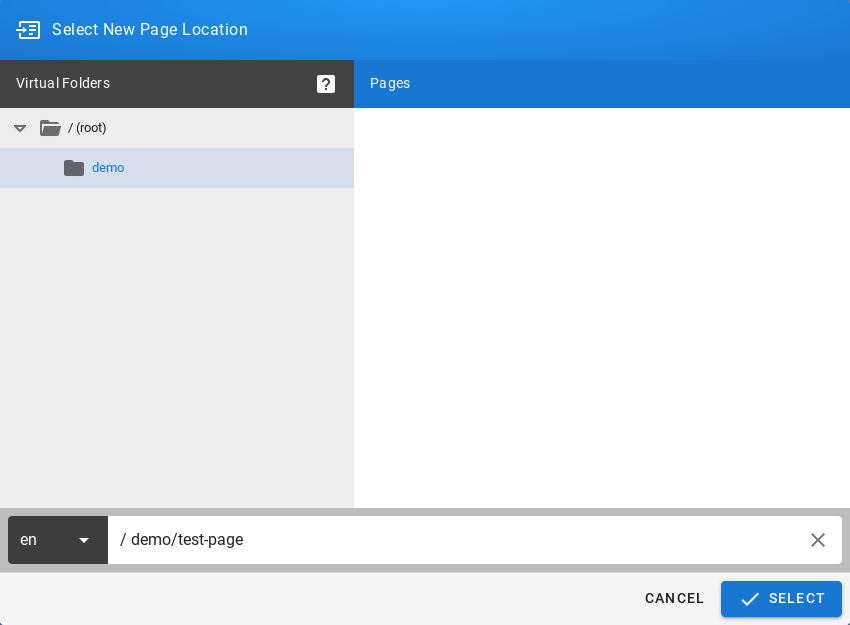

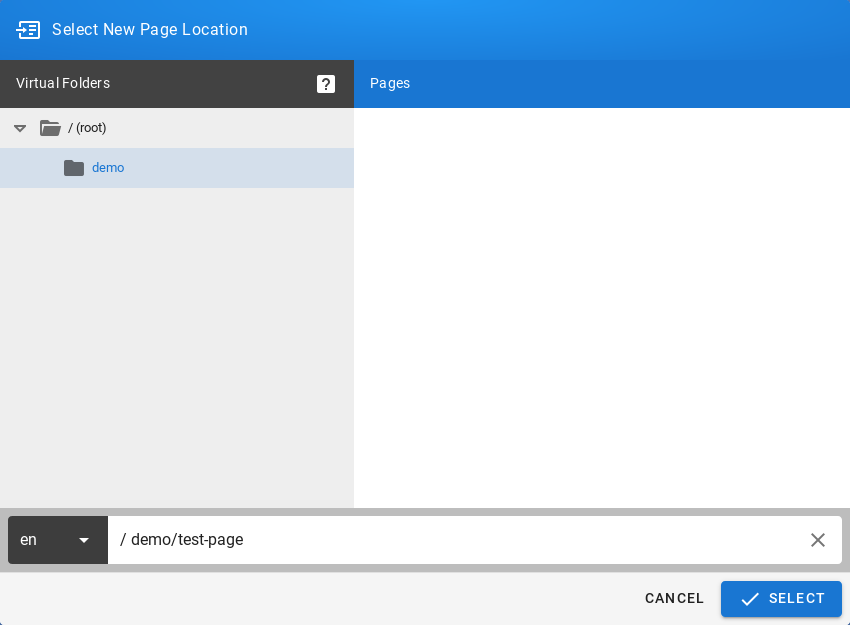

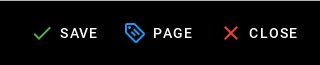

If you log in to ITRS Log Analytics for the first time, you must specify the Index to be searched. We have the option of entering the name of your index, indicating a specific index from a given day, or using the asterisk () to indicate all of them matching a specific index pattern. Therefore, to start working with the ITRS Log Analytics application, we log in to it (by default the user: logserver/password:logserver).

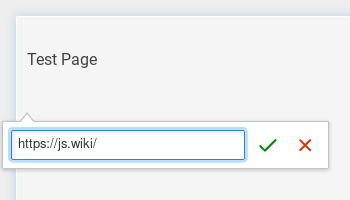

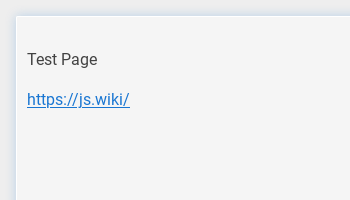

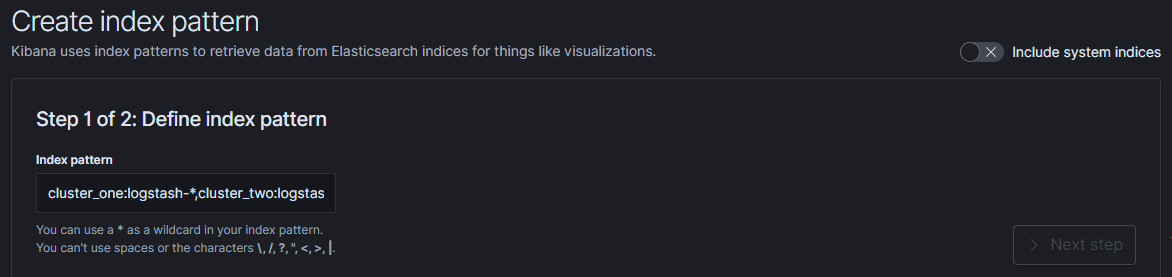

After logging in to the application click the button “Set up index pattern” to add a new index pattern in Kibana:

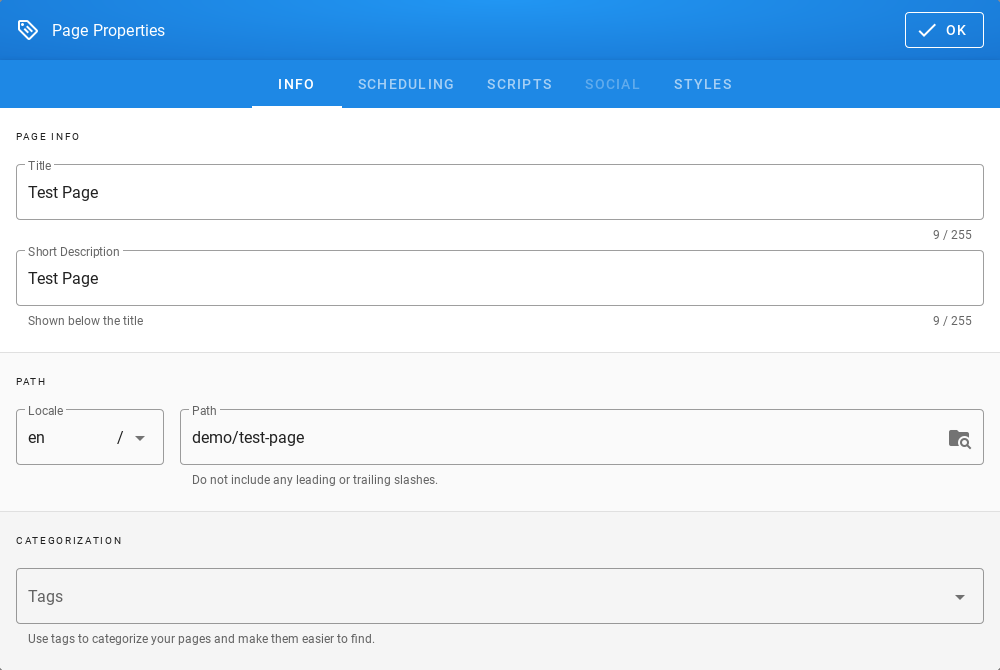

In the “Index pattern” field enter the name of the index or index pattern (after confirming that the index or sets of indexes exist) and click the “Next step” button.

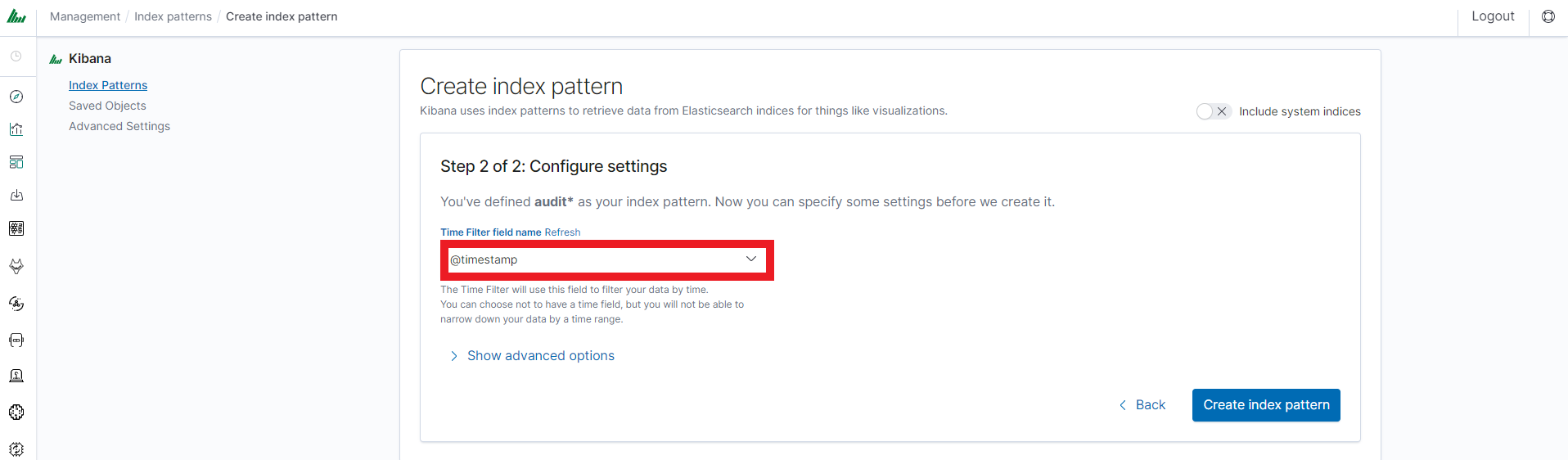

In the next step, from a drop-down menu select the “Time filter field name”, after which individual event (events) should be sorted. By default the timestamp is set, which is the time of occurrence of the event, but depending on the preferences. It may also be the time of the indexing or other selected based on the fields indicated on the event.

At any time, you can add more indexes or index patterns by going to the main tab selecting „Management” and next selecting „Index Patterns”.

Index selection¶

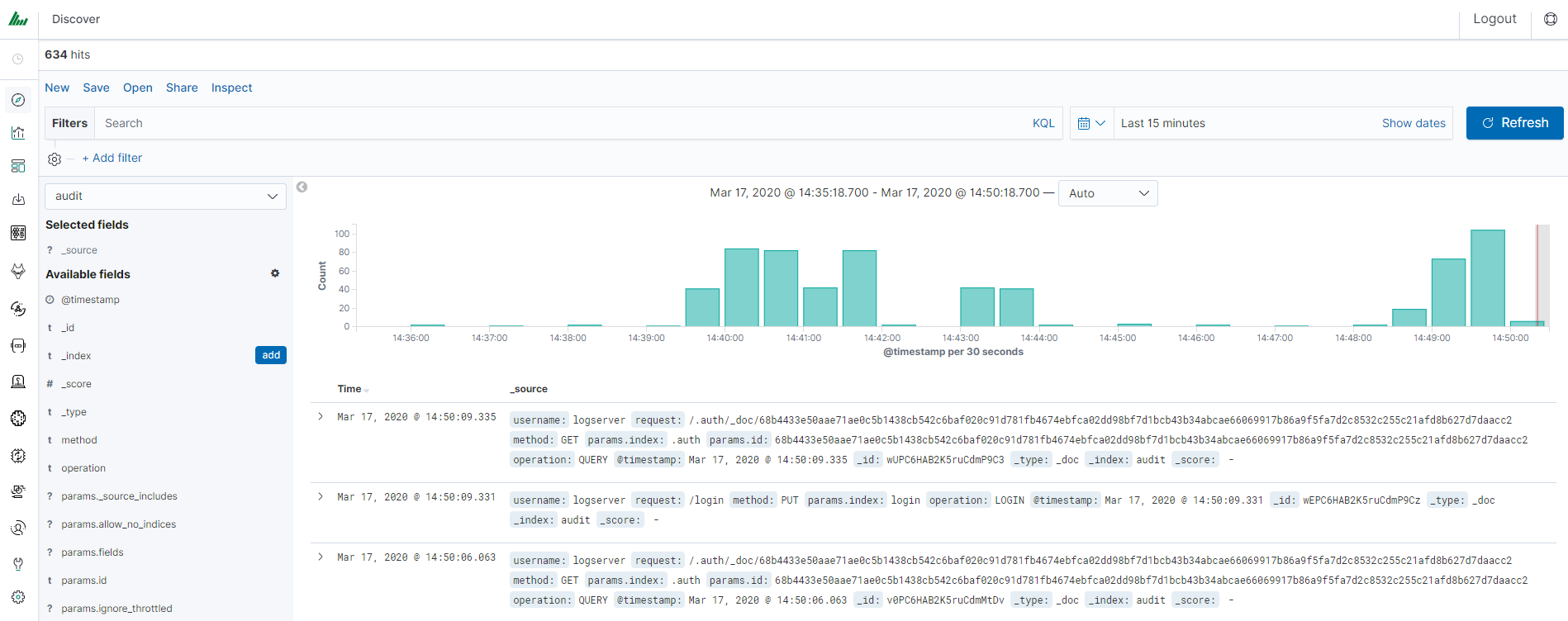

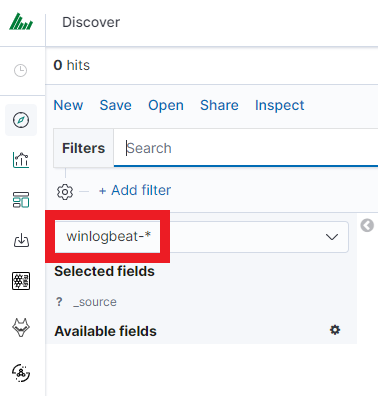

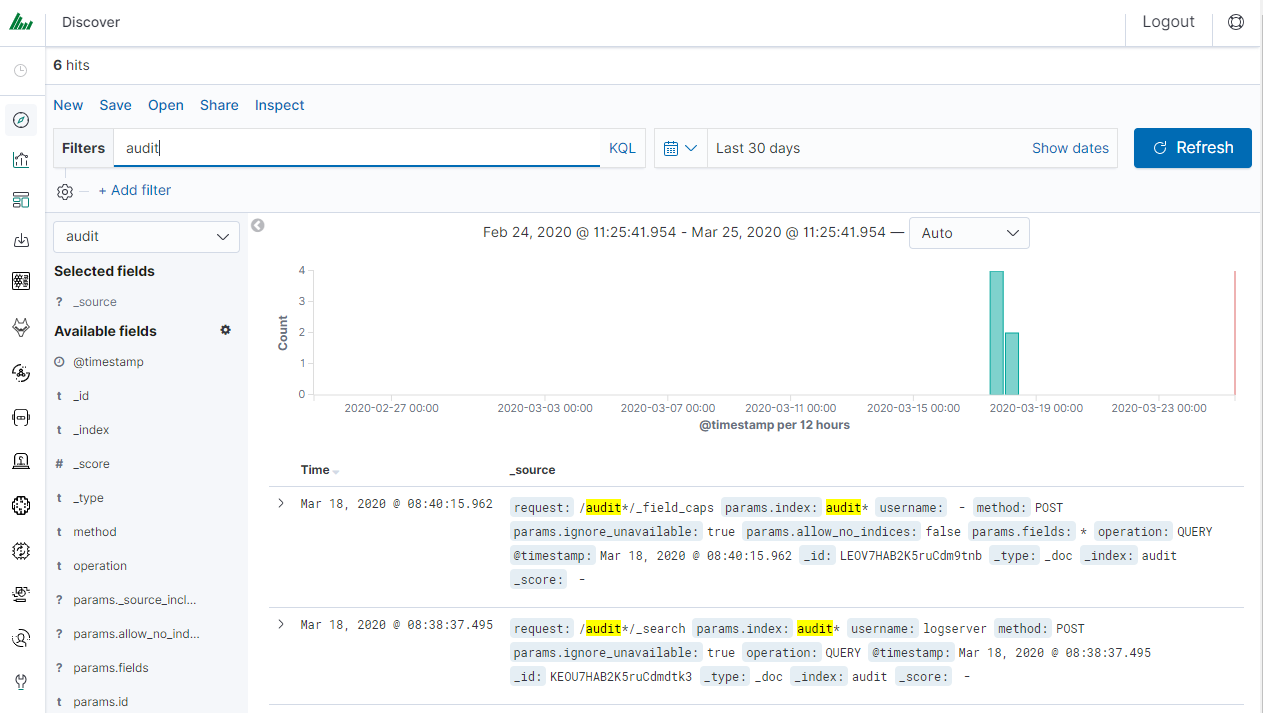

After logging into ITRS Log Analytics, you will be going to the „Discover” tab, where you can interactively explore your data. You have access to every document in every index that matches the selected index patterns.

If you want to change the selected index, drop-down menu with the name of the current object in the left panel. Clicking on the object from the expanded list of previously created index patterns will change the searched index.

Discovery¶

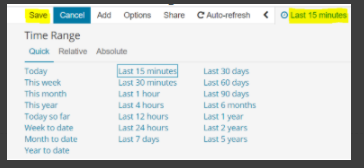

Time settings and refresh¶

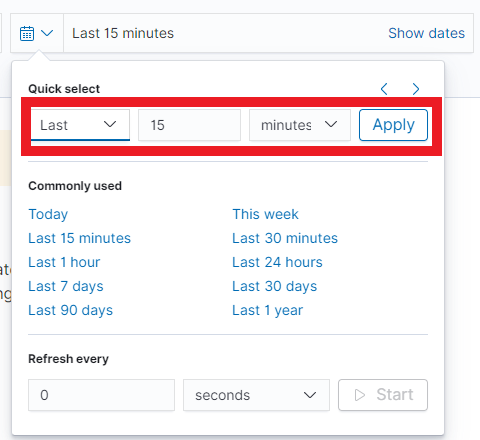

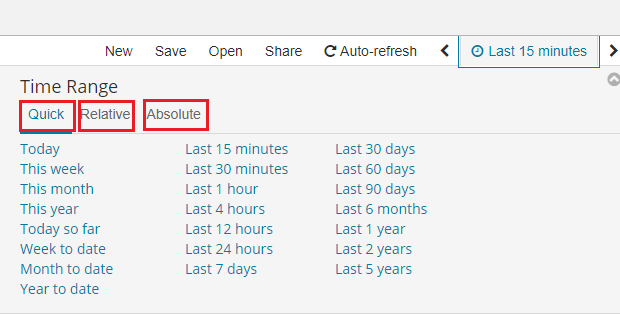

In the upper right corner, there is a section that defines the range of time that ITRS Log Analytics will search in terms of conditions contained in the search bar. The default value is the last 15 minutes.

After clicking this selection, we can adjust the scope of the search by selecting one of the three tabs in the drop-down window:

- Quick: contains several predefined ranges that should be clicked.

- Relative: in this window specify the day from which ITRS Log Analytics should search for data.

- Absolute: using two calendars we define the time range for which the search results are to be returned.

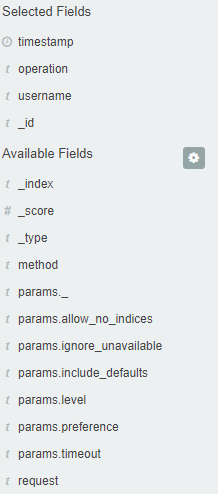

Fields¶

ITRS Log Analytics in the body of searched events, recognize fields

that can be used to create more precision queries. The extracted

fields are visible in the left panel. They are divided into three types:

timestamp, marked on the clock icon; text, marked with the letter “t” , and digital, marked with a hashtag

, and digital, marked with a hashtag .

.

Pointing to them and clicking on an icon , they are automatically transferred to the „Selected Fields” column and in the place of events, a table with selected columns is created regularly. In the “Selected Fields” selection you can also delete specific fields from the table by clicking

, they are automatically transferred to the „Selected Fields” column and in the place of events, a table with selected columns is created regularly. In the “Selected Fields” selection you can also delete specific fields from the table by clicking on the selected element.

on the selected element.

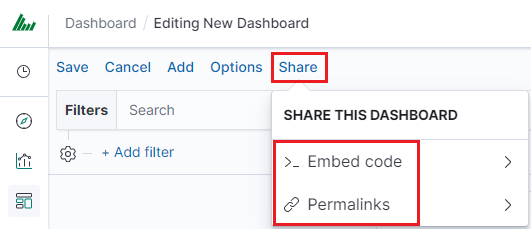

Filtering and syntax building¶

We use the query bar to search for interesting events. For example, after entering the word „error”, all events that contain the word will be displayed, additional highlighting them with a yellow background.

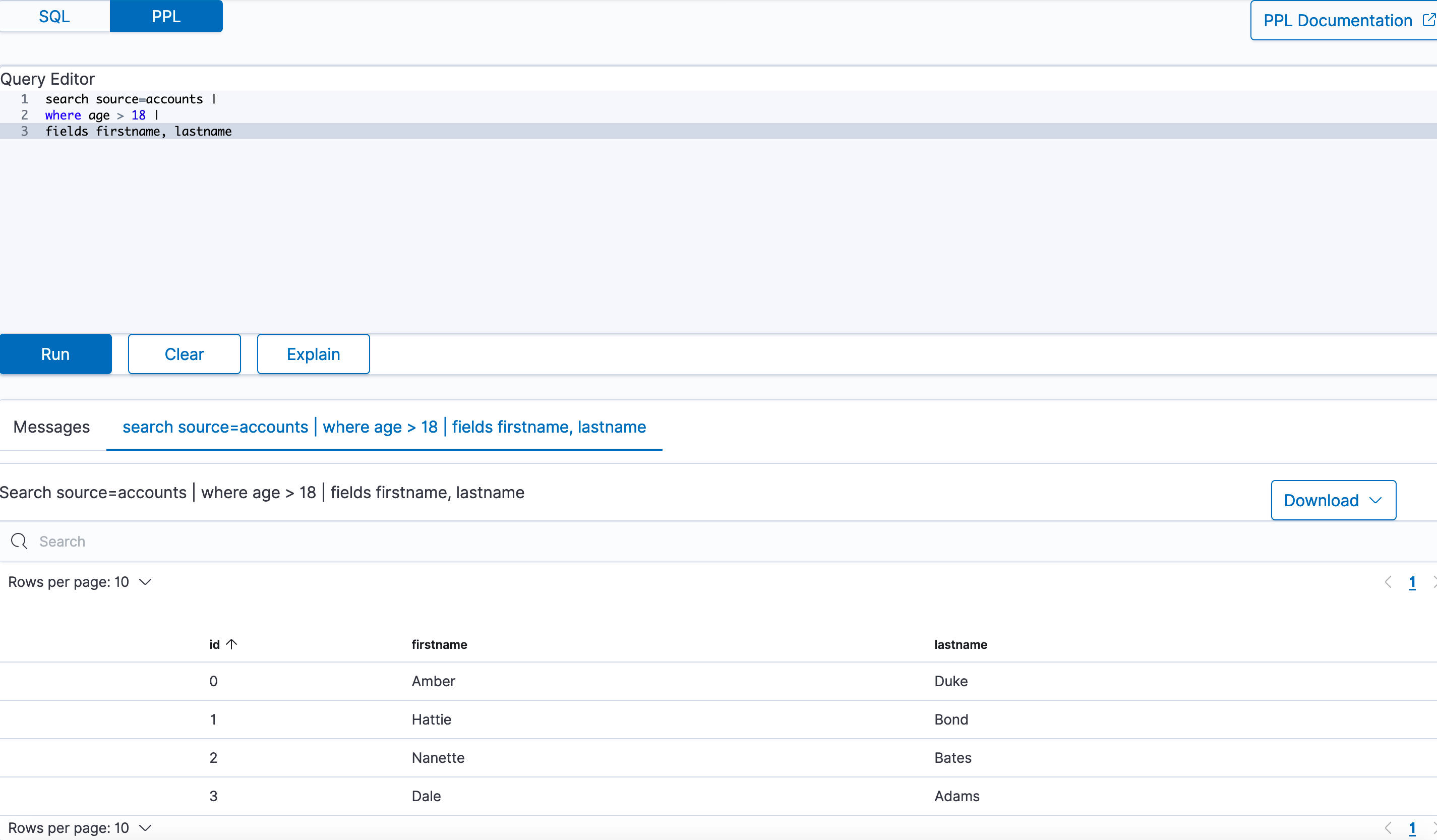

Syntax¶

Fields can be used similarly by defining conditions that interest us. The syntax of such queries is:

fields_name:<fields_value>

Example:

status:500

This query will display all events that contain the „status” fields with a value of 500.

Filters¶

The field value does not have to be a single, specific value. For digital fields we can specify a range in the following scheme:

fields_name:[<range_from TO <range_to]

Example:

status:[500 TO 599]

This query will return events with status fields that are in the range 500 to 599.

Operators¶

The search language used in ITRS Log Analytics allows to you use logical operators „AND”, „OR” and „NOT”, which are key and necessary to build more complex queries.

AND is used to combine expressions, e.g.

error AND "access denied". If an event contains only one expression or the worderroranddeniedbut not the word access, then it will not be displayed.OR is used to search for the events that contain one OR other expression, e.g.

status:500 OR denied. This query will display events that contain the word „denied” or a status field value of 500. ITRS Log Analytics uses this operator by default, so query„status:500" "denied"would return the same results.NOT is used to exclude the following expression e.g. „status:[500 TO 599] NOT status:505” will display all events that have a status field, and the value of the field is between 500 and 599 but will eliminate from the result events whose status field value is exactly 505.

The above methods can be combined by building even more complex queries. Understanding how they work and joining it, is the basis for effective searching and full use of ITRS Log Analytics.

Example of query built from connected logical operations:

status:[500 TO 599] AND („access denied" OR error) NOT status:505

Returns in the results all events for which the value of status fields are in the range of 500 to 599, simultaneously contain the word „access denied” or „error”, omitting those events for which the status field value is 505.

Wildcards¶

Wildcard searches can be run on individual terms, using ? to replace a single character, and * to replace zero or more characters:

qu?ck bro*

Be aware that wildcard queries can use an enormous amount of memory and perform very badly — just think how many terms need to be queried to match the query string “a* b* c*”.

Regular expressions¶

Regular expression patterns can be embedded in the query string by wrapping them in forward-slashes (”/”):

name:/joh?n(ath[oa]n)/

The supported regular expression syntax is explained in Regular expression syntax https://www.elastic.co/guide/en/elasticsearch/reference/7.10/regexp-syntax.html

Fuzziness¶

You can run fuzzy queries using the ~ operator:

quikc~ brwn~ foks~

For these queries, the query string is normalized. If present, only certain filters from the analyzer are applied. For a list of applicable filters, see Normalizers.

The query uses the Damerau-Levenshtein distance to find all terms with a maximum of two changes, where a change is the insertion, deletion, or substitution of a single character or transposition of two adjacent characters.

The default edit distance is 2, but an edit distance of 1 should be sufficient to catch 80% of all human misspellings. It can be specified as:

quikc~1

Proximity searches¶

While a phrase query (e.g. “john smith”) expects all of the terms in the same order, a proximity query allows the specified words to be further apart or in a different order. In the same way that fuzzy queries can specify a maximum edit distance for characters in a word, a proximity search allows us to specify a maximum edit distance of words in a phrase:

"fox quick"~5

The closer the text in a field is to the original order specified in the query string, the more relevant that document is considered to be. When compared to the above example query, the phrase “quick fox” would be considered more relevant than “quick brown fox”.

Ranges¶

Ranges can be specified for date, numeric, or string fields. Inclusive ranges are specified with square brackets [min TO max] and exclusive ranges with curly brackets {min TO max}.

All days in 2012:

date:[2012-01-01 TO 2012-12-31]Numbers 1..5

count:[1 TO 5]Tags between alpha and omega, excluding alpha and omega:

tag:{alpha TO omega}Numbers from 10 upwards

count:[10 TO *]Dates before 2012

date:{* TO 2012-01-01}

Curly and square brackets can be combined:

Numbers from 1 up to but not including 5

count:[1 TO 5}Ranges with one side unbounded can use the following syntax:

age:>10

age:>=10

age:<10

age:<=10

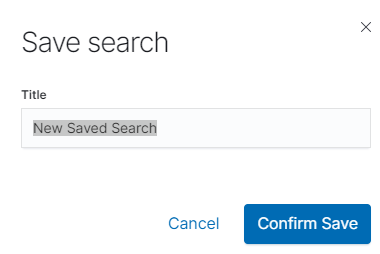

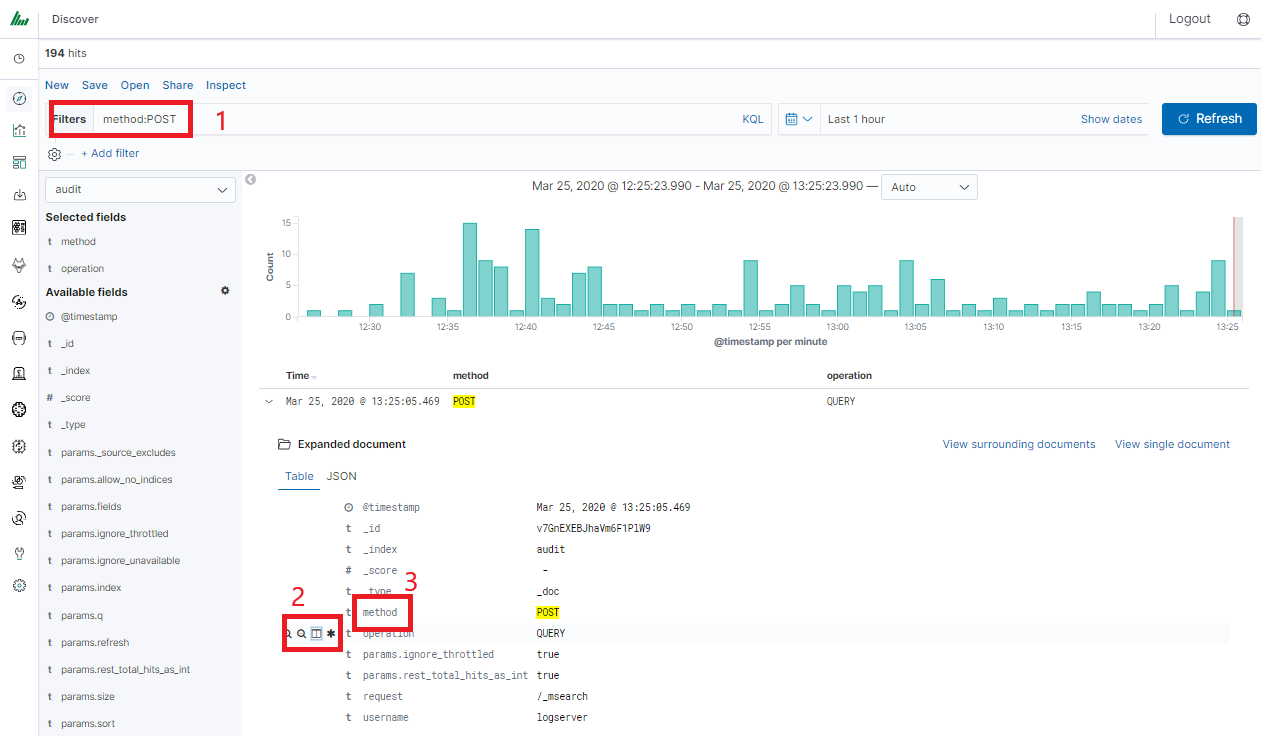

Saving and deleting queries¶

Saving queries enables you to reload and use them in the future.

Save query¶

To save the query, click on the “Save” button under the query bar:

This will bring up a window in which we give the query a name and then

click the button .

.

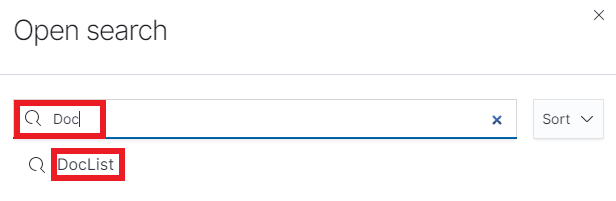

Saved queries can be opened by going to „Open” from the main menu at the top of the page, and selecting saved search from the search list:

Additionally, you can use “Saved Searchers Filter..” to filter the search list.

Open query¶

To open a saved query from the search list, you can click on the name of the query you are interested in.

After clicking on the icon on the name of the saved query and choosing “Edit Query DSL”, we will gain access to the advanced editing mode, so that we can change the query at a lower level.

on the name of the saved query and choosing “Edit Query DSL”, we will gain access to the advanced editing mode, so that we can change the query at a lower level.

It is a powerful tool designed for advanced users, designed to modify the query and the way it is presented by ITRS Log Analytics.

Delete query¶

To delete a saved query, open it from the search list, and

then click on the button .

.

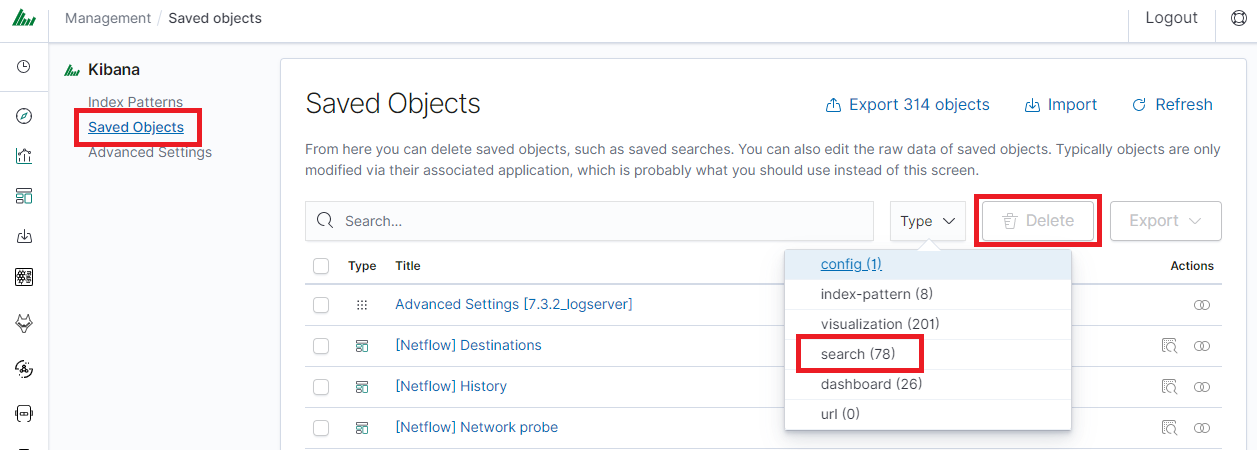

If you want to delete many saved queries simultaneously go to the “Management Object”

-> “Saved Object” -> “Searches” select it in the list (the icon to the left of the query name), and then click the “Delete” button.

to the left of the query name), and then click the “Delete” button.

From this level, you can also export saved queries in the same way. To

do this, you need to click on and choose the save location. The file will be saved in .json format. If you then want to import such a file to ITRS Log Analytics, click on a button

and choose the save location. The file will be saved in .json format. If you then want to import such a file to ITRS Log Analytics, click on a button , at the top of the page and select the desired file.

, at the top of the page and select the desired file.

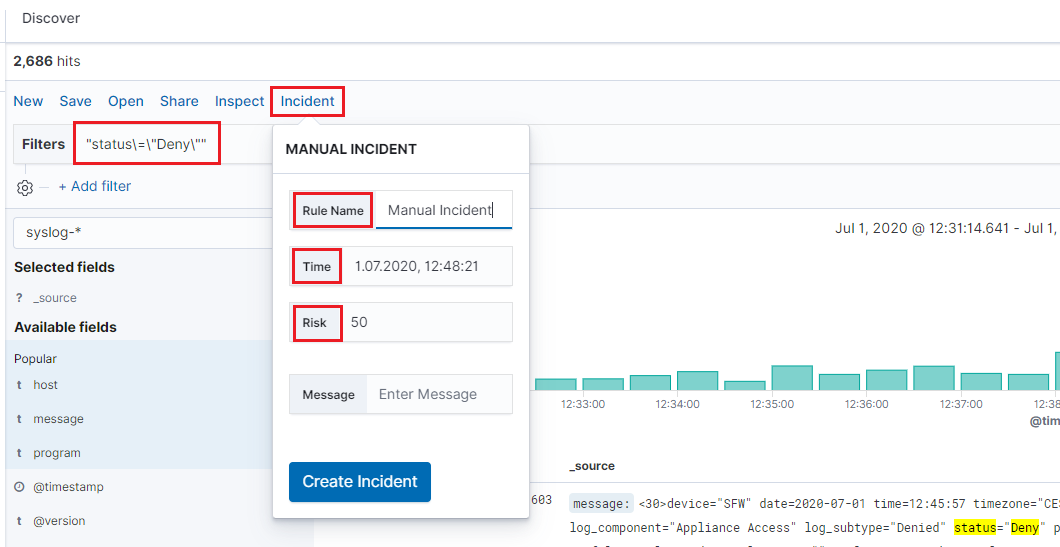

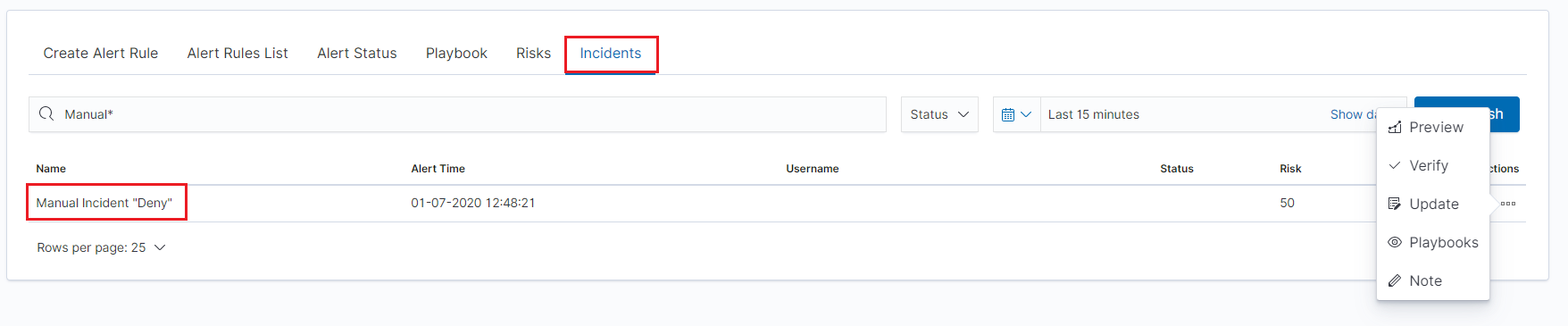

Manual incident¶

The Discovery module allows you to manually create incidents that are saved in the Incidents tab of the Alerts module. Manual incidents are based on search results or filtering.

For a manual incident, you can save the following parameters:

- Rule name

- Time

- Risk

- Message

After saving the manual incident, you can go to the Incident tab in the Alert module to perform the incident handling procedure.

Change the default width of columns¶

To improve the readability of values in Discovery columns, you can set a minimum column width. The column width setting is in the CSS style files:

/usr/share/kibana/built_assets/css/plugins/kibana/index.dark.css

/usr/share/kibana/built_assets/css/plugins/kibana/index.light.css

To set the minimum width for the columns, e.g. 150px, add the following entry min-width: 150px in the CSS style files:

.kbnDocTableCell__dataField {

min-width: 150px;

white-space: pre-wrap; }

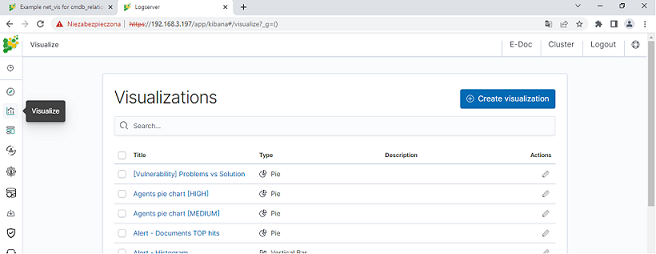

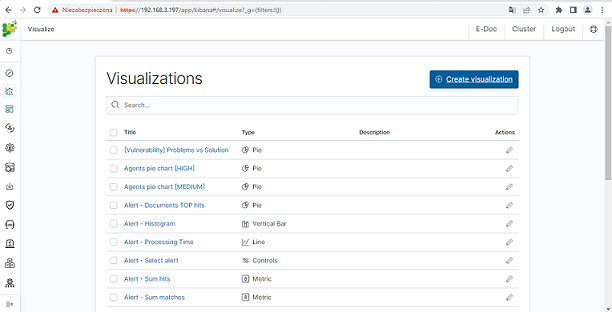

Visualizations¶

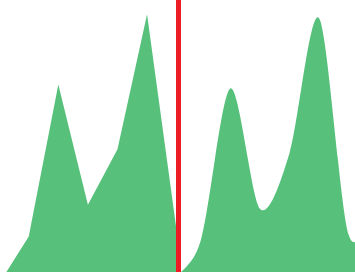

Visualize enables you to create visualizations of the data in your ITRS Log Analytics indices. You can then build dashboards that display related visualizations. Visualizations are based on ITRS Log Analytics queries. By using a series of ITRS Log Analytics aggregations to extract and process your data, you can create charts that show you the trends, spikes, and dips.

Creating visualization¶

Create¶

To create a visualization, go to the „Visualize” tab from the main menu. A new page will appear where you can create or load visualization.

Load¶

To load previously created and saved visualization, you must select it from the list.

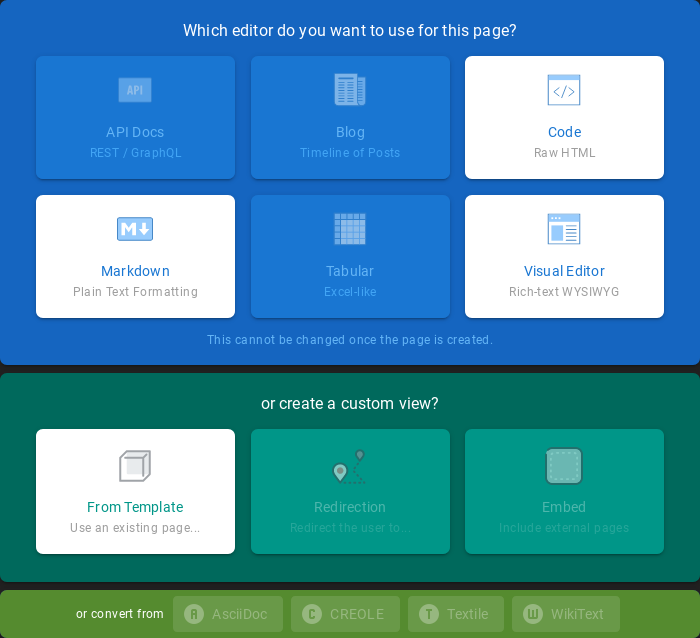

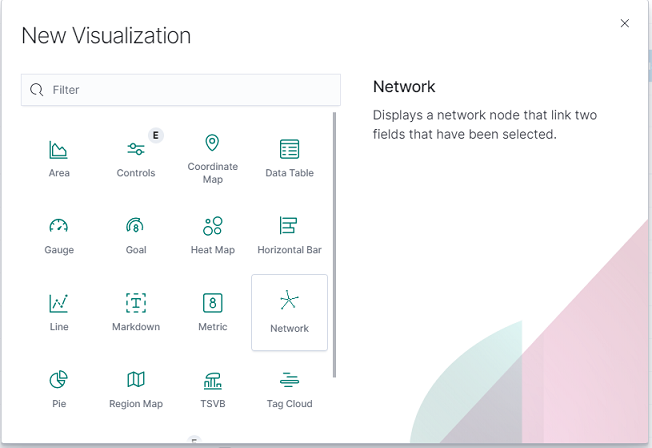

To create a new visualization, you should choose the preferred method of data presentation.

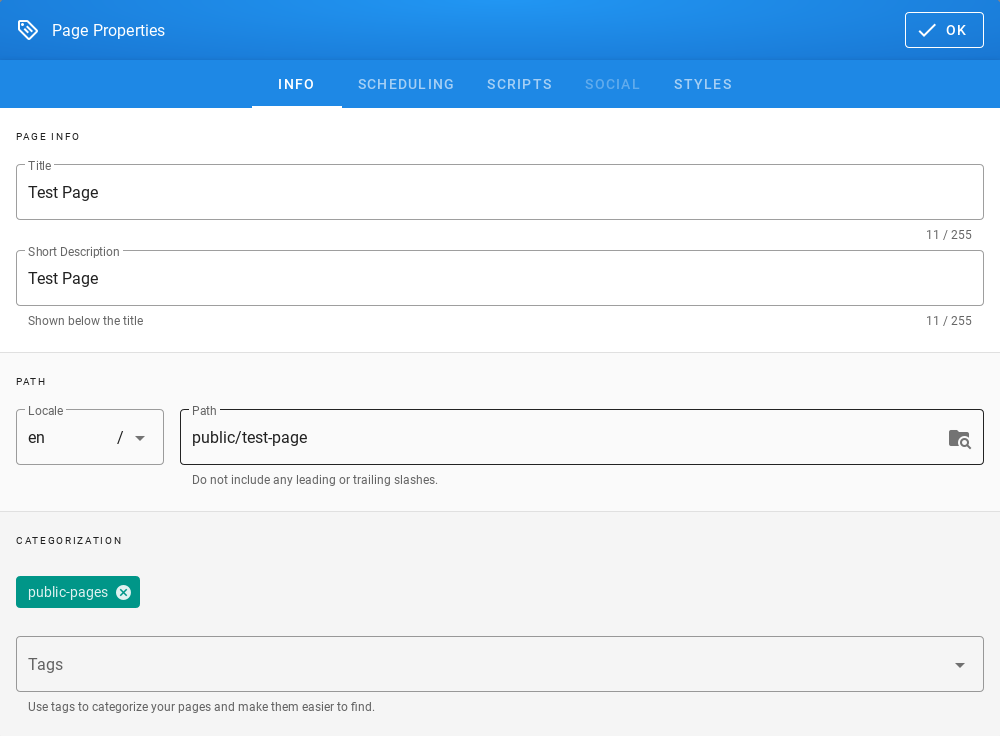

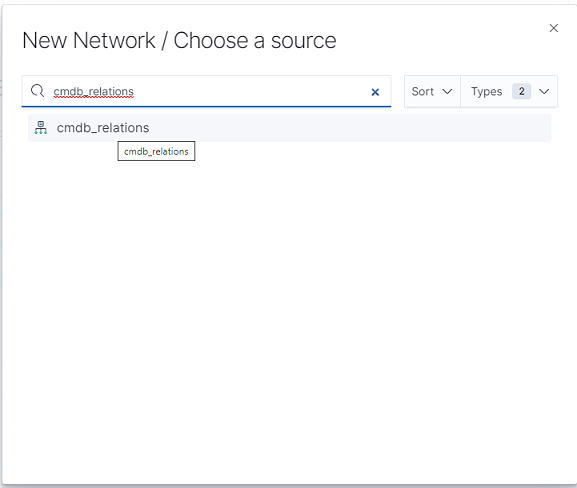

Next, specify whether the created visualization will be based on a new or previously saved query. If on a new one, select the index whose visualization should concern. If visualization is created from a saved query, you just need to select the appropriate query from the list, or (if there are many saved searches) search for them by name.

Visualization types¶

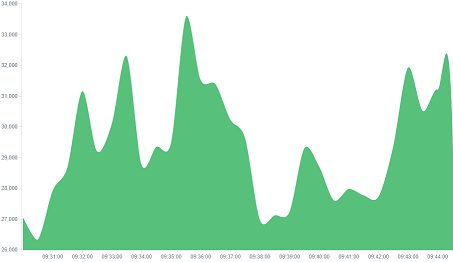

Before the data visualization will be created, first you have to choose the presentation method from an existing list. Currently, there are five groups of visualization types. Each of them serves different purposes. If you want to see only the current number of products sold, it is best to choose „Metric”, which presents one value.

However, if we would like to see user activity trends on pages at different hours and days, a better choice will be the „Area chart”, which displays a chart with time division.

The „Markdown widget” view is used to place text e.g. information about the dashboard, explanations, and instructions on how to navigate. Markdown language was used to format the text (the most popular use is GitHub). More information and instructions can be found at this link: https://help.github.com/categories/writing-on-github/

Edit visualization and saving¶

Editing¶

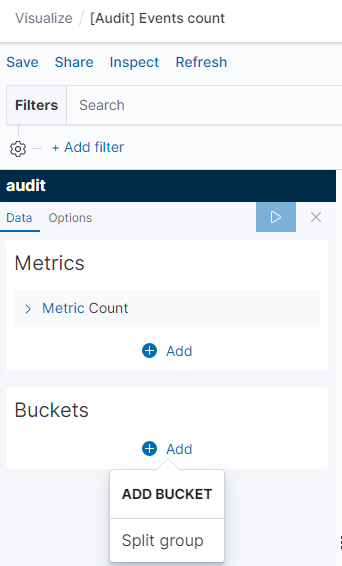

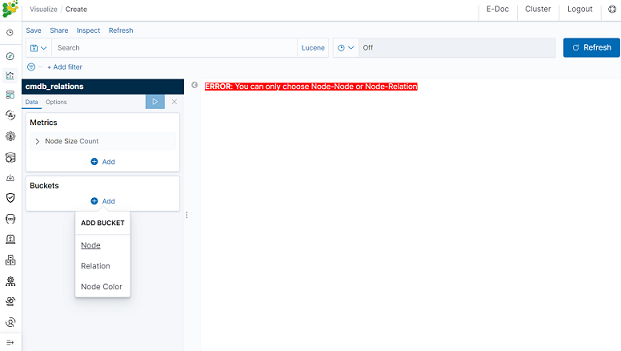

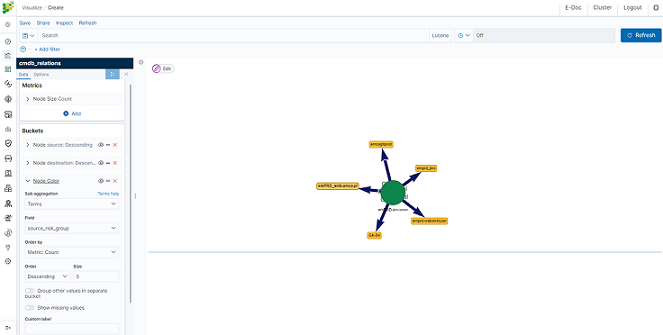

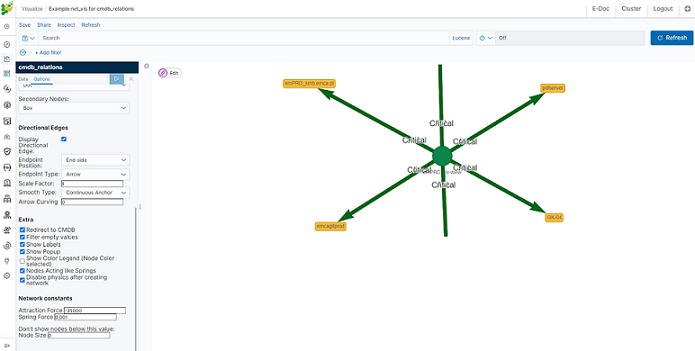

Editing a saved visualization enables you to directly modify the object definition. You can change the object title, add a description, and modify the JSON that defines the object properties. After selecting the index and the method of data presentation, you can enter the editing mode. This will open a new window with an empty visualization.

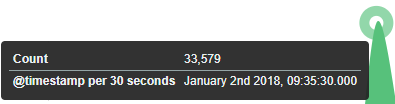

At the very top, there is a bar of queries that can be edited throughout the creation of the visualization. It works in the same way as in the “Discover” tab, which means searching the raw data, but instead of the data being displayed, the visualization will be edited. The following example will be based on the „Area chart”. The visualization modification panel on the left is divided into three tabs: „Data”, “Metric & Axes” and „Panel Settings”.

In the „Data” tab, you can modify the elements responsible for which data and how should be presented. In this tab, there are two sectors: “metrics”, in which we set what data should be displayed, and „buckets” in which we specify how they should be presented.

Select the Metrics & Axes tab to change the way each metric is shown on the chart. The data series are styled in the Metrics section, while the axes are styled in the X and Y axis sections.

In the „Panel Settings” tab, there are settings relating mainly to visual aesthetics. Each type of visualization has separate options.

To create the first graph in the char modification panel, in the „Data” tab we add X-Axis in the “buckets” sections. In „Aggregation” choose „Histogram”, in „Field” should automatically be located “timestamp” and “interval”: “Auto” (if not, this is how we set it). Click on the icon on the panel. Now our first graph should show up.

Some of the options for „Area Chart” are:

Smooth Lines - is used to smooth the graph line.

Current time marker – places a vertical line on the graph that determines the current time.

Set Y-Axis Extents – allows you to set minimum and maximum values for the Y axis, which increases the readability of the graphs. This is useful, if we know that the data will never be less than (the minimum value), or to indicate the goals of the company (maximum value).

Show Tooltip – option for displaying the information window under the mouse cursor, after pointing to the point on the graph.

Saving¶

To save the visualization, click on the “Save” button under the query bar:

give it a name and click the button

give it a name and click the button .

.

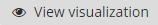

Load¶

To load the visualization, go to the “Management Object”

-> “Saved Object” -> “Visualizations” and select it from the list. From this place,

we can also go into advanced editing mode. To view the visualization

use button.

button.

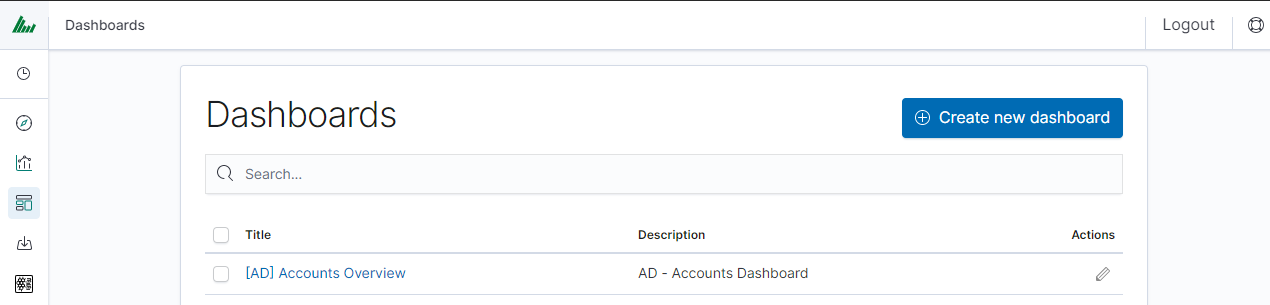

Dashboards¶

Dashboard is a collection of several visualizations or searches. Depending on how it is built and what visualization it contains, it can be designed for different teams e.g.:

- SOC - which is responsible for detecting failures or threats in the company;

- business - which thanks to the listings can determine the popularity of products and define the strategy of future sales and promotions;

- managers and directors - who may immediately have access to information about the performance units or branches.

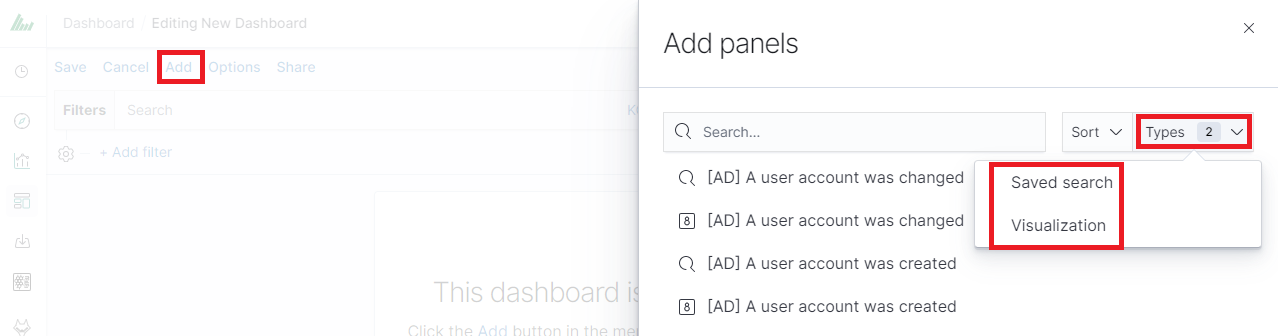

Create¶

To create a dashboard from previously saved visualizations and queries, go to the „Dashboard” tab in the main menu. When you open it, a new page will appear.

Clicking on the icon “Add” at the top of the page select the “Visualization” or “Saved Search” tab.

and selecting a saved query and/or visualization from the list will add them to the dashboard. If, there are a large number of saved objects, use the bar to search for them by name.

Elements of the dashboard can be enlarged arbitrarily (by clicking on the right bottom corner of the object and dragging the border) and moving (by clicking on the title bar of the object and moving it).

Saving¶

You may change the time period of your dashboard.

At the upper right-hand corner, you may choose the time range of your dashboard.

Click save and choose ‘Store time with dashboard’ if you are editing an existing dashboard. Otherwise, you may choose ‘Save as a new dashboard’ to create a new dashboard with the new time range.

To save a dashboard, click on the “Save” button at the up of the query bar and give it a name.

Load¶

To load the Dashboard, go to the “Management Object”

-> “Saved Object” -> “Dashboard” and select it from the list. From this place,

we can also go into advanced editing mode. To view the visualization

use button.

button.

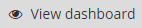

Sharing dashboards¶

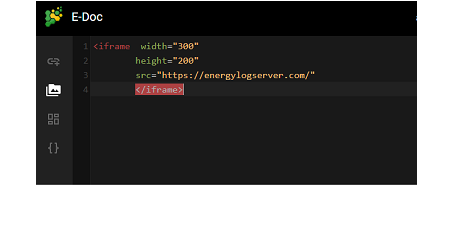

The dashboard can be shared with other ITRS Log Analytics users as well as on any page - by placing a snippet of code. Provided that it can retrieve information from ITRS Log Analytics.

To do this, create a new dashboard or open the saved dashboard and click on “Share” at the top of the page. A window will appear with the generated two URLs. The content of the first one “Embaded iframe” is used to provide the dashboard in the page code, and the second “Link” is a link that can be passed on to another user. There are two options for each, the first is to shorten the length of the link, and the second is to copy to the clipboard the content of the given bar.

Dashboard drill down¶

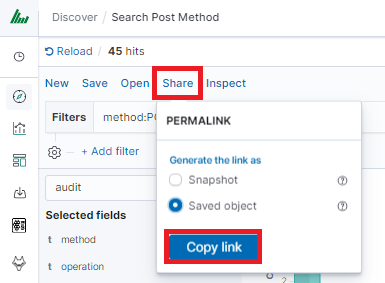

In the discovery tab search for a message of Your interest

Save Your search

Check Your „Shared link” and copy it

! ATTENTION ! Do not copy „?_g=()” at the end.

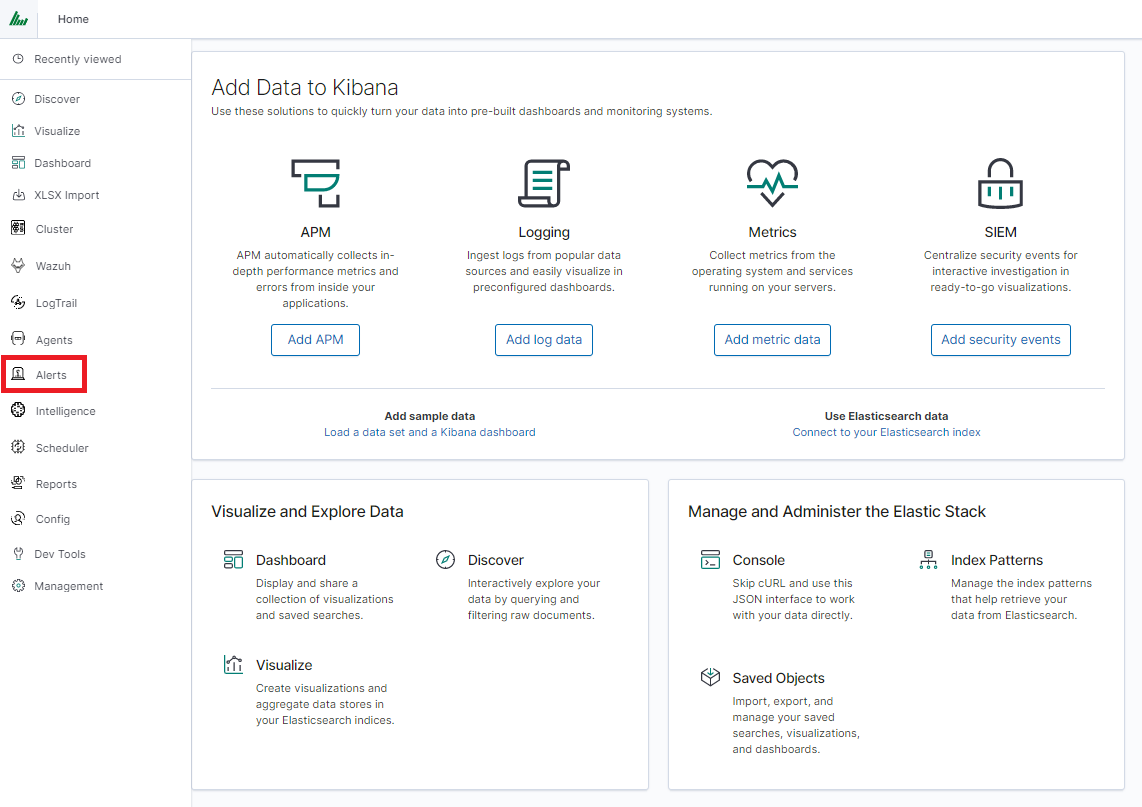

Select Alerting module

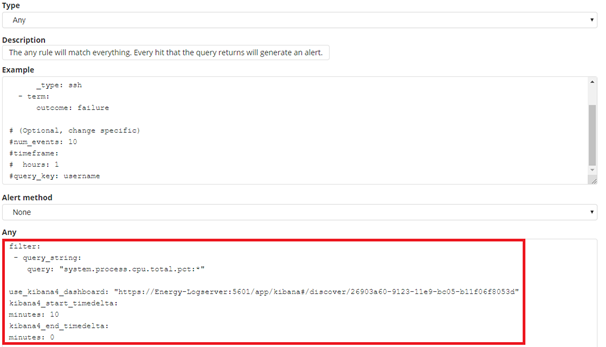

Once Alert is created use ANY frame to add the following directives:

Use_kibana4_dashboard: paste Your „shared link” here

use_kibana_dashboard: - The name of a Kibana dashboard to link to. Instead of generating a dashboard from a template, Alert can use an existing dashboard. It will set the time range on the dashboard to around the match time, upload it as a temporary dashboard, add a filter to the query_key of the alert if applicable, and put the URL to the dashboard in the alert. (Optional, string, no default).

Kibana4_start_timedelta

kibana4_start_timedelta: Defaults to 10 minutes. This option allows you to specify the start time for the generated kibana4 dashboard. This value is added in front of the event. For example,

kibana4_start_timedelta: minutes: 2

Kibana4_end_timedelta

kibana4_end_timedelta: Defaults to 10 minutes. This option allows you to specify the end time for the generated kibana4 dashboard. This value is added to the back of the event. For example,

kibana4_end_timedelta: minutes: 2

Sample:

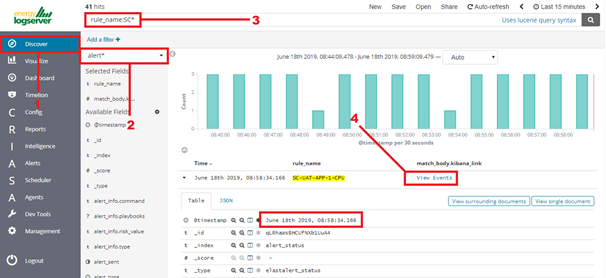

Search for triggered alerts in the Discovery tab.

Use alert* search pattern.

Refresh the alert that should contain url for the dashboard. Once available, the kibana_dashboard field can be exposed to dashboards giving You a real drill-down feature.

Reports¶

CSV Report¶

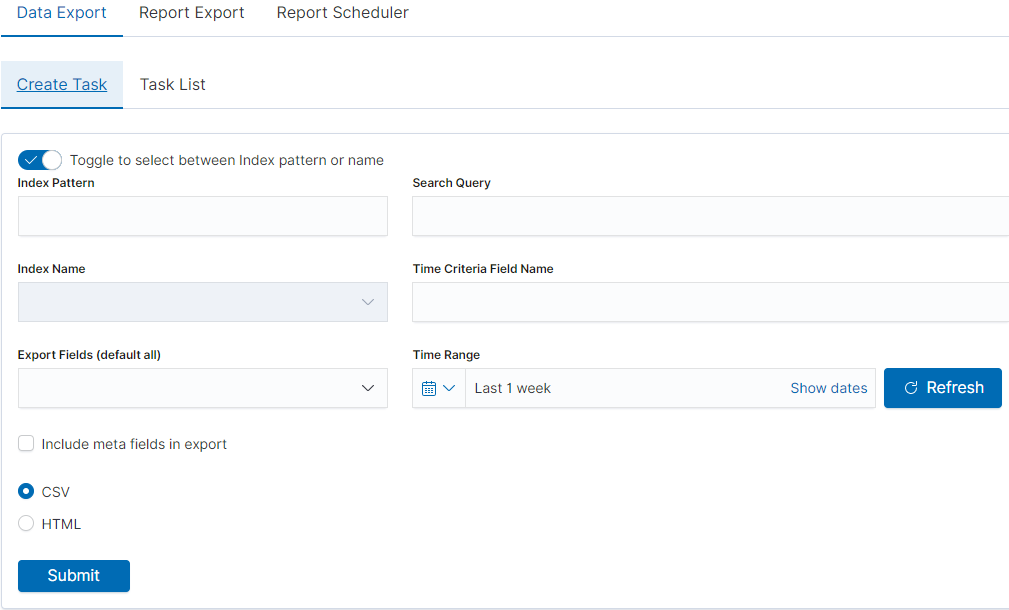

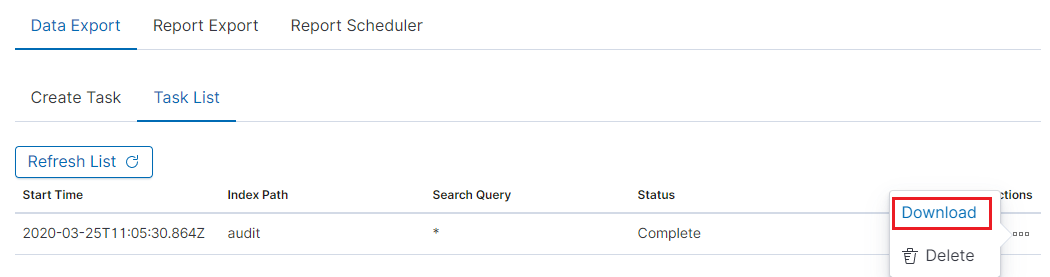

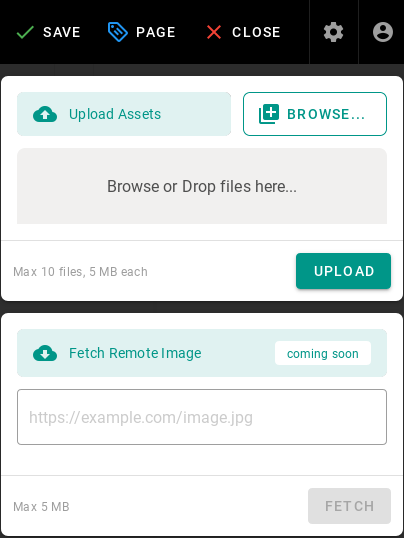

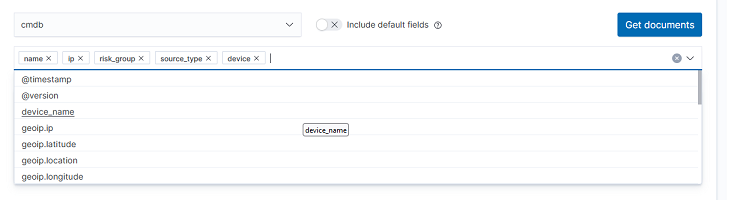

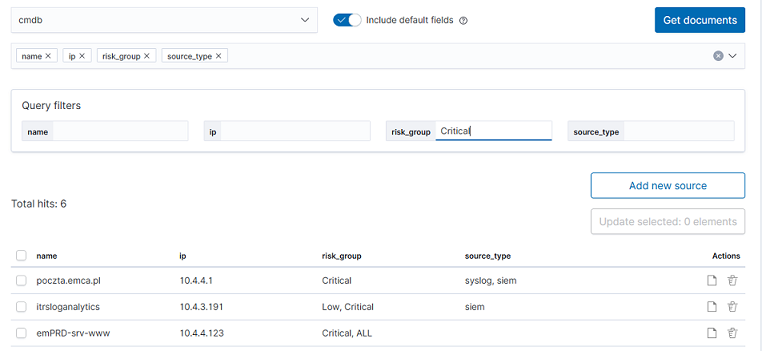

To export data to CSV Report click the Reports icon, you immediately go to the first tab - Export Data

In this tab, we have the opportunity to specify the source from which

we want to export. It can be an index pattern. After selecting it,

we confirm the selection with the Submit button, and a report is

created at the moment. The symbol

can refresh the list of reports and see

what its status is.

can refresh the list of reports and see

what its status is.

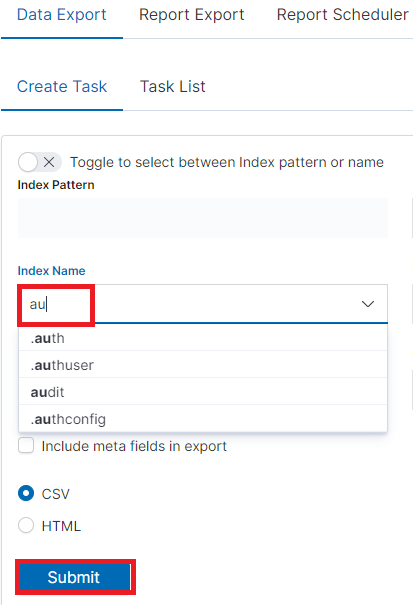

We can also create a report by pointing to a specific index from the drop-down list of indexes.

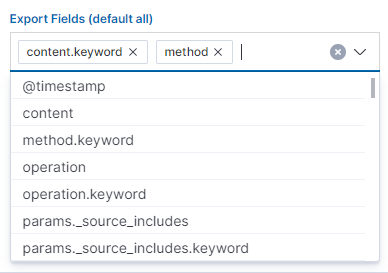

We can also check which fields are to be included in the report. The selection is confirmed by the Submit button.

When the process of generating the report (Status: Completed) is finished, we can download it (Download button) or delete it (Delete button). The downloaded report in the form of a *.csv file can be opened in the browser or saved to the disk.

In this tab, the downloaded data has a format that we can import into other systems for further analysis.

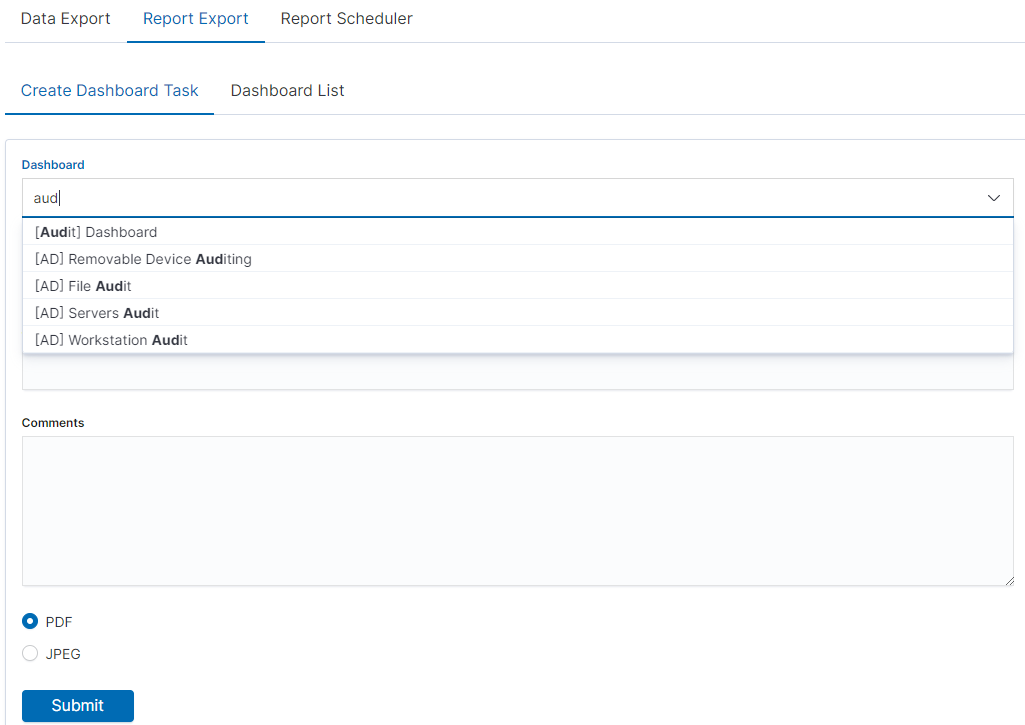

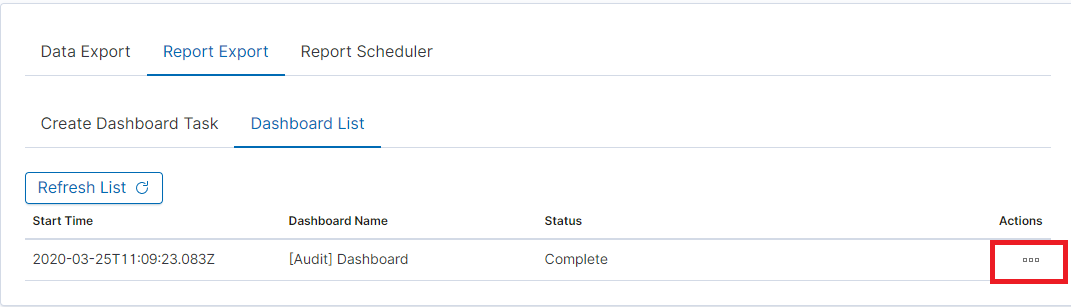

PDF Report¶

In the Export Dashboard tab, we can create graphic reports in PDF files. To create such a report, just from the drop-down list of previously created and saved Dashboards, indicate the one we are interested in, and then confirm the selection with the Submit button. A newly created export with the Processing status will appear on the list under Dashboard Name. When the processing is completed, the Status changes to Complete and it will be possible to download the report.

By clicking the Download button, the report is downloaded to the disk or we can open it in the PDF file browser. There is also to option of deleting the report with the Delete button.

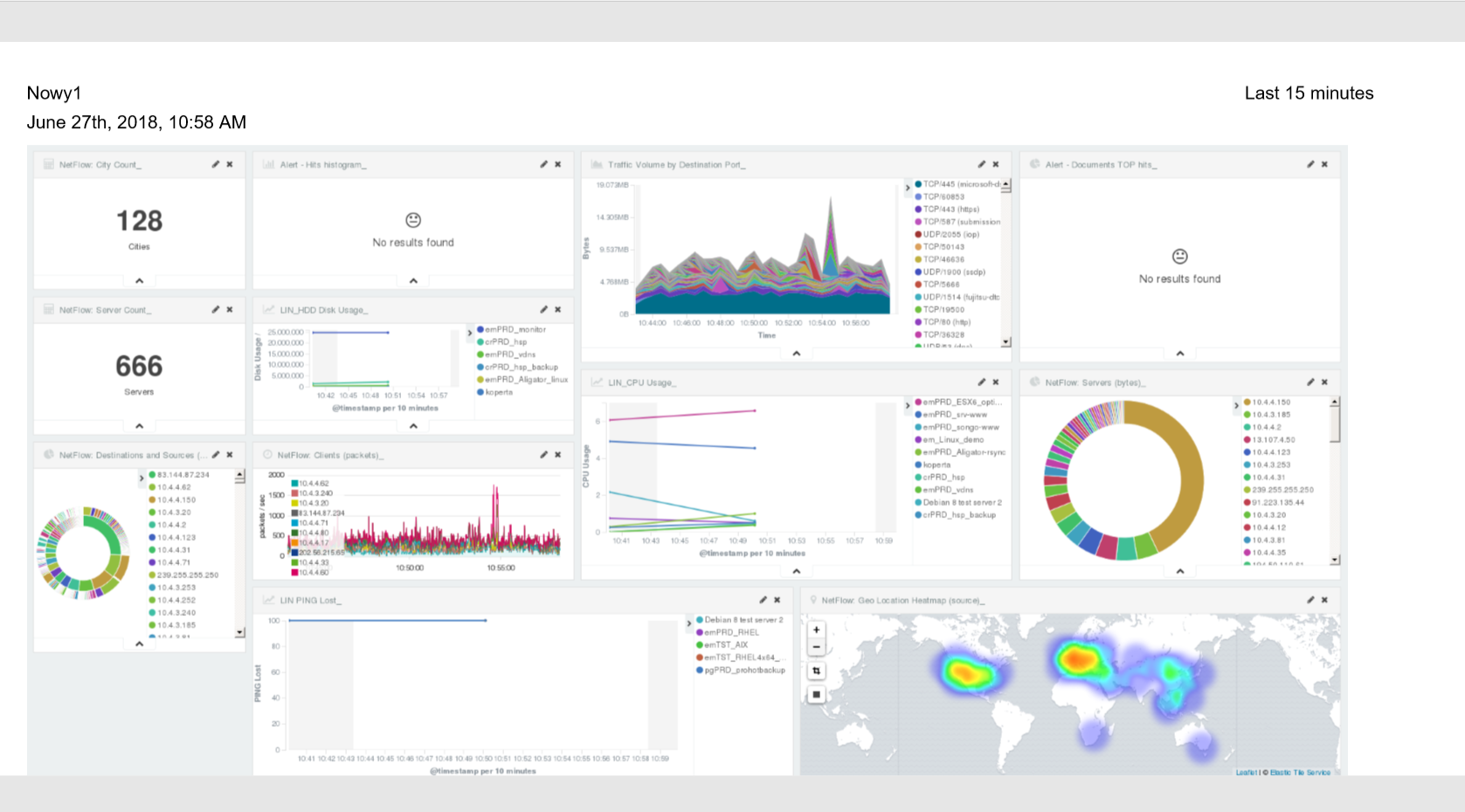

Below is an example report from the Dashboard template generated and downloaded as a PDF file.

PDF report from the table visualization¶

Data from a table visualization can be exported as a PDF report.

To export a table visualization data, follow these steps:

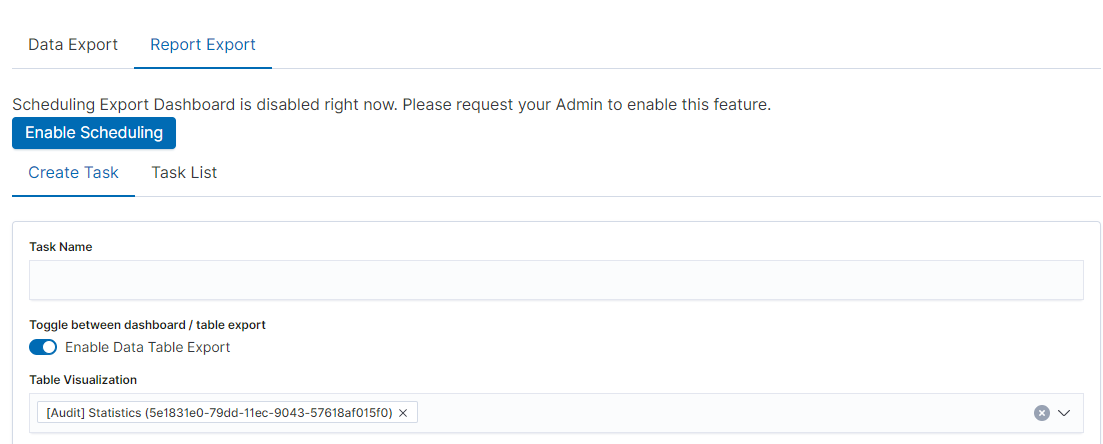

Go to the ‘Report’ module and then to the ‘Report Export’ tab,

Add the new task name in the ‘Task Name’ field,

Toggle the switch ‘Enable Data Table Export’:

Select the table from the ‘Table Visualization’ list,

Select the time range for which the report is to be prepared,

You can select a logo from the ‘Logo’ list,

You can add a report title using the ‘Title’ field,

You can add a report comment using the ‘Comments’ field,

Select the ‘Submit’ button to start creating the report,

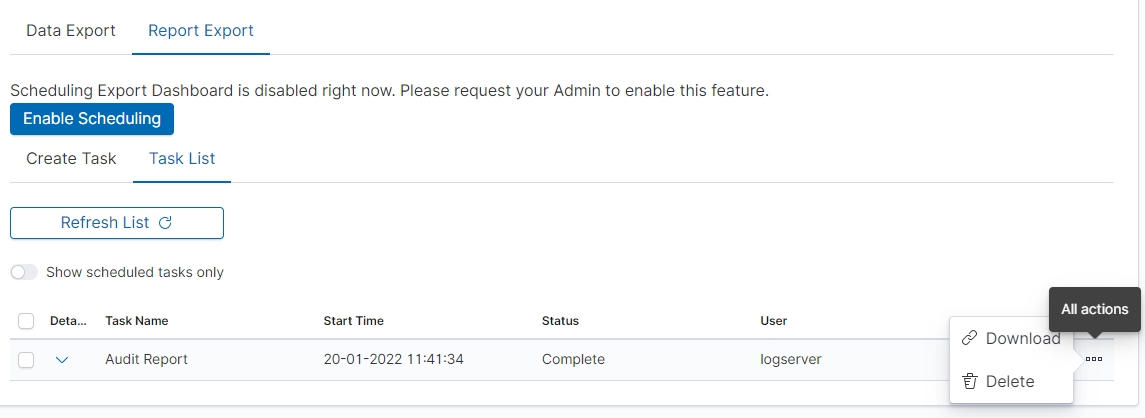

You can follow the progress in the ‘Task List’ tab,

After completing the task, the status will change to ‘Complete’ and you can download the PDF report via ‘Action’ -> ‘Download’:

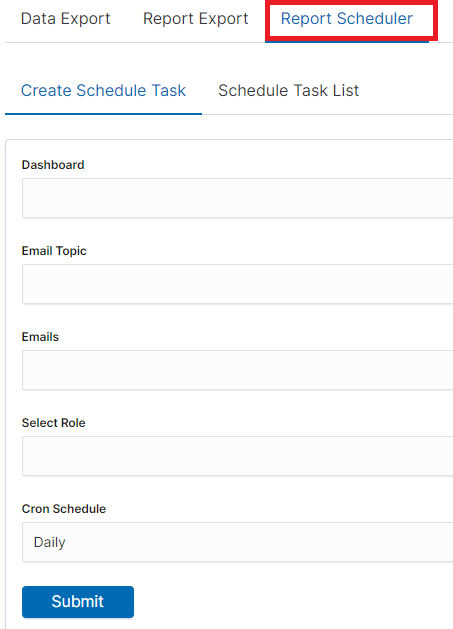

Scheduler Report (Schedule Export Dashboard)¶

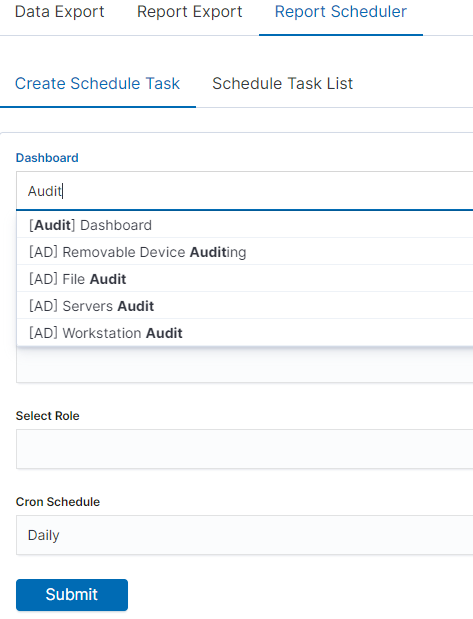

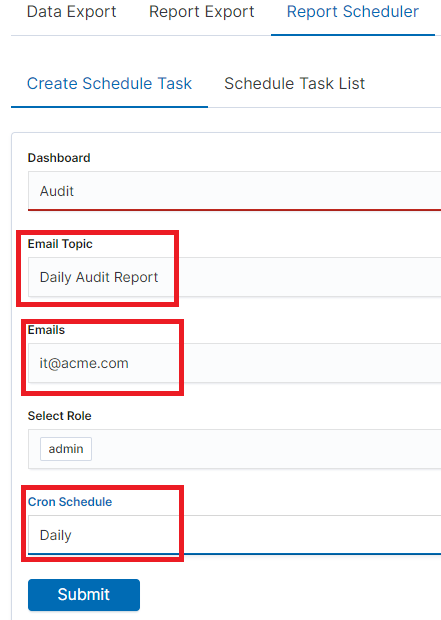

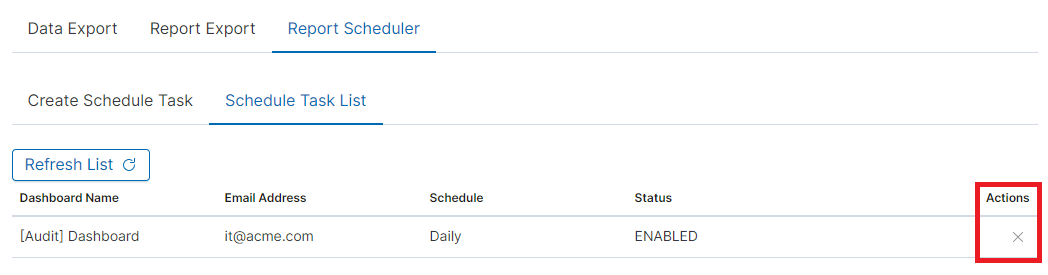

In the Report selection, we have the option of setting the Scheduler which from the Dashboard template can generate a report at time intervals. To do this, go to the Schedule Export Dashboard tab.

Scheduler Report (Schedule Export Dashboard)

In this tab mark the saved Dashboard.

Note: The default time period of the dashboard is last 15 minutes.

Please refer to Discovery > Time settings and refresh to change the time period of your dashboard.

In the Email Topic field, enter the Message title, in the Email field enter the email address to which the report should be sent. From the drop-down list choose at what frequency you want the report to be generated and sent. The action configured in this way is confirmed with the Submit button.

The defined action goes to the list and will generate a report to the e-mail address, with the cycle we set, until we cannot cancel it with the Cancel button.

User roles and object management¶

Users, roles, and settings¶

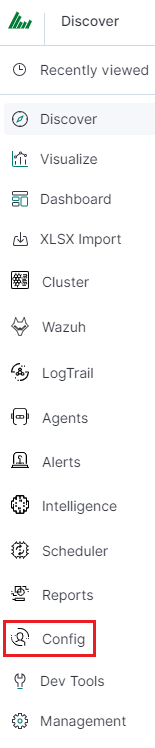

ITRS Log Analytics allows to you manage users and permission for indexes and methods used by them. To do this click the “Config” button from the main menu bar.

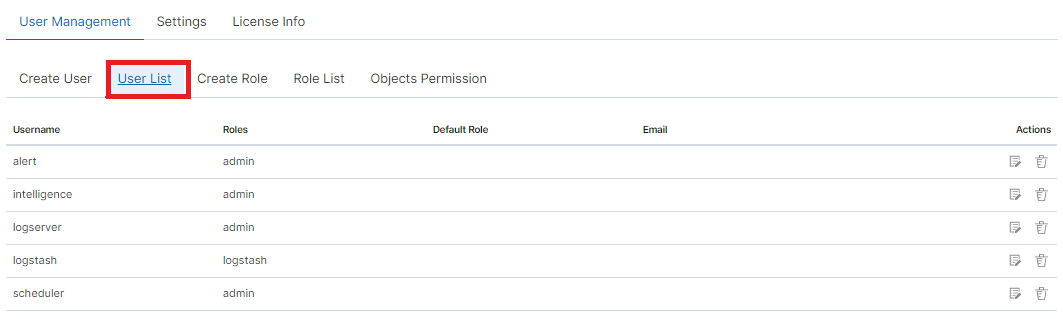

A new window will appear with three main tabs: „User Management”, „Settings” and „License Info”.

From the „User Management” level we have access to the following possibilities: Creating a user in „Create User”, displaying users in „User List”, creating new roles in „Create Role” and displaying existing roles in „List Role”.

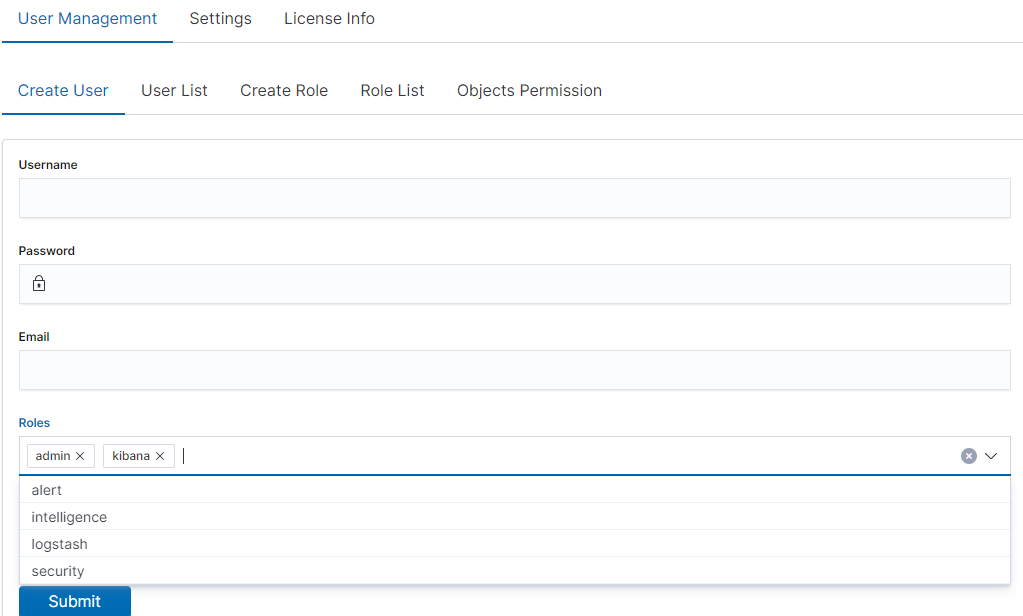

Creating a User (Create User)¶

Creating user¶

To create a new user click on the Config icon and you immediately enter the administration panel, where the first tab is to create a new user (Create User).

In the wizard that opens, we enter a unique username (Username field), and password for the user (field Password) and assign a role (field Role). In this field, we have the option of assigning more than one role. Until we select a role in the Roles field, the Default Role field remains empty. When we mark several roles, these roles appear in the Default Role field. In this field, we have the opportunity to indicate which role for a new user will be the default role with which the user will be associated in the first place when logging in. The default role field has one more important task - it binds all users with the field/role set in one group. When one of the users of this group creates the Visualization or the Dashboard it will be available to other users from this role(group). Creating the account is confirmed with the Submit button.

User’s modification and deletion, (User List)¶

Once we have created users, we can display their list. We do it in the next tab (User List).

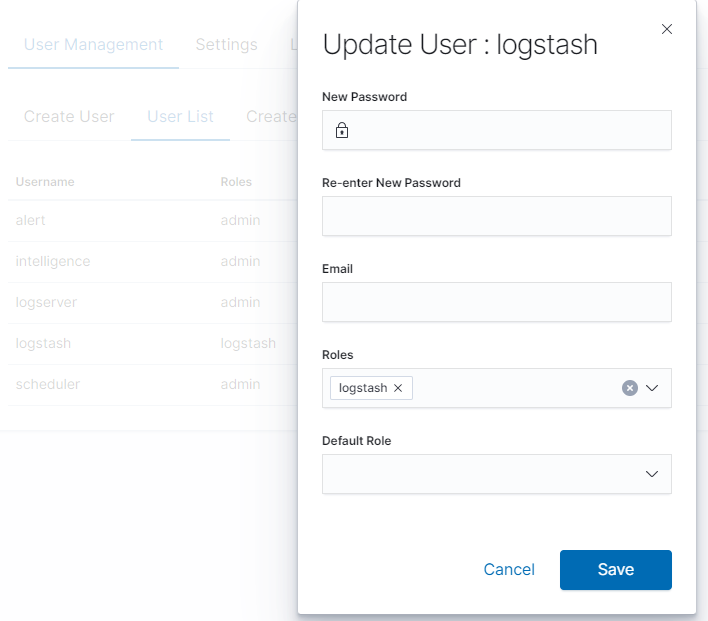

In this view, we get a list of user accounts with assigned roles and we have two buttons: Delete and Update. The first of these is the ability to delete a user account. Under the Update button is a drop-down menu in which we can change the previous password to a new one (New password), change the password (Re-enter New Password), change the previously assigned roles (Roles), to other (we can take the role assigned earlier and give a new one, extend user permissions with new roles). The introduced changes are confirmed with the Submit button.

We can also see the current user settings and clicking the Update button collapses the previously expanded menu.

Create, modify, and delete a role (Create Role), (Role List)¶

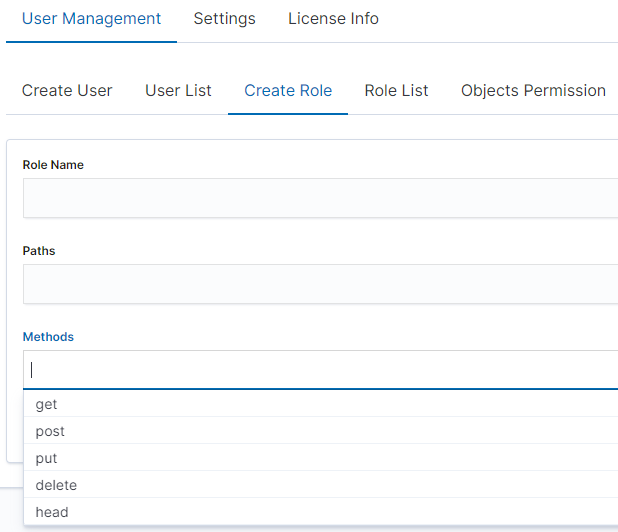

In the Create Role tab, we can define a new role with permissions that we assign to a pattern or several index patterns.

For example, we use the syslog2* index pattern. We give this name in the Paths field. We can provide one or more index patterns, their names should be separated by a comma. In the next Methods field, we select one or many methods that will be assigned to the role. Available methods:

- PUT - sends data to the server

- POST - sends a request to the server for a change

- DELETE - deletes the index/document

- GET - gets information about the index /document

- HEAD - is used to check if the index /document exists

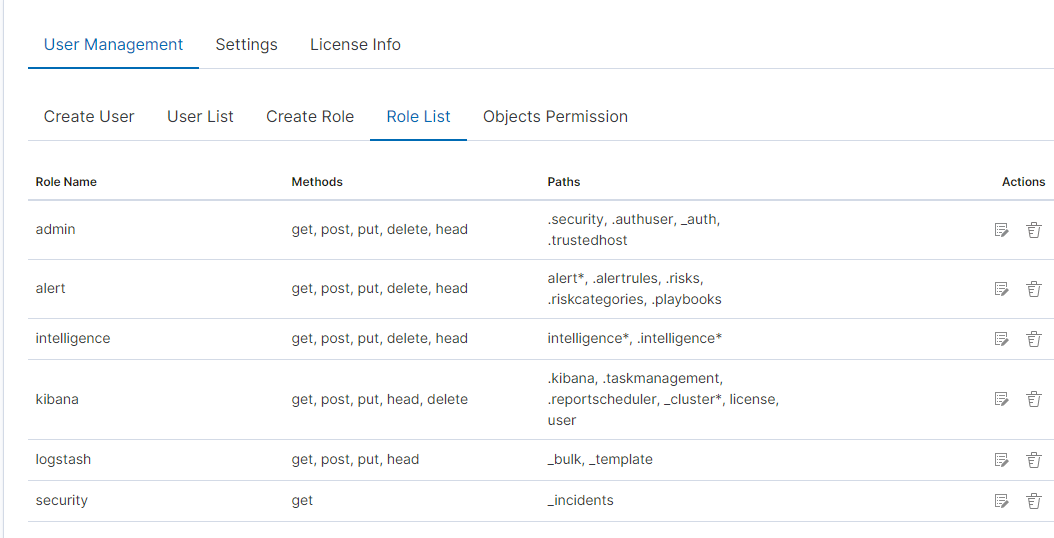

In the role field, enter the unique name of the role. We confirm the addition of a new role with the Submit button. To see if a new role has been added, go to the net Role List tab.

As we can see, the new role has been added to the list. With the Delete button we have the option of deleting it, while under the Update button, we have a drop-down menu thanks to which we can add or remove an index pattern and add or remove a method. When we want to confirm the changes, we choose the Submit button. Pressing the Update button again will close the menu.

Fresh installation of the application has sewn solid roles, which grant users special rights:

- admin - this role gives unlimited permissions to administer/manage the application

- alert - a role for users who want to see the Alert module

- kibana - a role for users who want to see the application GUI

- Intelligence - a role for users who are to see the Intelligence moduleObject access permissions (Objects permissions)

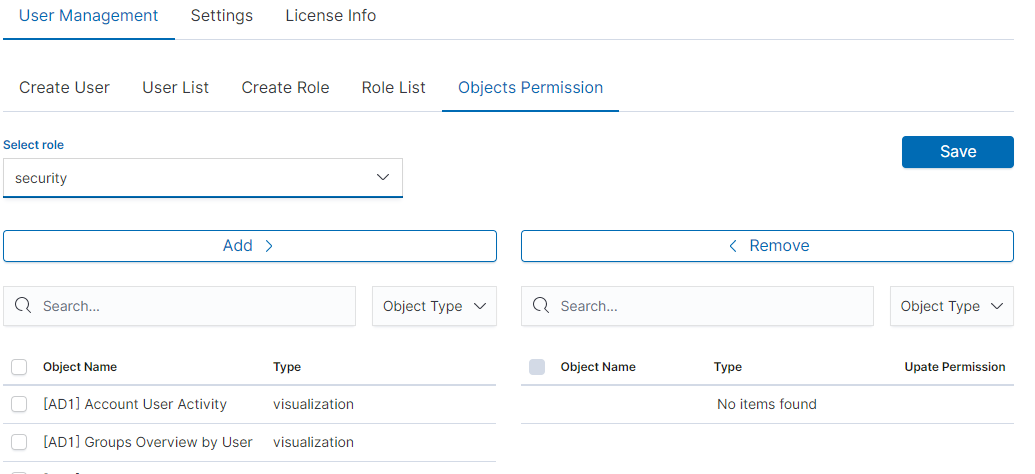

In the User Manager tab, we can parameterize access to the newly created role as well as existing roles. In this tab, we can indicate to which object in the application the role has access.

Example:

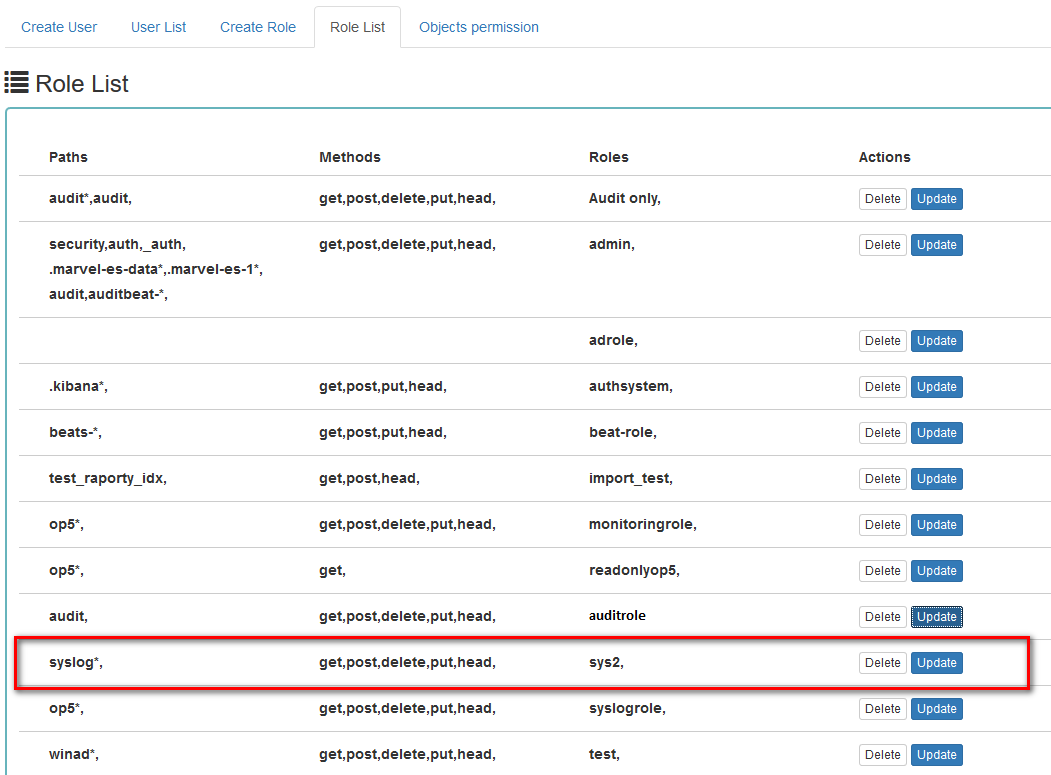

In the Role List tab, we have a role called sys2, it refers to all index patterns beginning with syslog* and the methods get, post, delete, put and head are assigned.

When we go to the Object permission tab, we have the option to choose the sys2 role in the drop-down list choose a role:

After selecting, we can see that we already have access to the objects: two index patterns syslog2* and ITRS Log Analytics-* and on a dashboard Windows Events. There are also appropriate read or update permissions.

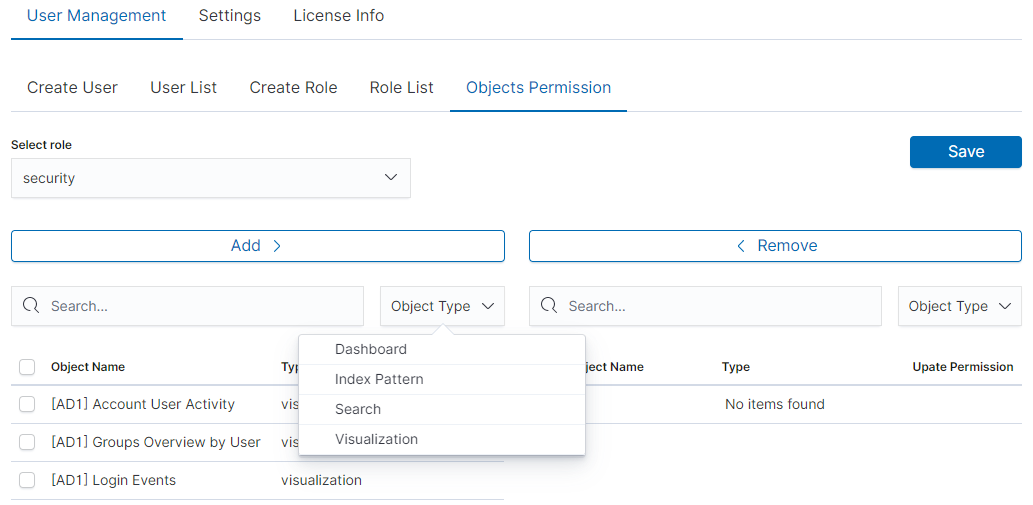

From the list, we have the opportunity to choose another object that we

can add to the role. We have the ability to quickly find this object

in the search engine (Find) and narrow the object class in

the drop-down field “Select object type”. The object type is associated with saved previous documents in the sections Dashboard, Index pattern,

Search, and Visualization.

By buttons,  we have the ability to add or remove or

object, and the Save button to save the selection.

we have the ability to add or remove or

object, and the Save button to save the selection.

Default user and passwords¶

| Address | User | Password | Role | Description | Usage |

|---|---|---|---|---|---|

| https://localhost:5601 | logserver | logserver | logserver | A built-in superuser account | |

| alert | alert | alert | A built-in account for the Alert module | ||

| intelligence | intelligece | intelligence | A built-in account for the Intelligence module | authorizing communication with elasticsearch server | |

| scheduler | scheduler | scheduler | A built-in account for the Scheduler module | ||

| logstash | logstash | logstash | A built-in account for authorized comuunication form Logstash | ||

| cerebro | system acconut only | A built-in account for authorized comuunication from Cerebro moudule |

Changing the password for the system account¶

Account Logserver

- Update /etc/kibana/kibana.ymlUpdate password in _/_opt/license-service/license-service.conf* file:

elasticsearch_connection: hosts: ["10.4.3.185:9200"] username: logserver password: "new_logserver_password" https: true

- Update the password in the curator configuration file: /usr/share/kibana/curator/curator.yml

http_auth: logserver:"new_logserver_password

Account Intelligence

Update /opt/ai/bin/conf.cfg

vi /opt/ai/bin/conf.cfg password=new_intelligence_password

Account Alert

Update file /opt/alert/config.yaml

vi /opt/alert/config.yaml es_password: alert

Account Scheduler

Update /etc/kibana/kibana.yml

vi /etc/kibana/kibana.yml elastscheduler.password: "new_scheduler_password"

Account Logstash

Update the Logstash pipeline configuration files (*.conf) in the output sections:

vi /etc/logstash/conf.d/*/*.conf output { elasticsearch { hosts => ["localhost:9200"] index => "syslog-%{+YYYY.MM}" user => "logstash" password => "new_password" } }

Account License

Update file /opt/license-service/license-service.conf

elasticsearch_connection: hosts: ["127.0.0.1:9200"] username: license password: "new_license_password"

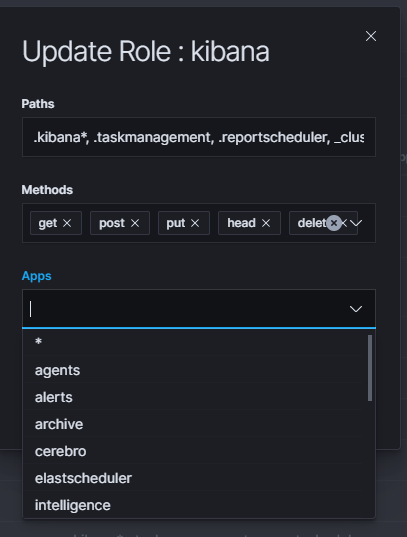

Module Access¶

You can restrict access to specific modules for a user role. For example: the user can only use the Discovery, Alert, and Cerebro modules, the other modules should be inaccessible to the user.

You can do this by editing the roles in the Role List and selecting the application from the Apps list. After saving, the user has access only to specific modules.

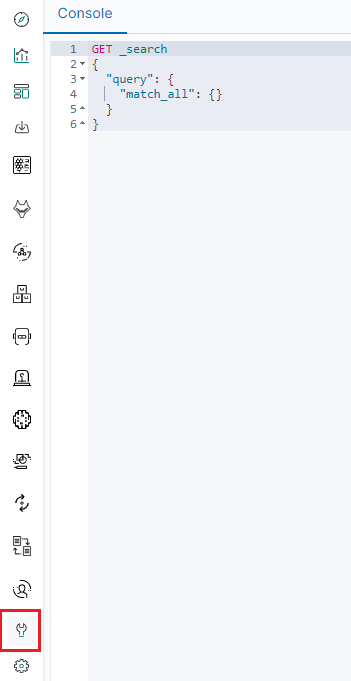

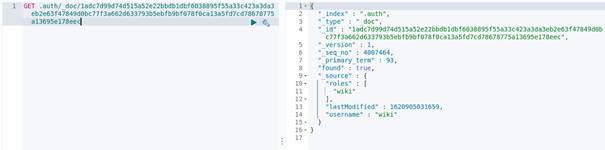

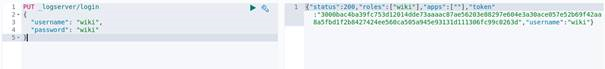

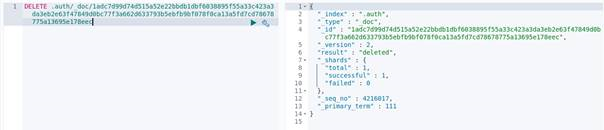

Manage API keys¶

The system allows you to manage, create, and delete API access keys from the level of the GUI management application.

Examples of implementation:

From the main menu select the “Dev Tools” button:

List of active keys:

Details of a single key:

Create a new key:

Deleting the key:

Separate data from one index to different user groups¶

We can Separate data from one index to different user groups using aliases. For example, in one index we have several tags:

To separate the data, you must first set up an alias on the appropriate tag.

Then assume a pattern index on the above alias. Finally, we can assign the appropriate role to the new index pattern.

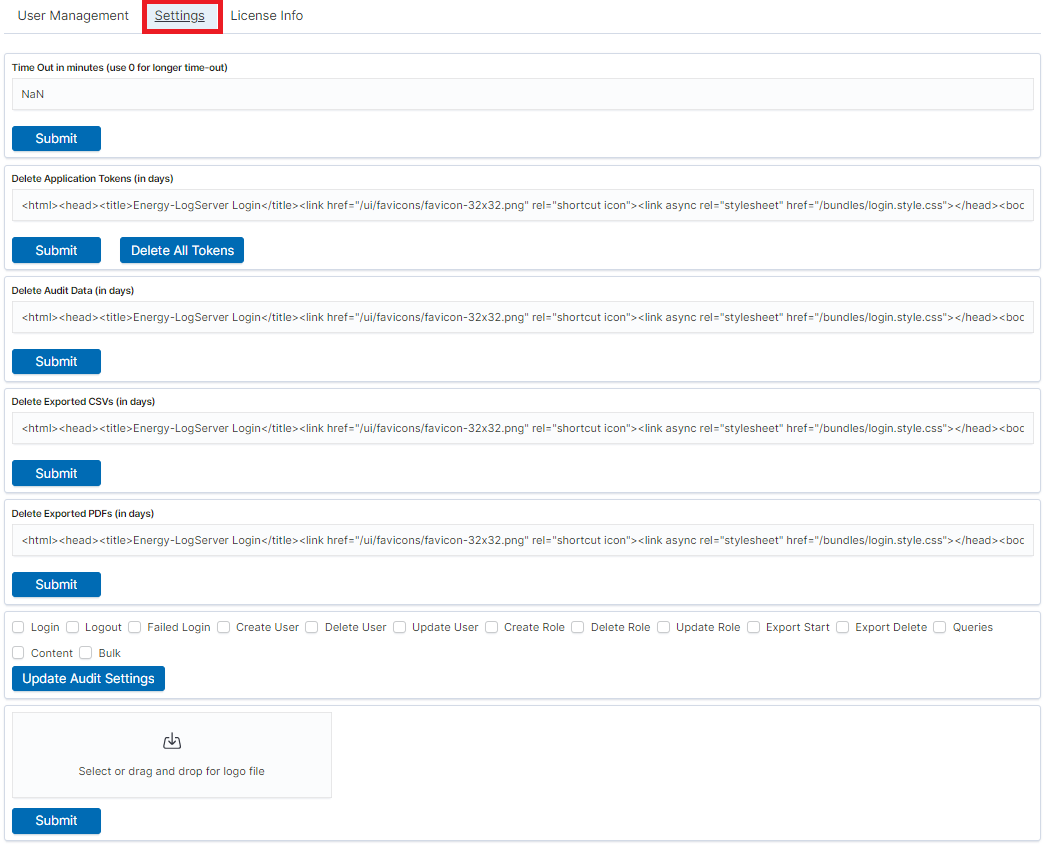

Settings¶

General Settings¶

The Settings tab is used to set the audit on different activities or events and consists of several fields:

- Time Out in minutes field - this field defines the time after how many minutes the application will automatically log you off

- Delete Application Tokens (in days) - in this field, we specify after what time the data from the audit should be deleted

- Delete Audit Data (in days) field - in this field, we specify after what time the data from the audit should be deleted

- The next fields are checkboxes in which we specify what kind of events are to be logged (saved) in the audit index. The events that can be monitored are: logging (Login), logging out (Logout), creating a user (Create User), deleting a user (Delete User), updating user (Update User), creating a role (Create Role), deleting a role (Delete Role), update of the role (Update Role), start of export (Export Start), delete of export (Export Delete), queries (Queries), result of the query (Content), if attempt was made to perform a series of operation (Bulk)

- Delete Exported CSVs (in days) field - in this field, we specify after which time exported files with CSV extension have to be removed

- Delete Exported PDFs (in days) field - in this field, we specify after which time exported files with PDF extension have to be removed

Each field is assigned the “Submit” button thanks to which we can confirm the changes.

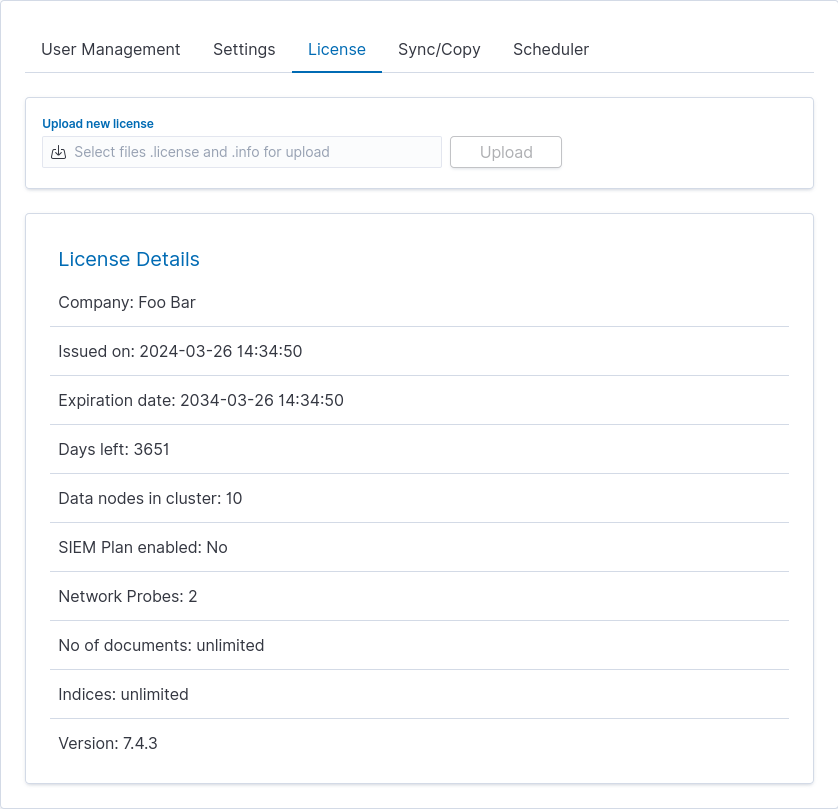

License (License Info)¶

The License Information tab consists of several non-editable information fields.

Also, if you are logged in as an administrator you will be able to upload new license from here.

These fields contain information:

- Company - who owns the license, in this case, Foo Bar.

- Issued on - license creation date

- Expiration date - when the license will expire

- Days left - how many days left before it will expire

- Data nodes in cluster - how many nodes we can put in one cluster - in this case, 10

- SIEM Plan enabled - is SIEM Plan covered by the license

- Network Probes - count of NetworkProbes covered by the license

- No of documents - license can be limited by docs count

- Indices - license can be limited by index pattern names

- Version - version of the product

Renew license¶

To change the ITRS Log Analytics license files on a running system, use the “Upload new license” option at the top of the “License” tab.

- Select the provided license files, e.g.: es_123.info, es_123.license.

- Click upload.

- Files will be uploaded and verified. New license information will appear.

- Verify new license information and click “Submit” when ready.

- It will install itself in the cluster - no need to manually put it on all nodes.

Special accounts¶

At the first installation of the ITRS Log Analytics application, apart from the administrative account (logserver), special applications are created in the application: alert, intelligence, and scheduler.

- Alert Account - this account is connected to the Alert Module which is designed to track events written to the index for the previously defined parameters. If these are met the information action is started (more on the action in the Alert section)

- Intelligence Account - this account is related to the module of artificial intelligence which is designed to track events and learn the network based on previously defined rules artificial intelligence based on one of the available algorithms (more on operation in the Intelligence chapter)

- Scheduler Account - the scheduler module is associated with this account, which corresponds to, among others for generating reports

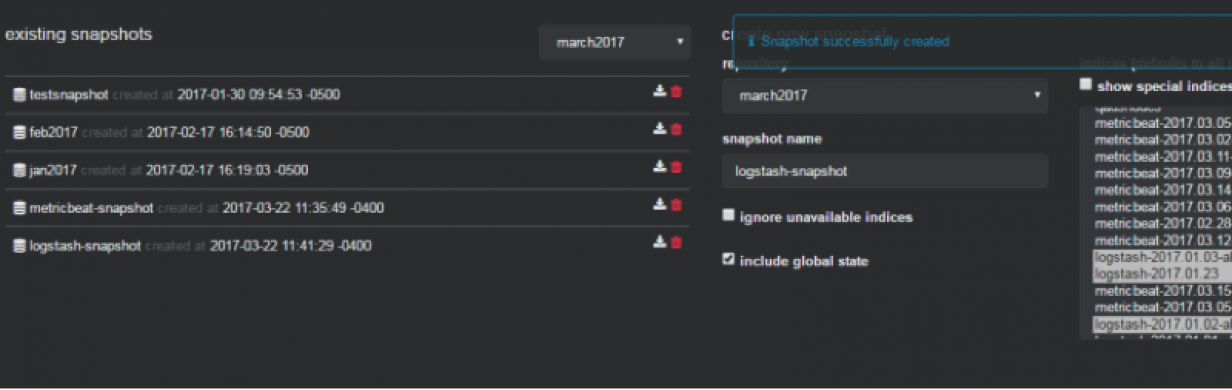

Backup/Restore¶

Backing up¶

The backup bash script is located on the hosts with Elasticsearch in the location: /usr/share/elasticsearch/utils/configuration-backup.sh.

The script is responsible for backing up the basic data in the Logserver system (these data are the system indexes found in Elasticsearch of those starting with a dot ‘.’ in the name), the configuration of the entire cluster, the set of templates used in the cluster and all the components.

These components include the Logstash configuration located in /etc/logstash and the Kibana configuration located in /etc/kibana.

All data is stored in the /tmp folder and then packaged using the /usr/bin/tar utility to tar.gz format with the exact date and time of execution in the target location, then the files from /tmp are deleted.

crontab

It is recommended to configure crontab.

- Before executing the following commands, you need to create a crontab file, set the path to backup, and direct them there.

In the below example, the task was configured on hosts with the Elasticsearch module on the root.

# crontab -l #Printing the Crontab file for the currently logged in user

0 1 * * * /bin/bash /usr/share/elasticsearch/utils/configuration-backup.sh

- The client-node host saves the backup in the /archive/configuration-backup/ folder.

- Receiver-node hosts save the backup in the /root/backup/ folder.

Restoration from backup¶

To restore the data, extract the contents of the created archive, e.g.

# tar -xzf /archive/configuration-backup/backup_name-000000-00000.tar.gz -C /tmp/restore

Then display the contents and select the files to restore (this will look similar to the following):

# ls -al /tmp/restore/00000-11111/

drwxr-xr-x 2 root root 11111 01-08 10:29 .

drwxr-xr-x 3 root root 2222 01-08 10:41 ..

-rw-r--r-- 1 root root 3333333 01-08 10:28 .file1.json

-rw-r--r-- 1 root root 4444 01-08 10:28 .file_number2.json

-rw-r--r-- 1 root root 5555 01-08 10:29 .file3.json

-rw-r--r-- 1 root root 666 01-08 10:29 .file4.json

-rw-r--r-- 1 root root 7777 01-08 10:29 .file5.json

-rw-r--r-- 1 root root 87 01-08 10:29 .file6.json

-rw-r--r-- 1 root root 1 01-08 10:29 file6.json

-rw-r--r-- 1 root root 11 01-08 10:29 .file7.json

-rw-r--r-- 1 root root 1234 01-08 10:29 file8.tar.gz

-rw-r--r-- 1 root root 0000 01-08 10:29 .file9.json

To restore any of the system indexes, e.g. .security, execute the commands:

# /usr/share/kibana/elasticdump/elasticdump --output="http://logserver:password@127.0.0.1:9200/.kibana" --input="/root/restore/20210108-102848/.security.json" –type=data

# /usr/share/kibana/elasticdump/elasticdump --output="http://logserver:password@127.0.0.1:9200/.kibana" --input="/root/restore/20210108-102848/.security_mapping.json" --type=mapping

To restore any of the configurations e.g. kibana/logstash/elastic/wazuh, follow the steps below:

# systemctl stop kibana

# tar -xvf /tmp/restore/20210108-102848/kibana_conf.tar.gz -C / --overwrite

# systemctl start kibana

To restore any of the templates, perform the following steps for each template:

- Select from the

templates.jsonfile the template you are interested in, omitting its name - Move it to a new

jsonfile, e.g.test.json - Load by specifying the name of the target template in the link

# curl -s -XPUT -H 'Content-Type: application/json' -u logserver '127.0.0.1:9200/_template/test -d@/root/restore/20210108-102848/test.json

To restore the cluster settings, execute the following command:

# curl -s -XPUT -H 'Content-Type: application/json' -u logserver '127.0.0.1:9200/_cluster/settings' -d@/root/restore/20210108-102848/cluster_settings.json

Audit actions¶

AUTHORIZATION PLUGIN¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| USER_[CREATE|UPDATE|DELETE] | _logserver/accounts | whole body with diff |

| ROLE_[CREATE|UPDATE|DELETE] | _logserver/constraints | whole body with diff |

| BULK | _bulk | whole body if enabled |

| QUERY | * | whole body if enabled |

| OBJECTS | .kibana | whole body if enabled |

Paths excluded from auditing

//_nodes*/_stats*/.auth/.authconfig*

CONFIG¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| TOKENS DELETED | post:/api/setting/job/deletealltokens | empty |

| SETTINGS TOKENDELETE | put:/api/setting/tokendelete | payload.value |

| SETTINGS TIMEOUT | put:/api/setting/ttl | payload.value |

| SETTINGS AUDIT SELECTION | put:/api/setting/auditselection | payload.value1 |

| SETTINGS AUDIT EXCLUSION | put:/api/setting/auditexclusion | payload |

| SETTINGS ALERT EXCLUDE FIELDS | put:/api/setting/alert_exclude_fields | payload.value1 |

| SETTINGS AUTH DOMAINS | put:/api/setting/auth_domains | payload.default_domain |

REPORTS¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| DATA EXPORT CREATED | post:/api/reports/data/export | payload.taskName |

| MANUAL DATA EXPORT CREATED | post:/api/reports/data/export_manual | payload.user |

| DATA EXPORT EDITED | put:/api/reports/data/export | payload.taskName |

| DASHBOARD REPORT EXPORT CREATED | post:/api/reports/dashboard/export | payload.taskName |

| DASHBOARD REPORT EXPORT EDITED | put:/api/reports/dashboard/export | payload.taskName |

| DATA TABLE REPORT EXPORT CREATED | post:/api/reports/table/export | payload.taskName |

| DATA TABLE REPORT EXPORT EDITED | put:/api/reports/table/export | payload.taskName |

| SCHEDULED TASK ENABLED | put:/api/reports/scheduler/enable | payload.id |

| SCHEDULED TASK DISABLED | put:/api/reports/scheduler/disable | payload.id |

| TASKS DELETED | delete:/api/reports | payload.objs |

| SETTINGS PDF_EXPIRY | post:/api/reports/settings/pdf | payload.pdfExpiry |

| SETTINGS CSV_EXPIRY | post:/api/reports/settings/csv | payload.csvExpiry |

| REPORT UPLOAD LOGO | post:/api/reports/settings/logos | payload.fileName |

| ONGOING TASK STOP | post:/api/reports/stop | params.taskId |

INDEX MANAGEMENT¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| ACTION CREATED | post:/api/index_management/action | payload.name |

| ACTION EDITED | put:/api/index_management/action | payload.name |

| ACTION START NOW | post:/api/index_management/action/run_action | payload.id |

| ACTION DELETED | delete:/api/index_management/action | params.name |

| SYSTEM INDEX ROLLOVER CONFIGURE | post:/api/index_management/settings/rollover/.agents | params.indexName |

| SYSTEM INDEX ROLLOVER CONFIGURE | post:/api/index_management/settings/rollover/alerts | params.indexName |

| SYSTEM INDEX ROLLOVER CONFIGURE | post:/api/index_management/settings/rollover/audit | params.indexName |

ARCHIVE¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| ARCHIVAL TASK CREATED | post:/api/archive/archive_task | payload.id |

| ARCHIVAL TASK UPDATED | put:/api/archive/archive_task | params.taskId |

| ARCHIVAL TASK START NOW | post:/api/archive/archive_task/run | params.taskId |

| TASKS DELETED | delete:/api/archive/tasks | payload.objs |

| SEARCH TASK CREATED | post:/api/archive/search_task/run | payload.searchtext |

| RESTORE TASK CREATED | post:/api/archive/restore_task/run | payload.destinationIndex |

ALERTS¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| ALERT RULE CREATED | post:/api/alerts/alertrule | payload.alertrulename |

| ALERT RULE EDITED | put:/api/alerts/alertrule | payload.alertrulename |

| ALERT RULE RAN ONCE | post:/api/alerts/alertrule/runonce | payload.id |

| ALERT RULES SAVED | post:/api/alerts/alertrule/saverules | empty |

| ALERT RULE DELETED | delete:/api/alerts/alertrule | params.id |

| ALERT GROUP CREATED | post:/api/alerts/groups | empty |

| ALERT GROUP RENAMED | put:/api/alerts/groups | payload.rename |

| ALERT GROUP DELETED | delete:/api/alerts/groups | payload.groupName |

| ALERT ADDED TO GROUP | put:/api/alerts/updatealerts | empty |

| ALERT CHANGED ROLES | put:/api/alerts/alertrules/changeroles | payload.alertsList |

| ALERT MANUAL INCIDENT CREATED | post:/api/alerts/incidents/create_manual | payload.data.rule_name |

| ALERT RULE [enabled|disabled] | put:/api/alerts/alertrule/switch | payload.alertsList |

| ALERT RULE INCIDENT EDITED | put:/api/alerts/incidents | payload.id + dynamic values

|

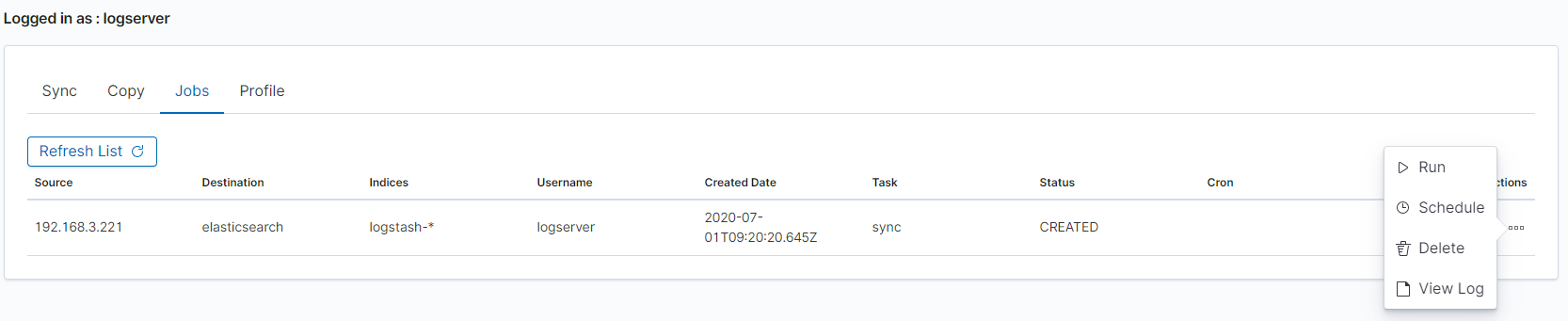

SYNC¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| SYNC PROFILE CREATED | post:/api/sync/clusterprofile | payload.host |

| SYNC SYNCHRONISED | put:/api/sync/syncTask | payload.destination |

| SYNC COPIED | post:/api/sync/copyTask | payload.destination |

| SYNC PROFILE DELETED | post:/api/sync/syncTask/delete | payload.id |

| SYNC JOB RUNED | post:/api/sync/runTask | payload.id |

AGENTS¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| AGENTS AGENT RELOADED | post:/api/agents/reloadagents | params.id |

| AGENTS MASTERAGENT RELOADED | post:/api/agents/reloadmasteragent | params.id |

| AGENTS DELETED | delete:/api/agents | params.uid |

| AGENTS FILE CREATED | post:/api/agents/templates/file | payload.file.name |

| AGENTS FILE DELETED | delete:/api/agents/files | empty |

| AGENTS FILE EDITED | put:/api/agents/files | payload.name |

| AGENTS TEMPLATE CREATED | post:/api/agents/templates | payload.template.name |

| AGENTS TEMPLATE EDITED | put:/api/agents/templates | payload.template.name |

| AGENTS TEMPLATE DELETED | delete:/api/agents/templates | payload.params.id |

| AGENTS BEATS [started|restarted|stoped] | post:/api/agents/manage/*

|

[params.beatname|payload] |

INTELLIGENCE¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| INTELLIGENCE RULE STOPED | get:/api/intelligence/intelligence_airules_set_stoprule | query.rule_uid |

| INTELLIGENCE RULE STARTED | get:/api/intelligence/intelligence_airules_set_startrule | query.rule_uid |

| INTELLIGENCE RULE DELETED | get:/api/intelligence/intelligence_airules_set_deleterule | query.rule_uid |

| INTELLIGENCE RULE [created|edited] | get:/api/intelligence/intelligence_modelcreation_set_formdata | query.rule_name |

NETWORK-PROBE¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

| NETWORK-PROBE FILE CREATED | post:/api/network-probe/files | payload.file.path |

| NETWORK-PROBE FILE DELETED | delete:/api/network-probe/files | payload.filePath |

| NETWORK-PROBE FILE REREGISTERED | post:/api/network-probe/files/register | payload.host.ip |

| NETWORK PROBE SERVICES [stoped|started|restarted] | post:/api/network-probe/services | payload.services.names |

| NETWORK-PROBE PIPELINES ENABLED | post:/api/network-probe/{probeId}/pipelines/enable | params.hostId, payload.pipelinesIds |

| NETWORK-PROBE PIPELINES DISABLED | post:/api/network-probe/{probeId}/pipelines/disable | params.hostId, payload.pipelinesIds |

| NETWORK-PROBE PIPELINES RELOADED | post:/api/network-probe/pipelines/reload | empty |

ELASTSCHEDULER¶

| ACTION TYPE | PATH | FROM REQUEST |

|---|---|---|

[ARCHIVE|INDEX_MANAGEMENT|SYNC] ACTION [enabled|disabled] |

put:/api/elastscheduler/schedulerjob | payload.name |

| SCHEDULER ACTION [enabled|disabled] | put:/api/elastscheduler/schedulerjob | payload.name |

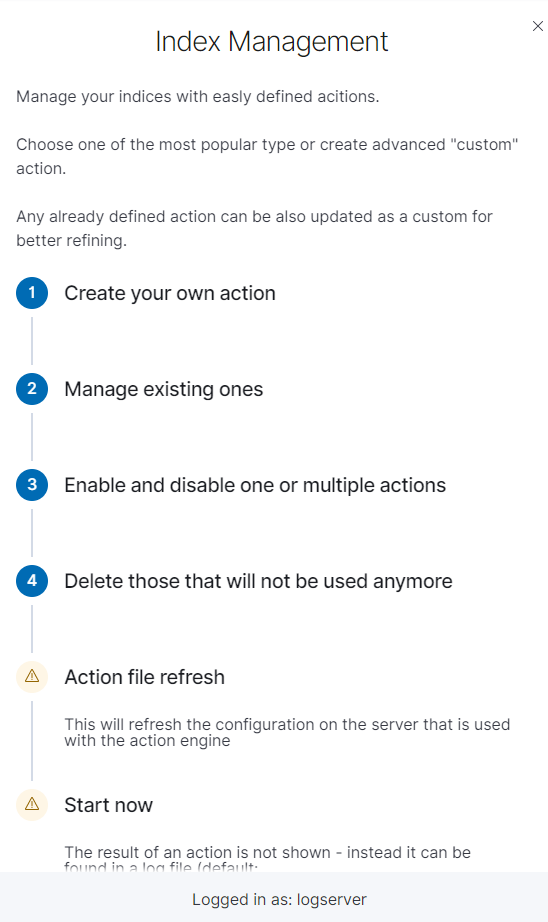

Index management¶

Note Before using the Index Management module is necessary to set an appropriate password for the Log Server user in the following file: /usr/share/kibana/curator/curator.yml*

The Index Management module allows you to manage indexes and perform activities such as:

- Closing indexes,

- Delete indexes,

- Performing a merge operation for index,

- Shrink index shards,

- Index rollover.

The Index Management module is accessible through the main menu tab.

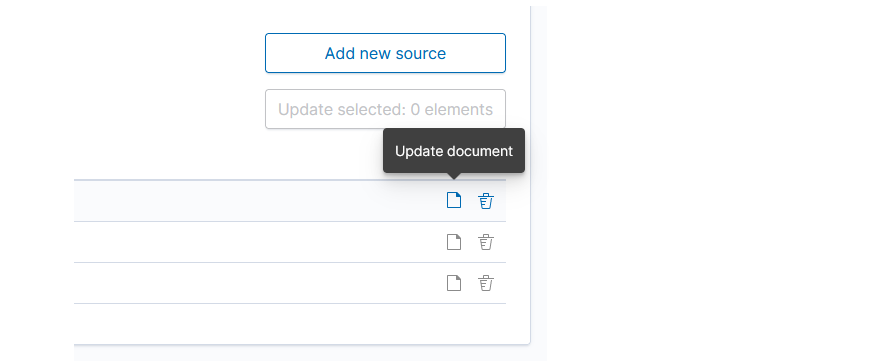

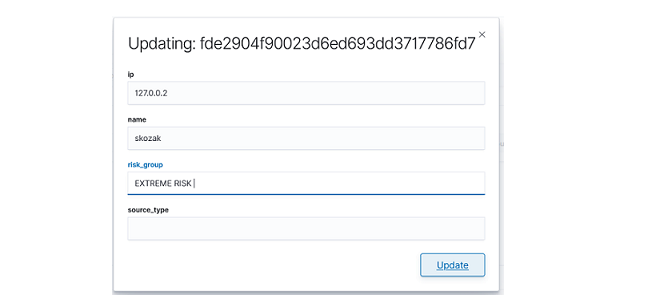

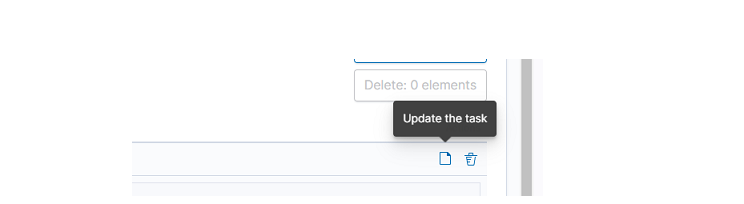

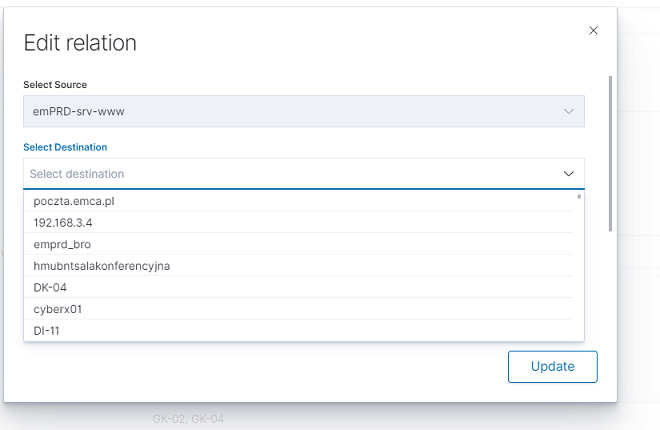

The main module window allows you to create new Create Task tasks, view and manage created tasks, that is:

- Update,

- Custom update,

- Delete,

- Start now,

- Disable / Enable.

Note Use the Help button

By using the Help button you can get a detailed description of the current actions

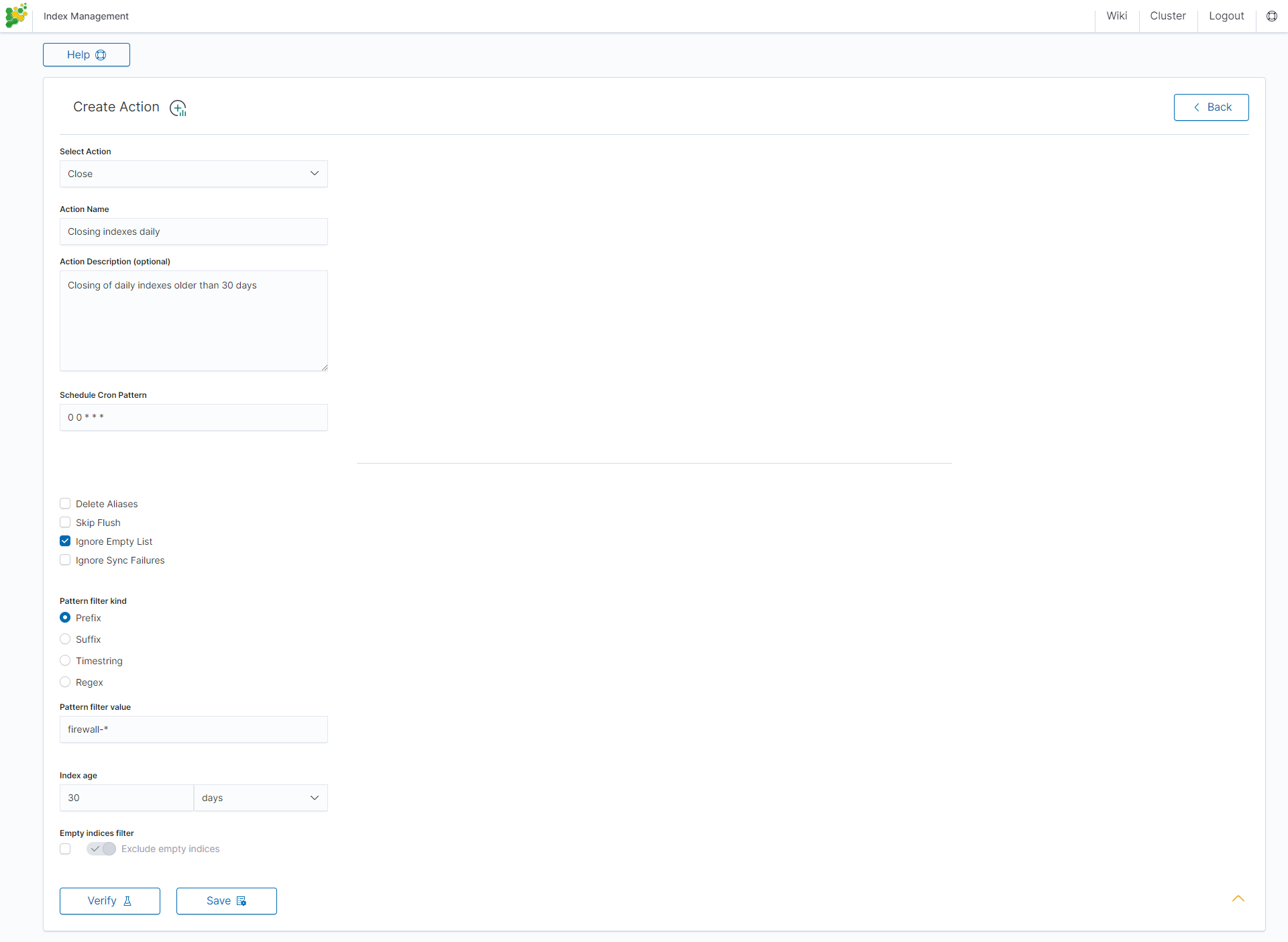

Close action¶

This action closes the selected indices and optionally deletes associated aliases beforehand.

Settings required:

- Action Name

- Schedule Cron Pattern - it sets when the task is to be executed, to decode cron format use the online tool: https://crontab.guru,

- Pattern filter kind - it sets the index filtertype for the task,

- Pattern filter value - it sets the value for the index filter,

- Index age - it sets the index age for the task.

Optional settings:

- Timeout override

- Ignore Empty List

- Continue if exception

- Closed indices filter

- Empty indices filter

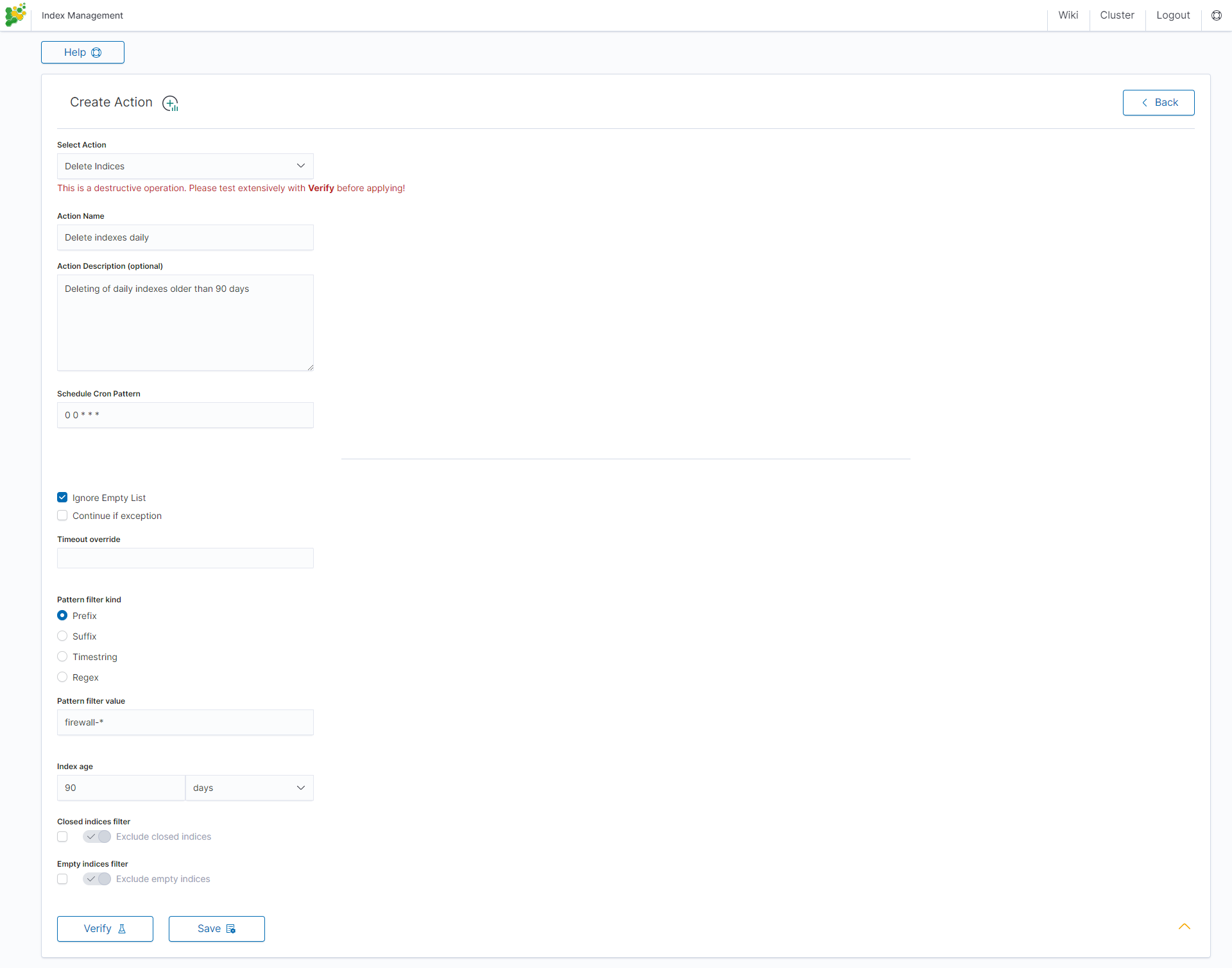

Delete action¶

This action deletes the selected indices.

Settings required:

- Action Name

- Schedule Cron Pattern - it sets when the task is to be executed, to decode cron format use the online tool: https://crontab.guru/,

- Pattern filter kind - it sets the index filtertype for the task,

- Pattern filter value - it sets the value for the index filter,

- Index age - it sets the index age for the task.

Optional settings:

- Delete Aliases

- Skip Flush

- Ignore Empty List

- Ignore Sync Failures

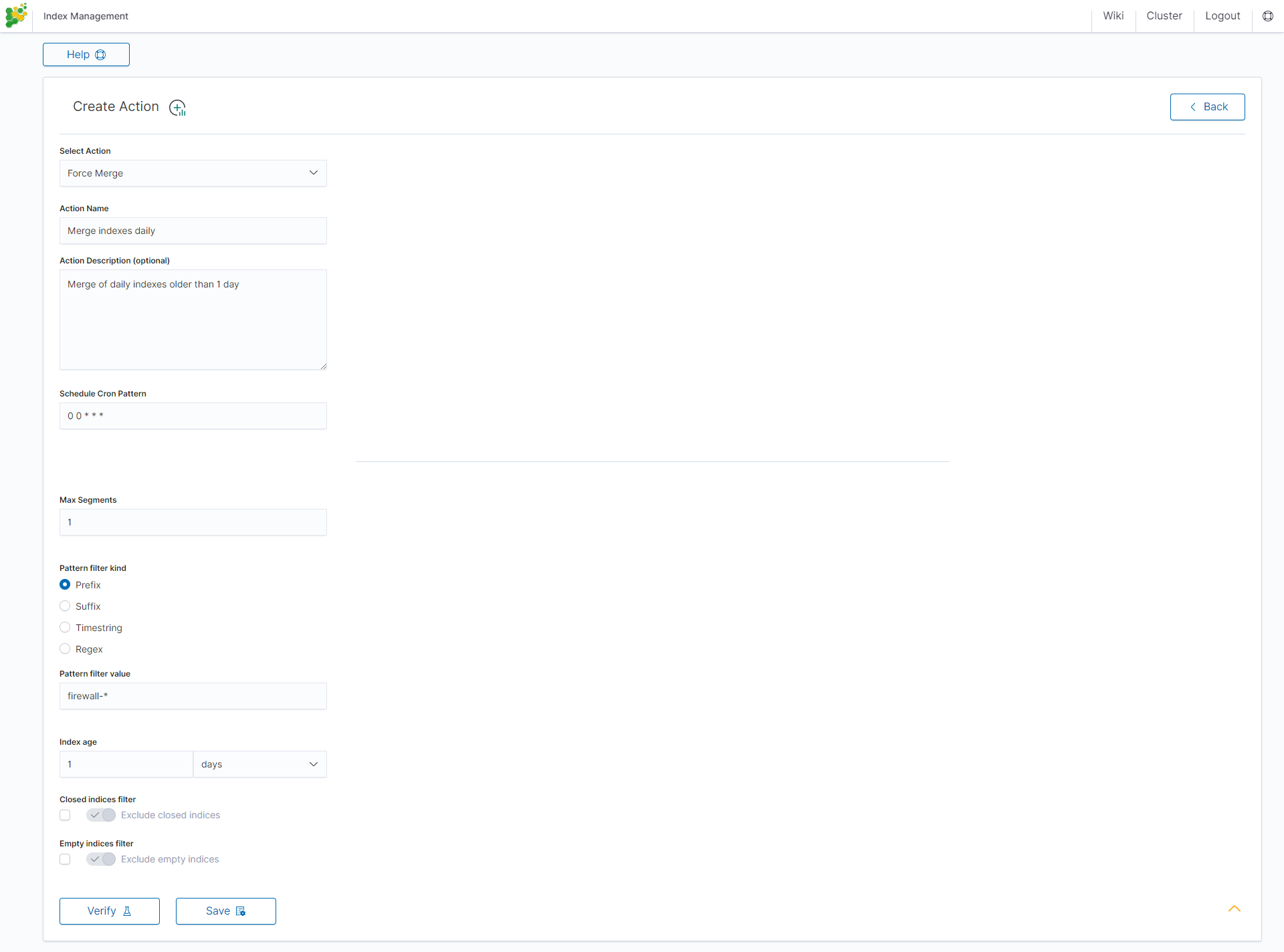

Force Merge action¶

This action performs a Force Merge on the selected indices, merging them in the specific number of segments per shard.

Settings required:

- Action Name

- Schedule Cron Pattern - it sets when the task is to be executed, to decode cron format use the online tool: https://crontab.guru/,

- Max Segments - it sets the number of segments for the shard,

- Pattern filter kind - it sets the index filtertype for the task,

- Pattern filter value - it sets the value for the index filter,

- Index age - it sets the index age for the task.

Optional settings:

- Ignore Empty List

- Ignore Sync Failures

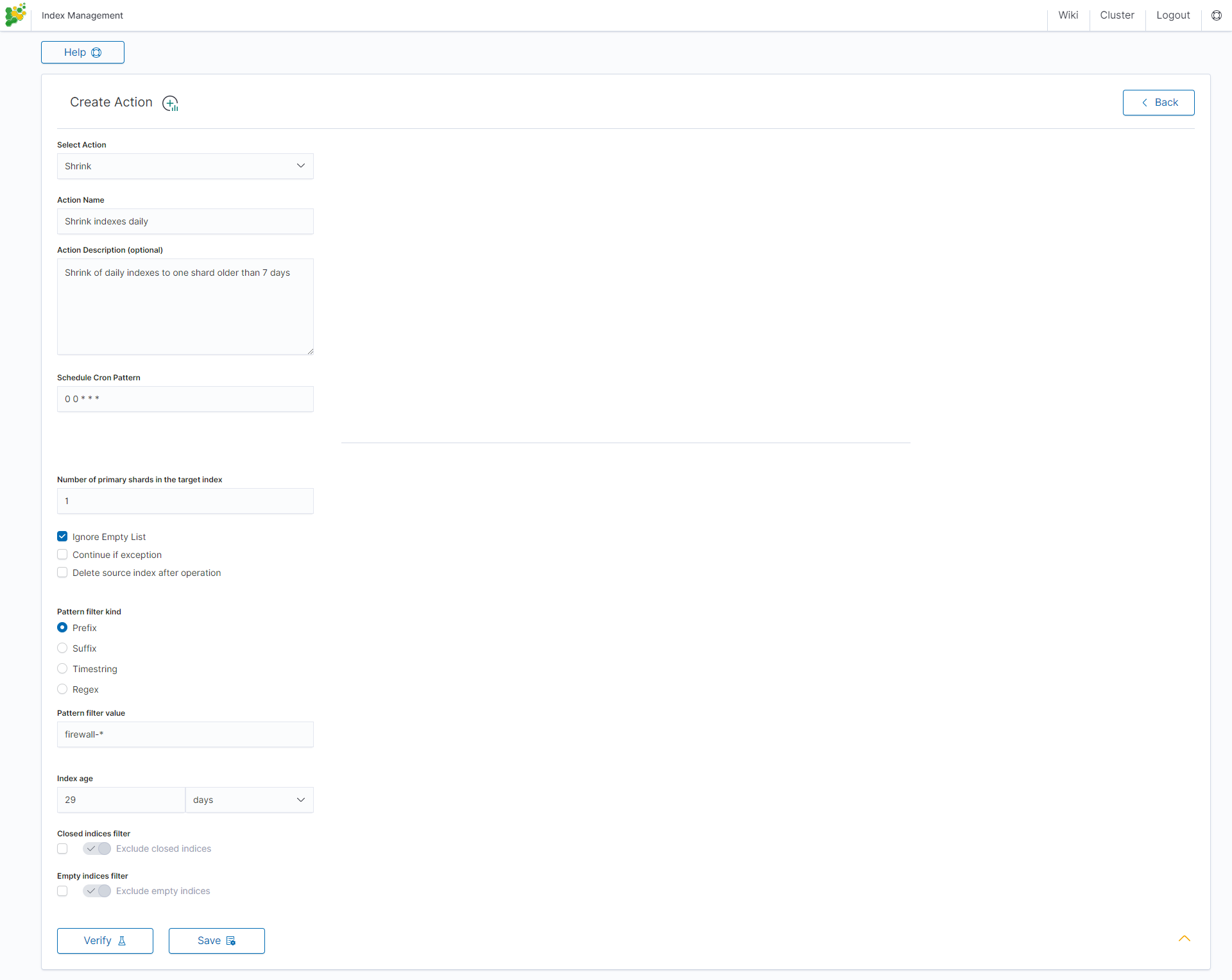

Shrink action¶

Shrinking an index is a good way to reduce the total shard count in your cluster.

Several conditions need to be met in order for index shrinking to take place:

- The index must be marked as read-only

- A (primary or replica) copy of every shard in the index must be relocated to the same node

- The cluster must have health green

- The target index must not exist

- The number of primary shards in the target index must be a factor of the number of primary shards in the source index.

- The source index must have more primary shards than the target index.

- The index must not contain more than 2,147,483,519 documents in total across all shards that will be shrunk into a single shard on the target index as this is the maximum number of docs that can fit into a single shard.

- The node handling the shrink process must have sufficient free disk space to accommodate a second copy of the existing index.

The task will try to meet these conditions. If it is unable to meet them all, it will not perform a shrink operation.

Settings required:

- Action Name

- Schedule Cron Pattern - it sets when the task is to be executed, to decode cron format use the online tool: https://crontab.guru/,

- Number of primary shards in the target index - it sets the number of shared for the target index,

- Pattern filter kind - it sets the index filtertype for the task,

- Pattern filter value - it sets the value for the index filter,

- Index age - it sets the index age for the task.

Optional settings:

- Ignore Empty List

- Continue if exception

- Delete source index after operation

- Closed indices filter

- Empty indices filter

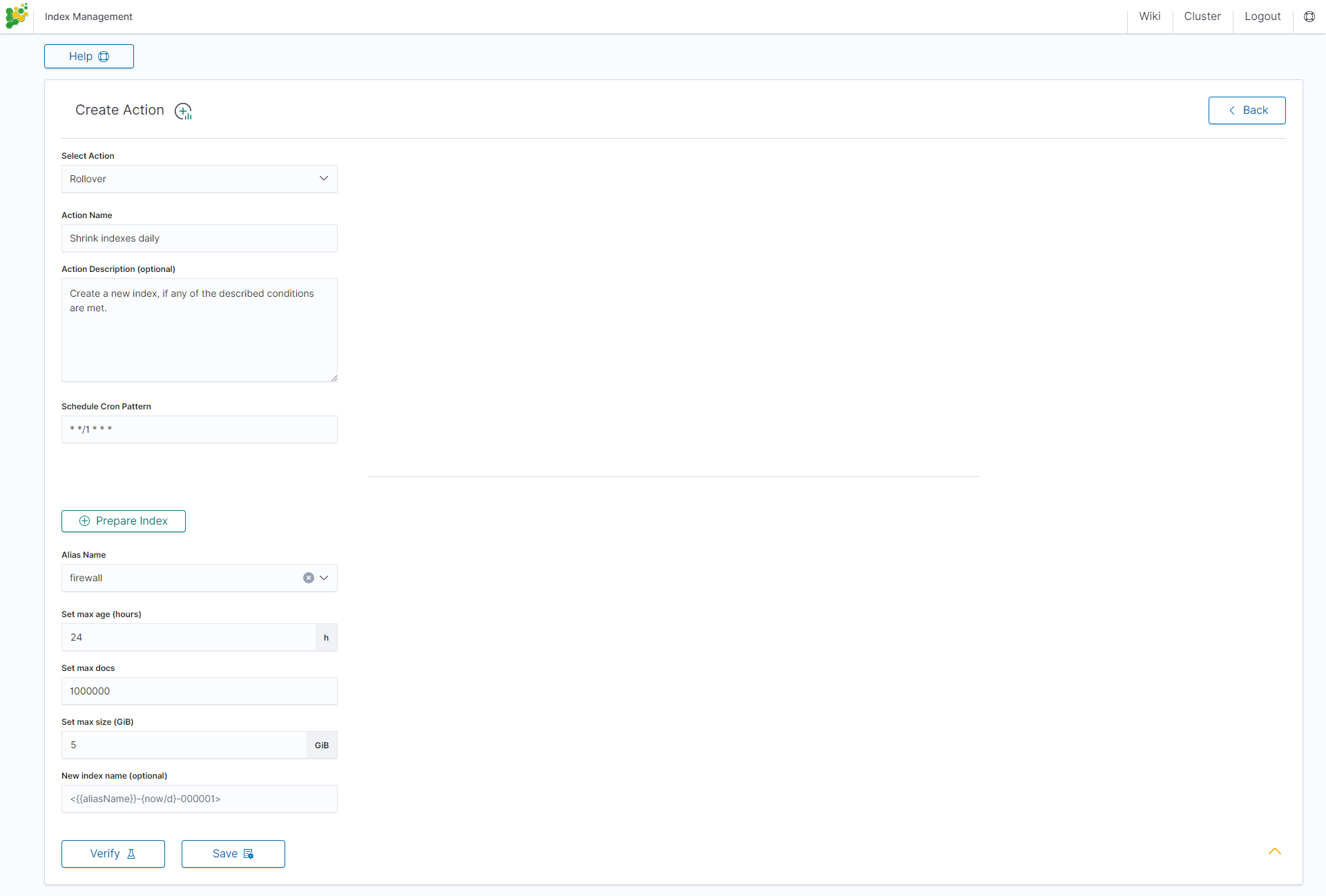

Rollover action¶

This action uses the Elasticsearch Rollover API to create a new index if any of the described conditions are met.

Settings required:

- Action Name

- Schedule Cron Pattern - it sets when the task is to be executed, to decode cron format use the online tool: https://crontab.guru/,

- Alias Name - it sets an alias for the index,

- Set max age (hours) - it sets an age for the index after then index will rollover,

- Set max docs - it sets a number of documents for the index after which the index will rollover,

- Set max size (GiB) - it sets index size in GB after which the index will rollover.

Optional settings:

- New index name (optional)

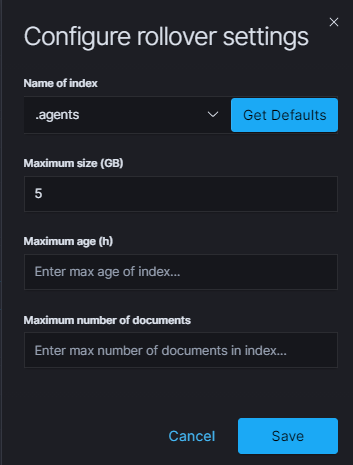

Index rollover¶

Using the rollover function, you can make changes to removing documents from the audit, .agents, and alert* indexes.

You can configure the rollover by going to the Config module, then clicking the Settings tab, going to the Index rollover settings section, and clicking the Configure button:

You can set the following retention parameters for the above indexes:

- Maximum size (GB);

- Maximum age (h);

- Maximum number of documents.

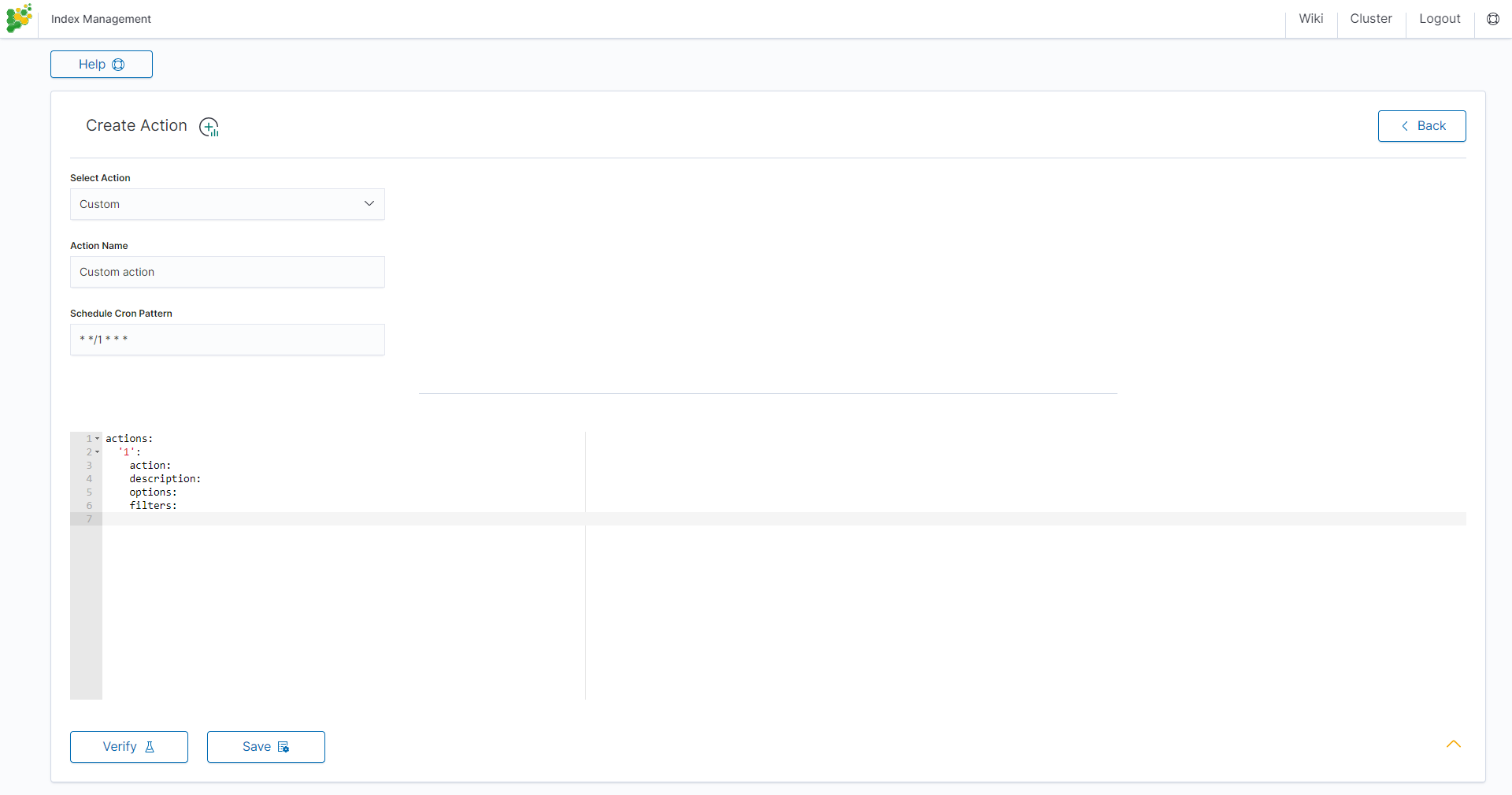

Custom action¶

Additionally, the module allows you to define your own actions in line with the Curator documentation: https://www.elastic.co/guide/en/elasticsearch/client/curator/current/actions.html

To create a Custom action, select Custom from Select Action, enter a name in the Action Name field, and set the schedule in the Schedule Cron Pattern field. In the edit field, enter the definition of a custom action:

Custom Action examples:

Open index¶

actions:

1:

action: open

description: >-

Open indices older than 30 days but younger than 60 days (based on index

name), for logstash- prefixed indices.

options:

timeout_override:

continue_if_exception: False

disable_action: True

filters:

- filtertype: pattern

kind: prefix

value: logstash-

exclude:

- filtertype: age

source: name

direction: older

timestring: '%Y.%m.%d'

unit: days

unit_count: 30

exclude:

- filtertype: age

source: name

direction: younger

timestring: '%Y.%m.%d'

unit: days

unit_count: 60

exclude:

Replica reduce¶

actions:

1:

action: replicas

description: >-

Reduce the replica count to 0 for logstash- prefixed indices older than

10 days (based on index creation_date)

options:

count: 0

wait_for_completion: False

timeout_override:

continue_if_exception: False

disable_action: True

filters:

- filtertype: pattern

kind: prefix

value: logstash-

exclude:

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 10

exclude:

Index allocation¶

actions:

1:

action: allocation

description: >-

Apply shard allocation routing to 'require' 'tag=cold' for hot/cold node

setup for logstash- indices older than 3 days, based on index_creation

date

options:

key: tag

value: cold

allocation_type: require

disable_action: True

filters:

- filtertype: pattern

kind: prefix

value: logstash-

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 3

Cluster routing¶

actions:

1:

action: cluster_routing

description: >-

Disable shard routing for the entire cluster.

options:

routing_type: allocation

value: none

setting: enable

wait_for_completion: True

disable_action: True

2:

action: (any other action details go here)

...

3:

action: cluster_routing

description: >-

Re-enable shard routing for the entire cluster.

options:

routing_type: allocation

value: all

setting: enable

wait_for_completion: True

disable_action: True

Preinstalled actions¶

Close-Daily¶

This action closes the selected indices older than 93 days and optionally deletes associated aliases beforehand. For example, if it is today 21 December this action will close or optionally delete every index older than 30 September of the same year, action starts every day at 01:00 AM.

Action type: CLOSE Action name: Close-Daily Action Description (optional): Close daily indices older than 90 days Schedule Cron Pattern : 0 1 * * * Delete Aliases: enabled Skip Flush: disabled Ignore Empty List: enabled Ignore Sync Failures: enabled Pattern filter kind: Timestring Pattern filter value: %Y.%m$ Index age: 93 days Empty indices filter: disable

Close-Monthly¶

This action closes the selected indices older than 93 days (3 months)and optionally deletes associated aliases beforehand. If it today is 21 December, this action will close or optionally delete every index older than Oktober the same year, the action starts every day at 01:00 AM.

Action type: CLOSE Action name: Close-Daily Action Description (optional): Close daily indices older than 93 days Schedule Cron Pattern: 0 1 * * * Delete Aliases: enabled Skip Flush: disabled Ignore Empty List: enabled Ignore Sync Failures: enabled Pattern filter kind: Timestring Pattern filter value: %Y.%m$ Index age: 93 days Empty indices filter: disable

Disable-Refresh-Older-Than-Days¶

This action disables the daily refresh of indices older than 2 days. the action is performed daily at 01:00.

Action type: CUSTOM Action name: Disable-Refresh-Older-Than-Days Schedule Cron Pattern: 0 1 * * *

YAML:

actions:

'1':

action: index_settings

description: Disable refresh for older daily indices

options:

index_settings:

index:

refresh_interval: -1

ignore_unavailable: False

ignore_empty_list: true

preserve_existing: False

filters:

- filtertype: pattern

kind: timestring

value: '%Y.%m.%d$'

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 2

Disable-Refresh-Older-Than-Month¶

This action forces the daily merge of indices older than one month. The action is performed daily at 01:00.

Action type: CUSTOM Action name: Disable-Refresh-Older-Than-Month Schedule Cron Pattern: 0 1 * * *

YAML:

actions:

'1':

action: index_settings

description: Disable refresh for older monthly indices

options:

index_settings:

index:

refresh_interval: -1

ignore_unavailable: False

ignore_empty_list: true

preserve_existing: False

filters:

- filtertype: pattern

kind: timestring

value: '%Y.%m$'

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 32

Force-Merge-Older-Than-Days¶

This action forces the daily merge of indices older than two days. The action is performed daily at 01:00.

Action type: CUSTOM Action name: Force-Merge-Older-Than-Days Schedule Cron Pattern: 0 1 * * *

YAML:

actions:

'1':

action: forcemerge

description: Force merge on older daily indices

options:

max_num_segments: 1

ignore_empty_list: true

continue_if_exception: false

delay: 60

filters:

- filtertype: pattern

kind: timestring

value: '%Y.%m.%d$'

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 2

- filtertype: forcemerged

max_num_segments: 1

exclude: True

Force-Merge-Older-Than-Months¶

This action forces the daily merge of indices older than one month. The action is performed daily at 01:00.

Action type: CUSTOM Action name: Force-Merge-Older-Than-Months Schedule Cron Pattern: 0 1 * * *

YAML:

actions:

'1':

action: forcemerge

description: Force merge on older monthly indices

options:

max_num_segments: 1

ignore_empty_list: true

continue_if_exception: false

delay: 60

filters:

- filtertype: pattern

kind: timestring

value: '%Y.%m$'

- filtertype: age

source: creation_date

direction: older

unit: days

unit_count: 32

- filtertype: forcemerged

max_num_segments: 1

exclude: True

Logtrail-default-delete¶

This action leaves only two last indices from each logtrail rollover index ( allows for up to 10GB of data). The action is performed daily at 03:30.

Action type: CUSTOM Action name: Logtrail-default-delete Schedule Cron Pattern: 30 3 * * *

YAML:

actions:

'1':

action: delete_indices

description: >-

Leave only two last indices from each logtrail rollover index - allows for up to

10GB data.

options:

ignore_empty_list: true

continue_if_exception: true

filters:

- filtertype: count

count: 2

pattern: '^logtrail-(.*?)-\d{4}.\d{2}.\d{2}-\d+$'

reverse: true

Logtrail-default-rollover¶

This action rollover default Logtrail indices. The action is performed every 5 minutes.

Action type: CUSTOM Action name: Logtrail-default-rollover Schedule Cron Pattern: 5 * * * *

YAML:

actions:

'1':

action: rollover

description: >-

This action works on default logtrail indices. It is recommended to enable

it.

options:

name: logtrail-alert

conditions:

max_size: 5GB

continue_if_exception: true

allow_ilm_indices: true

'2':

action: rollover

description: >-

This action works on default logtrail indices. It is recommended to enable

it.

options:

name: logtrail-elasticsearch

conditions:

max_size: 5GB

continue_if_exception: true

allow_ilm_indices: true

'3':

action: rollover

description: >-

This action works on default logtrail indices. It is recommended to enable

it.

options:

name: logtrail-kibana

conditions:

max_size: 5GB

continue_if_exception: true

allow_ilm_indices: true

'4':

action: rollover

description: >-

This action works on default logtrail indices. It is recommended to enable

it.

options:

name: logtrail-logstash

conditions:

max_size: 5GB

continue_if_exception: true

allow_ilm_indices: true

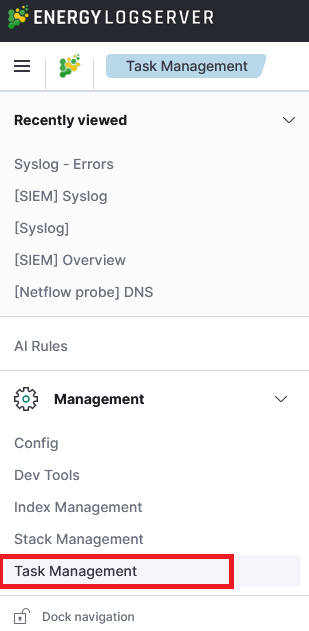

Task Management¶

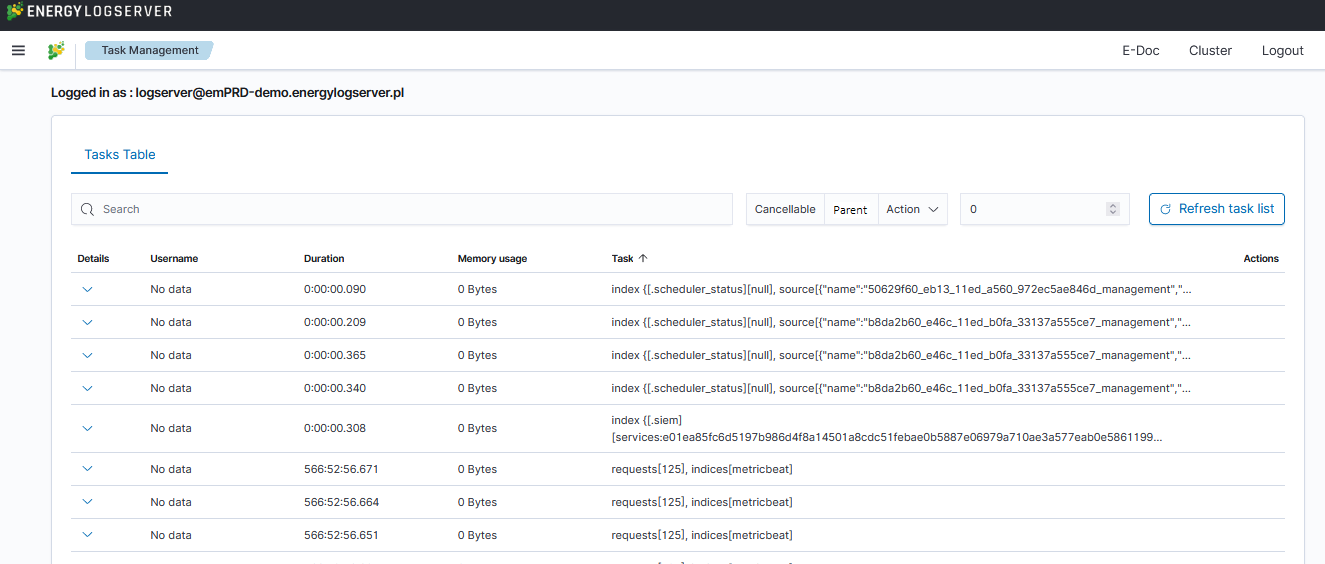

Energy Logserver provides the ability to manage tasks. Table with running tasks is located in Task Management section.

Long Running Query¶

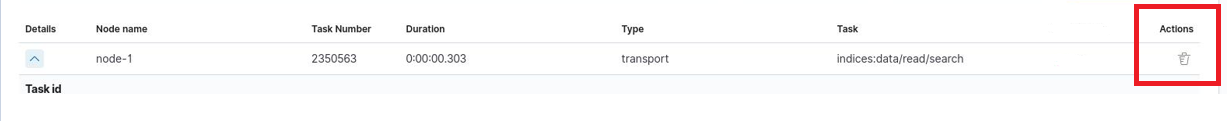

If query is cancellable trash icon is available in Actions column. (Note: Not every query can be canceled)

Searchbar and filtering¶

It is possible to search for running task using searchbar. There are provided four types of filtering:

Cancellable- It filters only cancellable tasks.Parent- This option filters only parent tasks.Action- It filters by action ( Sample action:indices:data/write/bulk).Running time in seconds- When checked, only shows tasks that take longer than a specified number of seconds.

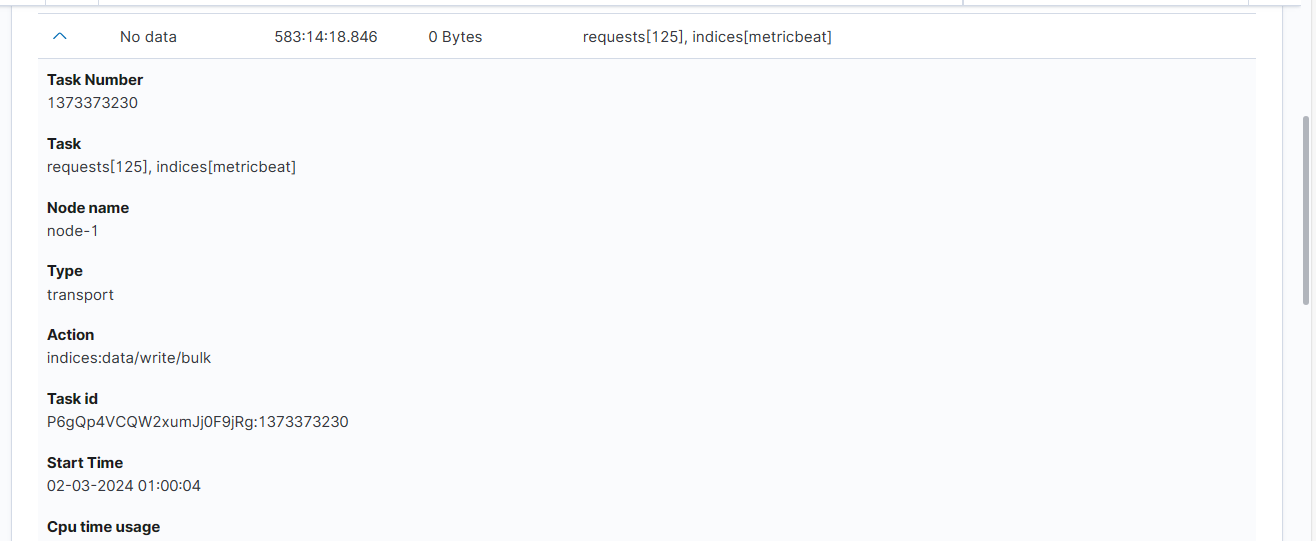

Task Details¶

Expanding the details column provides more information about a given task. (Note: Depending on the type of task, different information may be provided.)

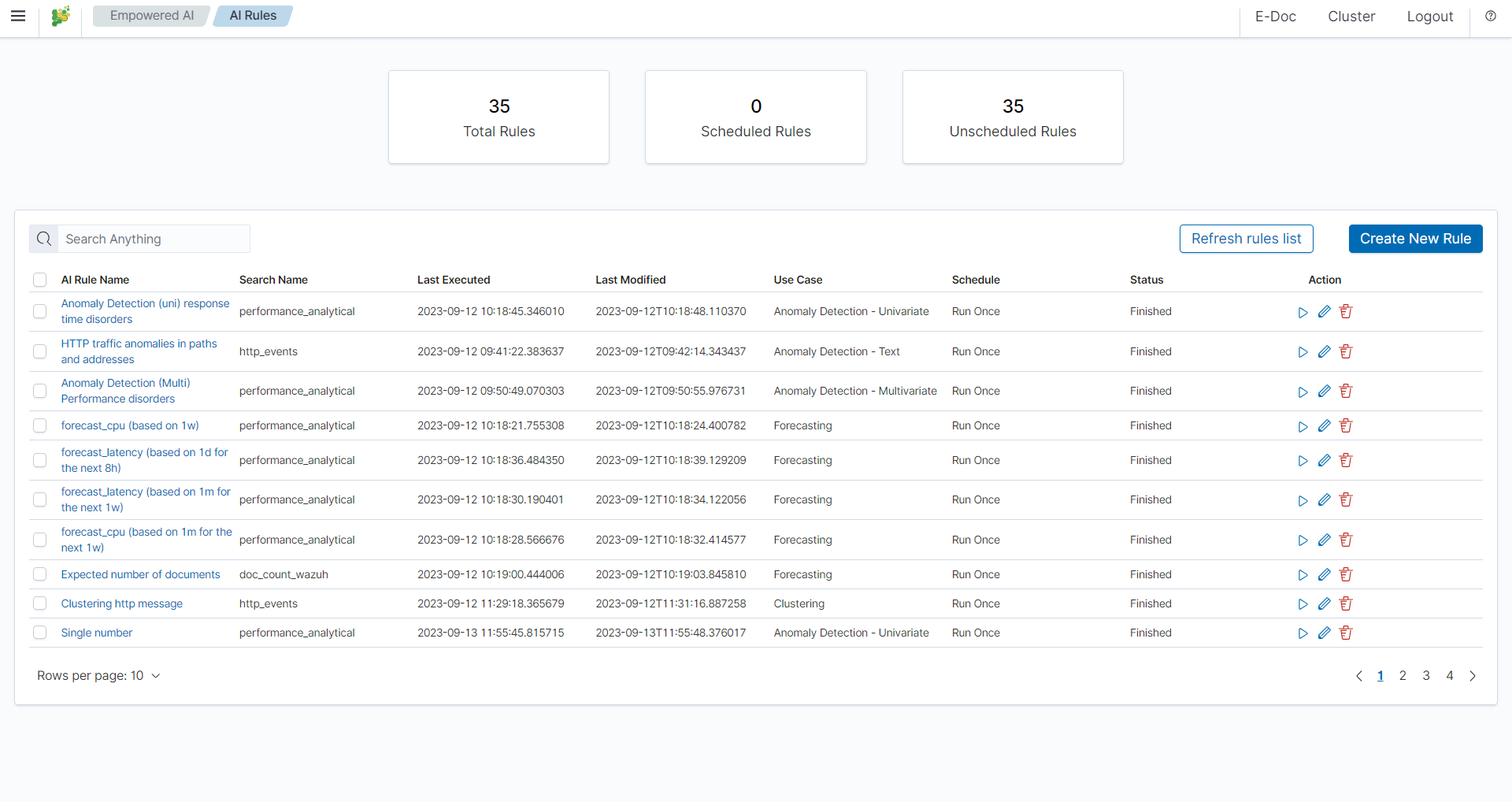

Empowered AI¶

Empowered AI is a module of ITRS Log Analytics containing mathematical algorithms for data science.

It is licenced extention for SIEM deplyment. Main purpose of the Empowered AI is to help SOC teams see that data which are difficult to detect with regular approach. Advance mathematical sorting, grouping, forecastig enriched with statistics create a new outlook of security posture.

Empowered AI is an ongoing project. Our team of mathematicians, data scientists and security analysts continue their work addressing more and more new usecases.

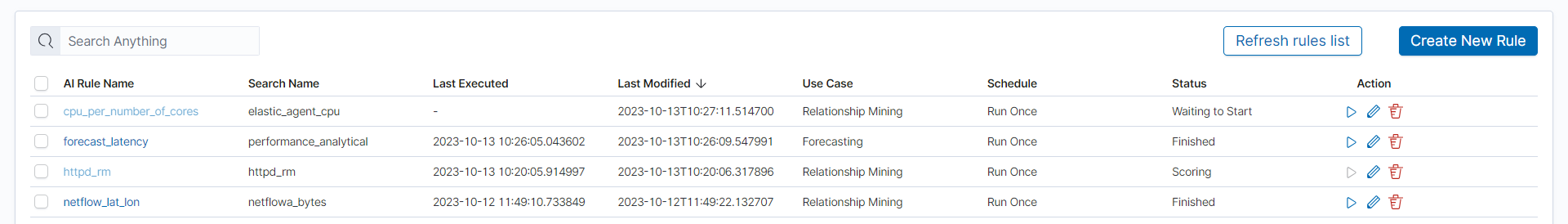

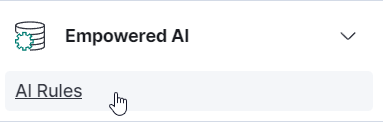

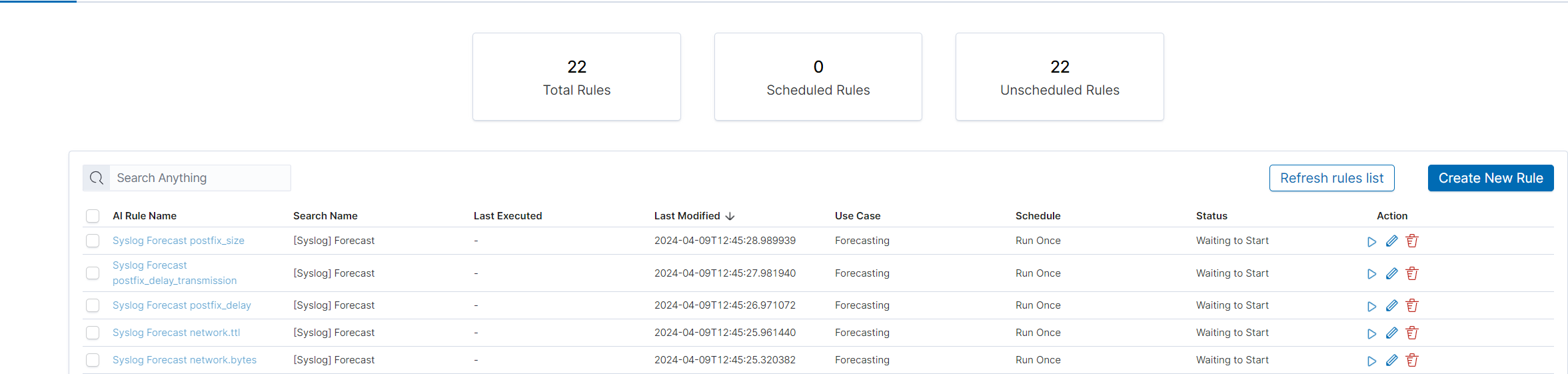

AI Rules¶

In the Empowered AI section you will find a summary of the existing rules. At the top, you’ll find the total number of rules and the number of scheduled and unscheduled rules. Here is the search field and buttons Refresh rules list and Create New Rule Below is the table. It contains AI Rule Name, Search/Index Name - data source, Last Executed - date, Last Modified - date, selected Use Case, Schedule - scheduling frequency, Status and Action icons.

Status¶

The rule has one of the following statuses:

- Waiting to start -

Run oncerule starts by clicking symbol play. - Scheduled - the scheduled rule starts automatically.

- Scoring

- Building

- Finished - click on the

AI Rule Nameto get the forecast results previewAI Rules>Performance. - Error - check error details in the results preview

Performance>AI Rule Configuration>Exceptions.

Actions¶

Icons of actions:

- Play – run or rerun the rule,

- Stop – unschedule periodic rule, after this action rule type changes to Run Once,

- Pencil - edit the rule’s configuration,

- Bin – delete the rule.

Prepare your data set¶

Save your Discover search so you can use it the same way as in visualizations and dashboards.

Create New Rule¶

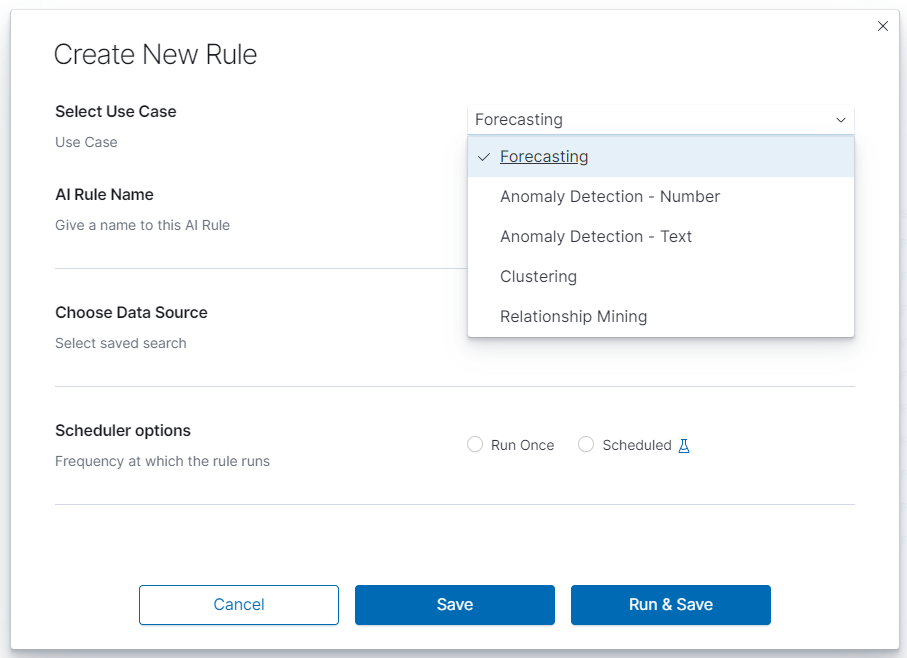

To create a rule choose ``Empowered AI>AI Rules>Create New Rule. In pop-up form Select Use Case. The Empowered AI module contains AI models for various use cases: Forecasting, Anomaly Detection - Number, Anomaly Detection - Text, Clustering and Relationship Mining.

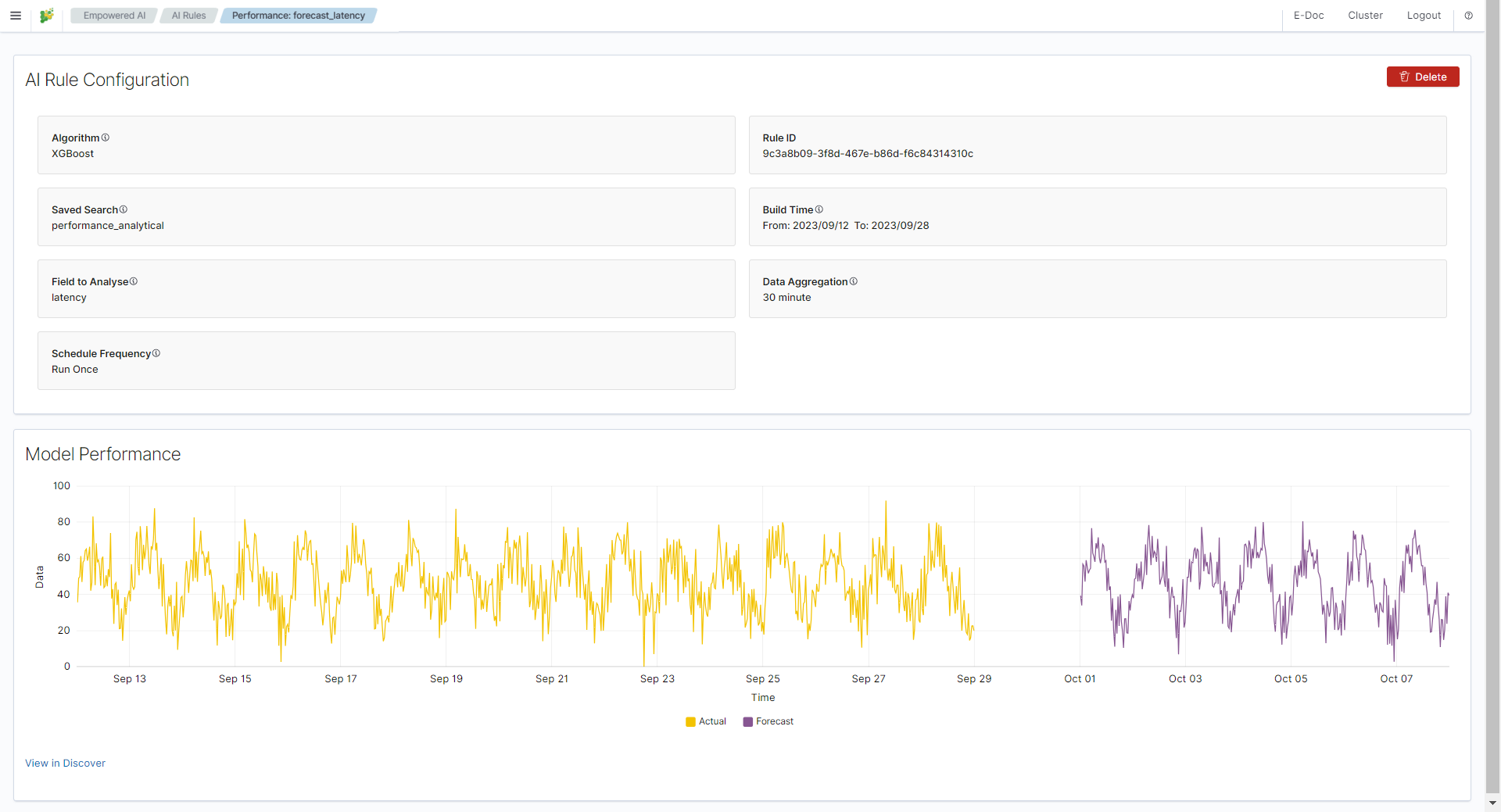

Performance¶

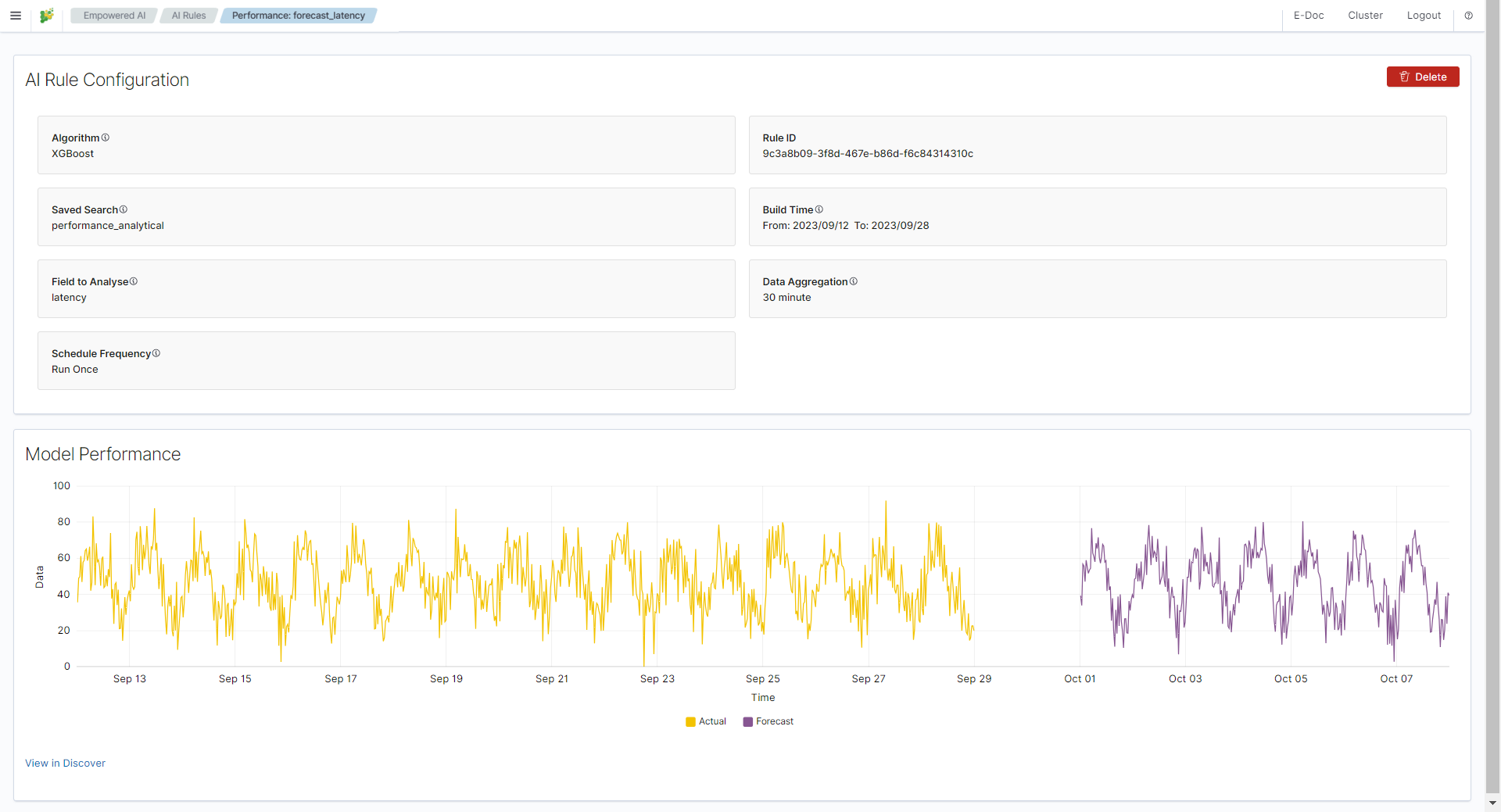

To see the result of the finished rule click on the link in the column AI Rule Name. Here we have AI Rule Configuration and Model Performance.

In AI Rule Configuration you will find detailed configuration parameters and information about the training data set. Exception details will also appear here in case of misconfiguration. Model Performance is the presentation of data and analysis results. Depending on the use case, these are charts, relationship visualizations, and tables.

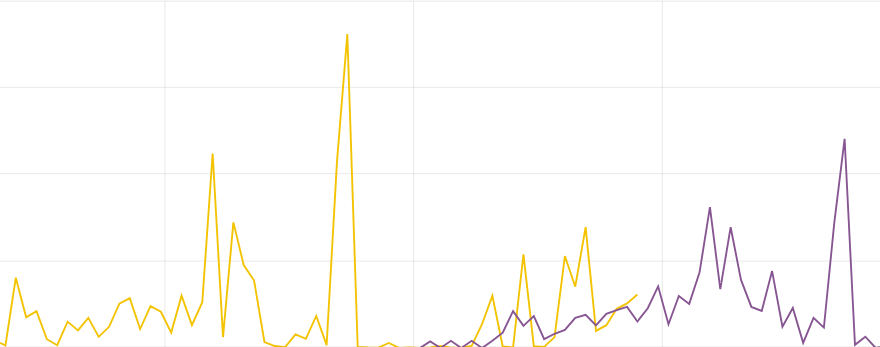

Forecasting¶

Predicting future conditions is a crucial aspect of decision-making in any organization. To anticipate upcoming events, historical data analysis is indispensable. ITRS Log Analytics Empowered AI Module suggests utilizing the XGBoost algorithm, also known as eXtreme Gradient Boosting, for forecasting your environmental variables in the near future.

XGBoost is an advanced machine learning algorithm that excels at handling datasets with multiple variables. This algorithm can accurately model complex relationships within data, enabling precise predictions of future events. XGBoost is a popular choice in the field of Data Science, particularly for solving regression and classification problems, thanks to its efficiency and effectiveness.

Create a rule¶

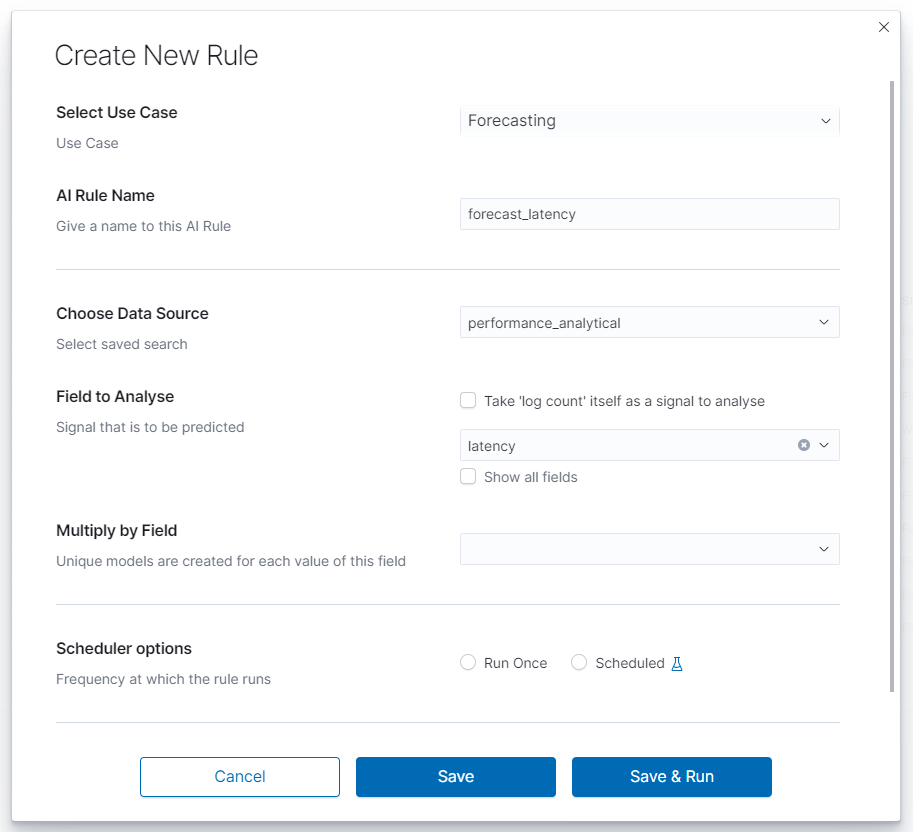

To create a rule choose Empowered AI>AI Rules>Create New Rule. In pop-up form Select Use Case>Forecasting and insert the rule’s name.

Choose data¶

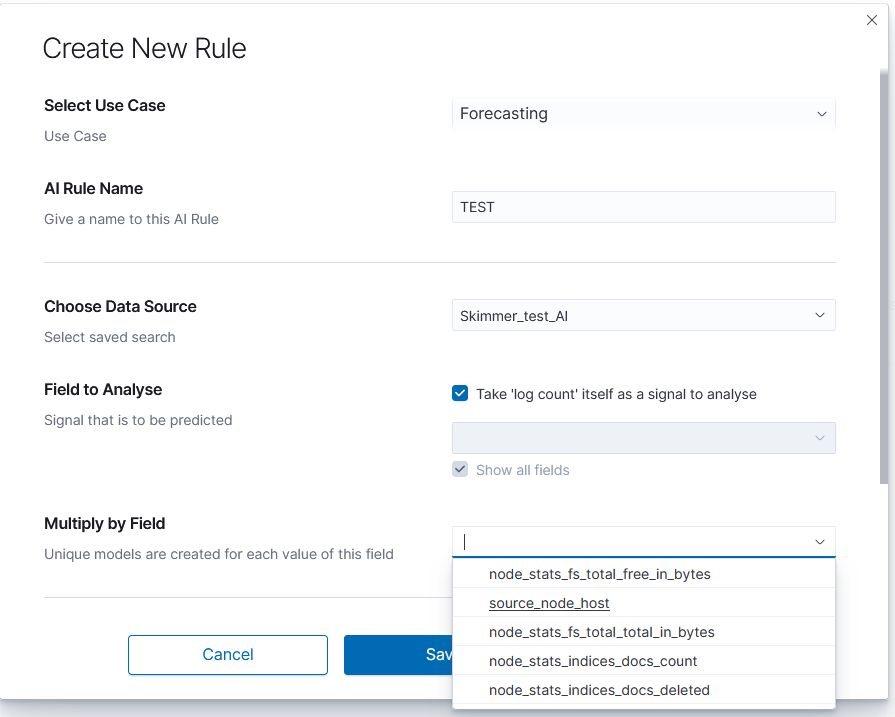

Choose Data Source is a drop-down list with your saved data, choose one of them. After loading the source, we can select Field to Analyse - the id-field to be predicted. The system default loads headers of the appropriate data type for the specified use case. You can also choose the checkbox displaying all the fields. The proper mapping is necessary for appropriate header recognition. As numerical data, you can also use the number of documents instead of field values. To do that, choose the checkbox. Multiply by Field allows you to obtain separate forecasts e.g., for several (or all) hosts, users and domains, in one rule.

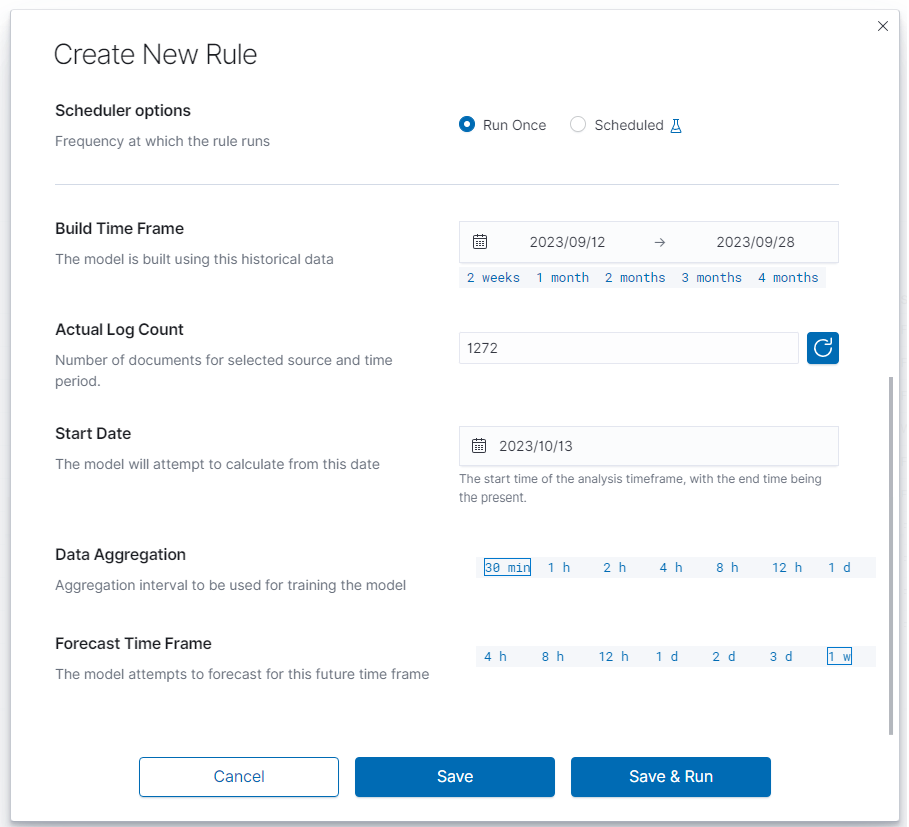

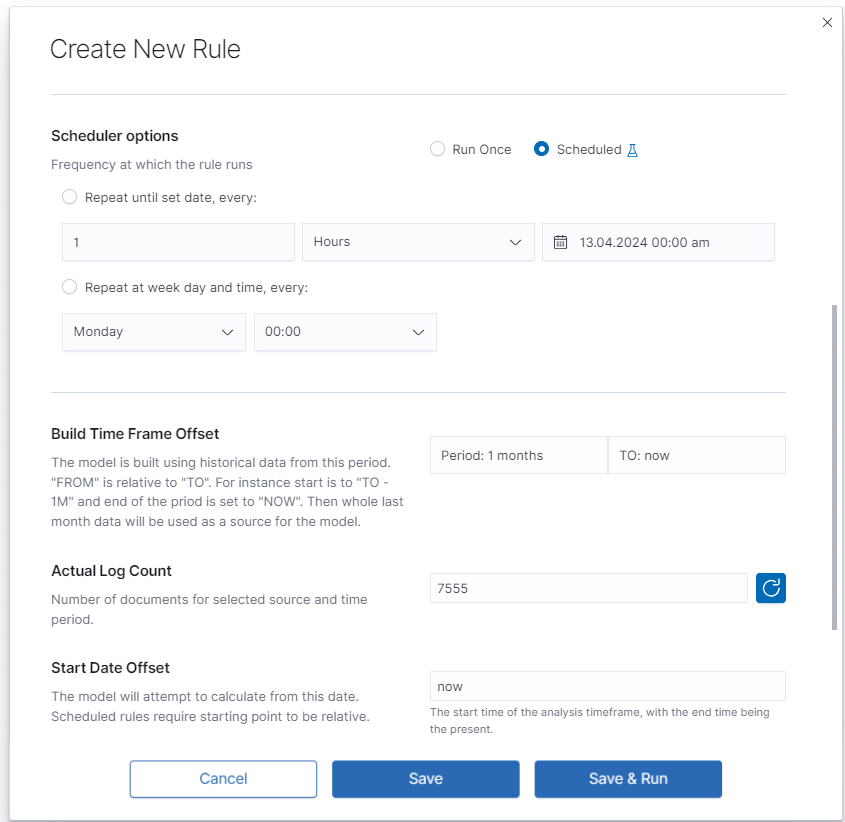

Scheduler options¶

In this field, you can choose the frequency at which the rule runs Run Once or Scheduled.

Run Once¶

Range of dates you choose from the interactive calendars, click on icons.

Build Time Frame is a piece of data used to create and train the model. More training data allows the algorithm to obtain more accurate signal patterns and trend information. If there is too little and incomplete data, the forecast may not be accurate.

Start Date is the starting point of predictions.

Scheduled¶

Scheduled rules require dates to be a relative distance from now. You choose number and time unit: minutes, hours, days, weeks, months. Build Time Frame Offset is historical data used to build the model. Period: time selection is time distance before end point TO: now - time selection of the data set. `Start Date Offset is the starting point of forecast. It must be set inside the build time frame offset.

Data Aggregation and Forecast Time Frame¶

The data set is divided into small intervals specified in the Data Aggregation> 30 min | 1 h | 2 h | 4 h | 8 h | 12 h | 1 d parameter. The algorithm uses this unit as the training and reporting frequency. Forecast Time Frame> 4 h | 8 h | 12 h | 1 d | 2 d | 3 d | 1 w is the period of the requested forecast. A shorter time gives a better estimation. Value of forecast time frame must be a multiplicity of time data aggregation.

Launch¶

You can find the newly created rule in the table with the Waiting to start status. Click Play and wait for a moment. Refresh the rules list. If it has the status Finished, click on the rule’s name to see the results.

Results¶

Difference Multi Pattern - alert rule¶

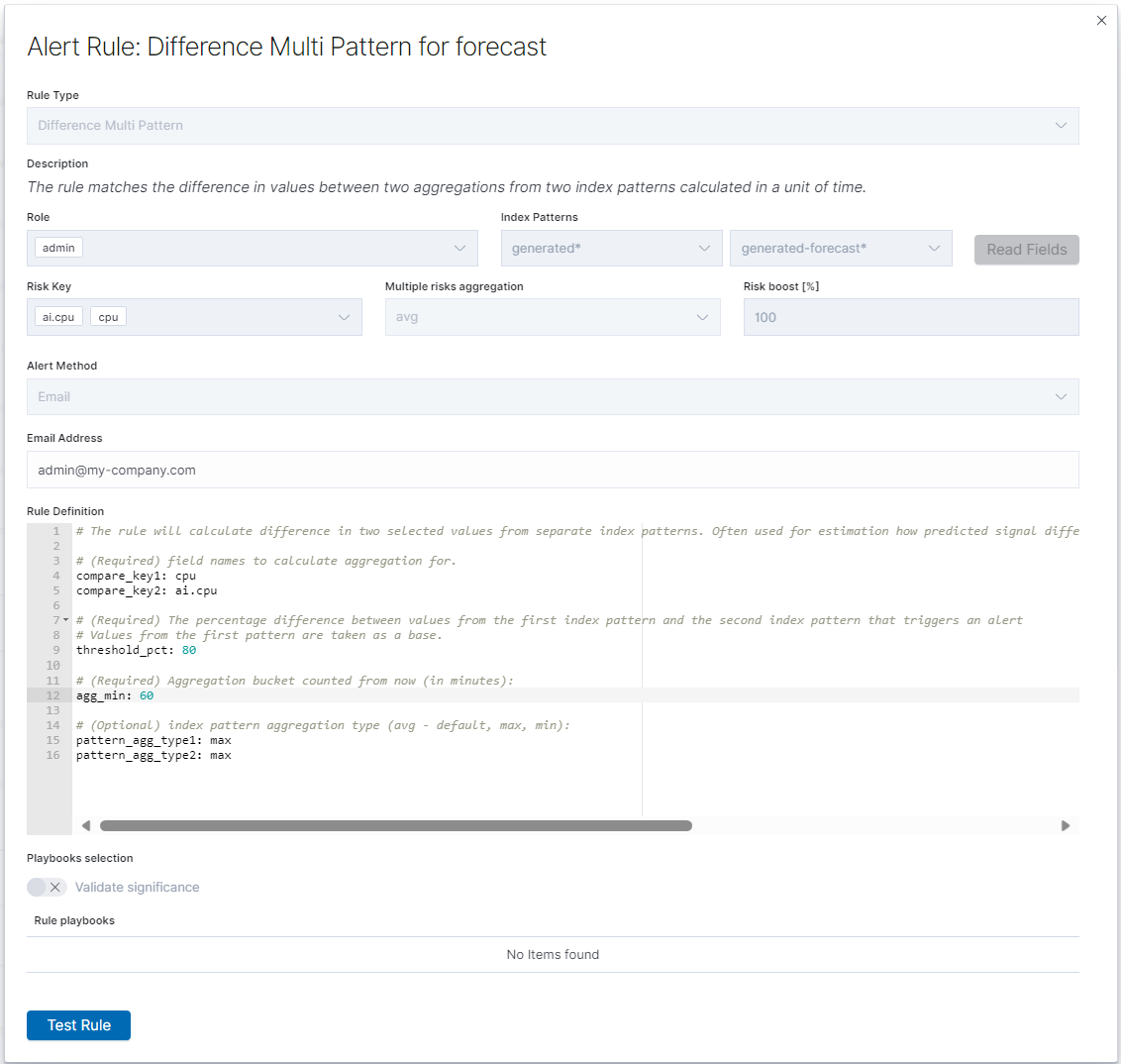

Users of SIEM Plan can leverage Empowered AI in alert rules. For forecasting, a special rule called Multi Pattern Difference has been created. In the form Create Alert Rule, we compare two patterns, index_name-* and index_name-forecast-*, along with the fields used in the forecast, field_name and ai.field_name. It’s crucial to note that the agg_min value should not be lower than the aggregation window used in the forecast; it must be a multiple thereof.

Fill in the remaining fields following the convention used for other alert rules.

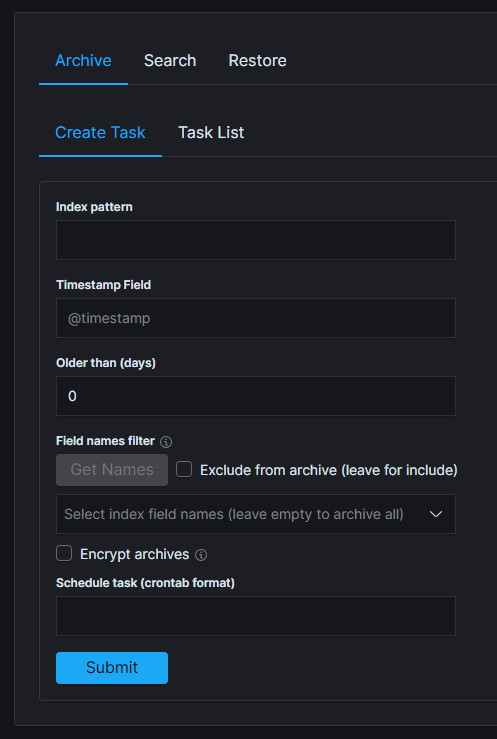

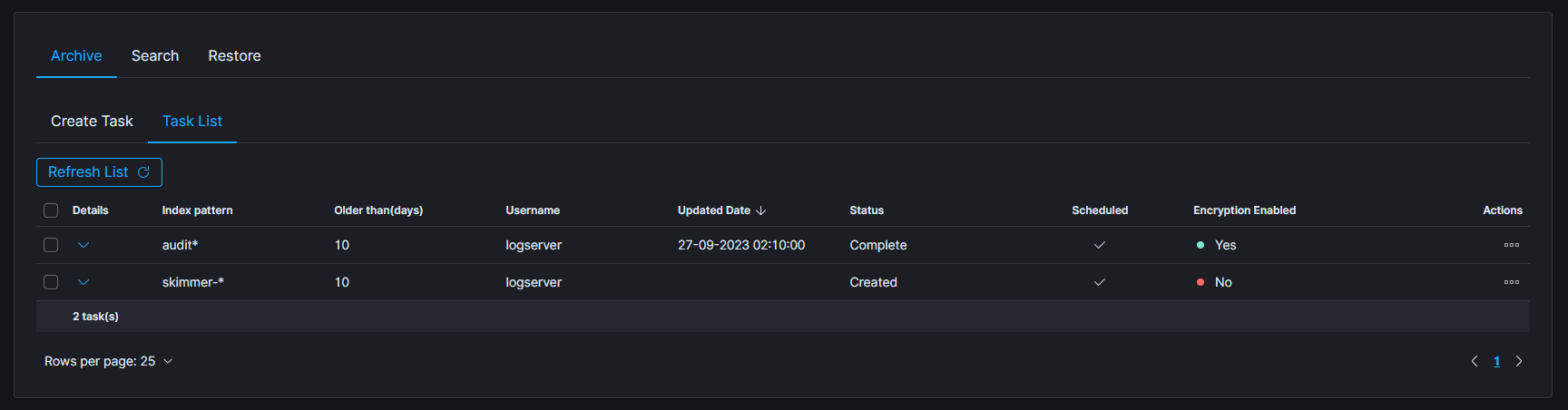

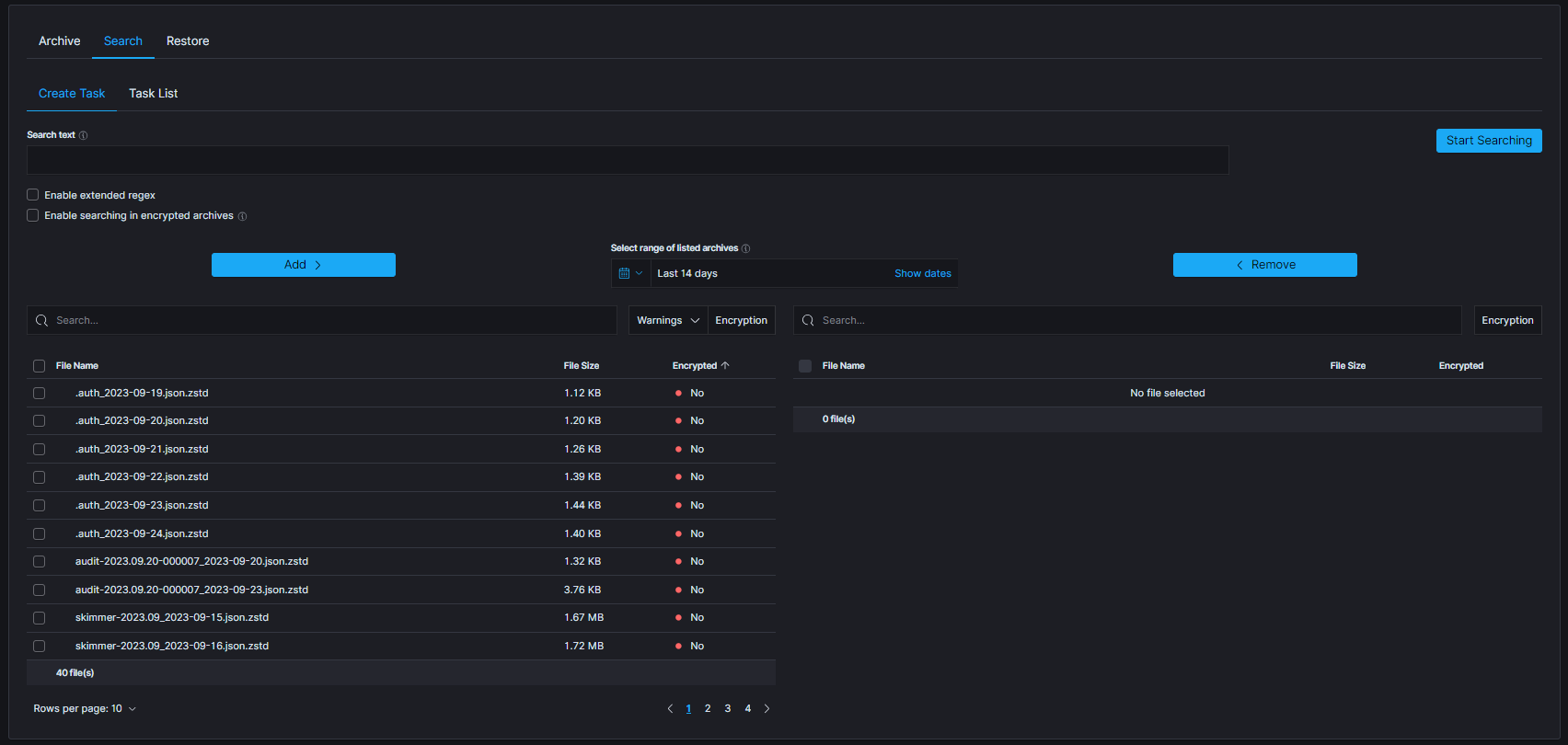

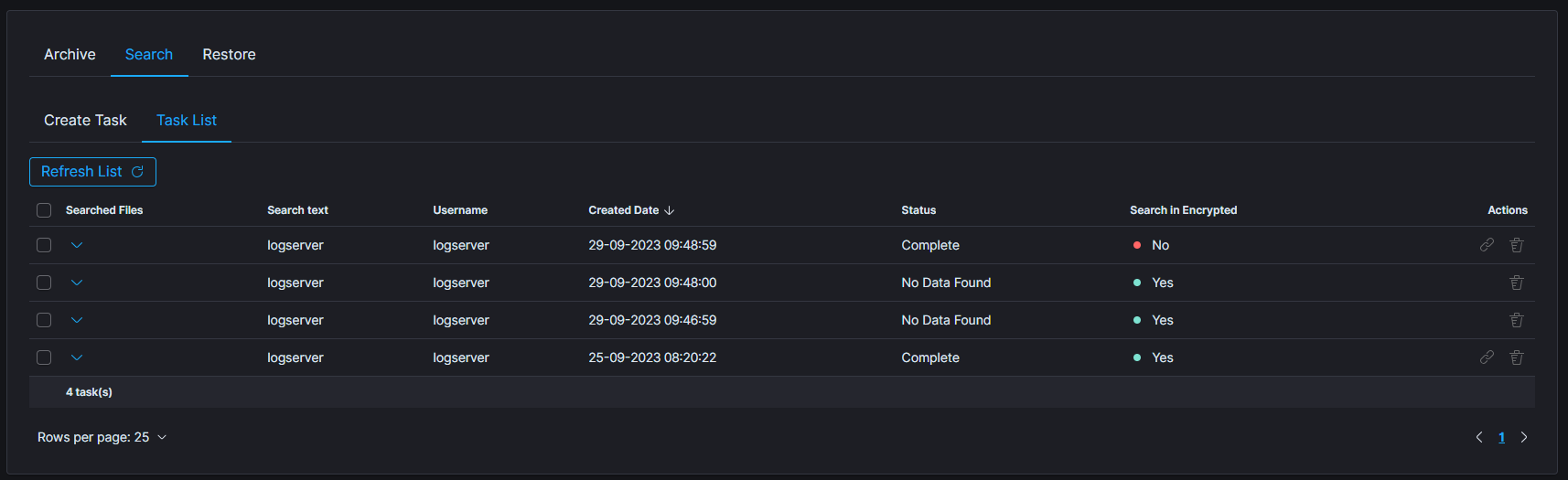

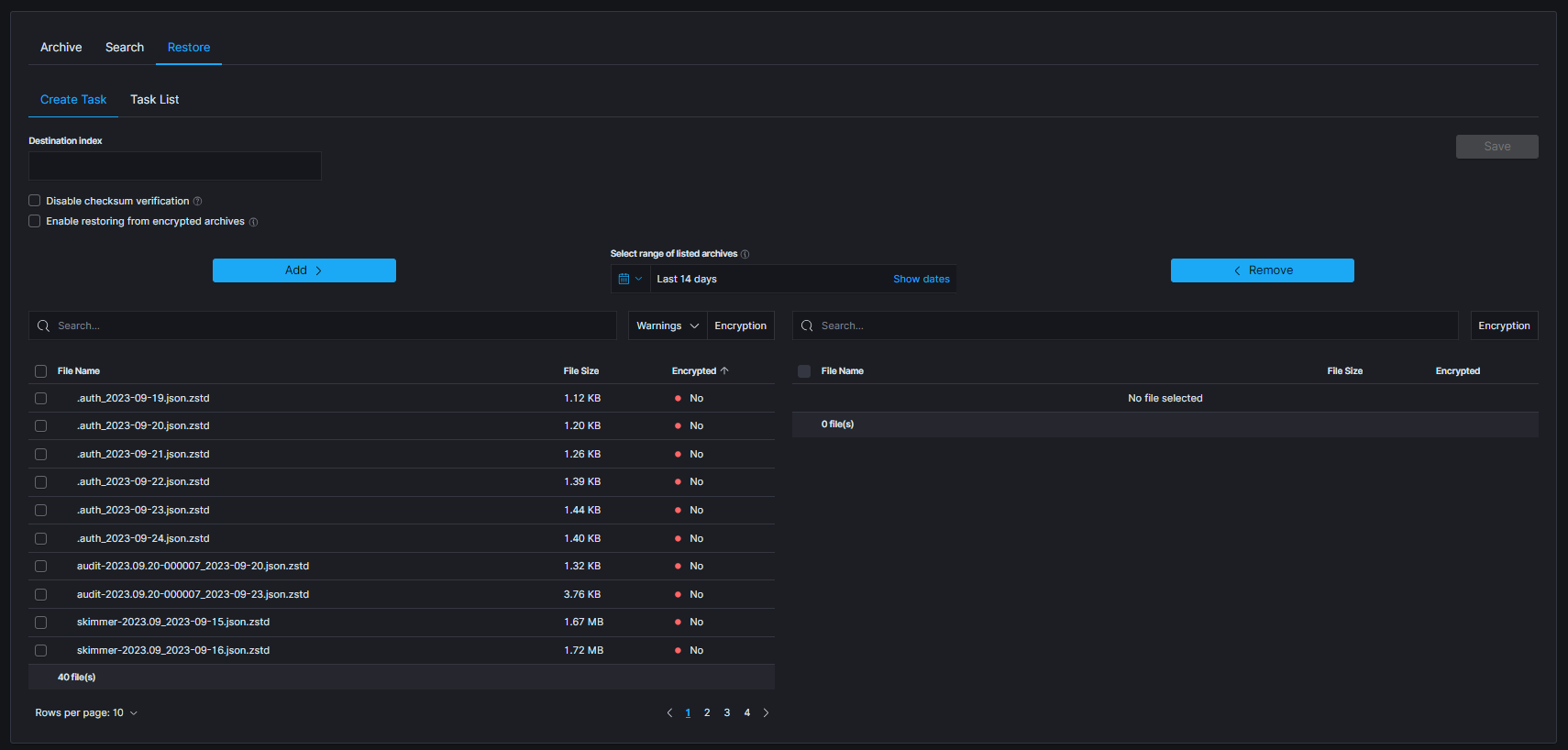

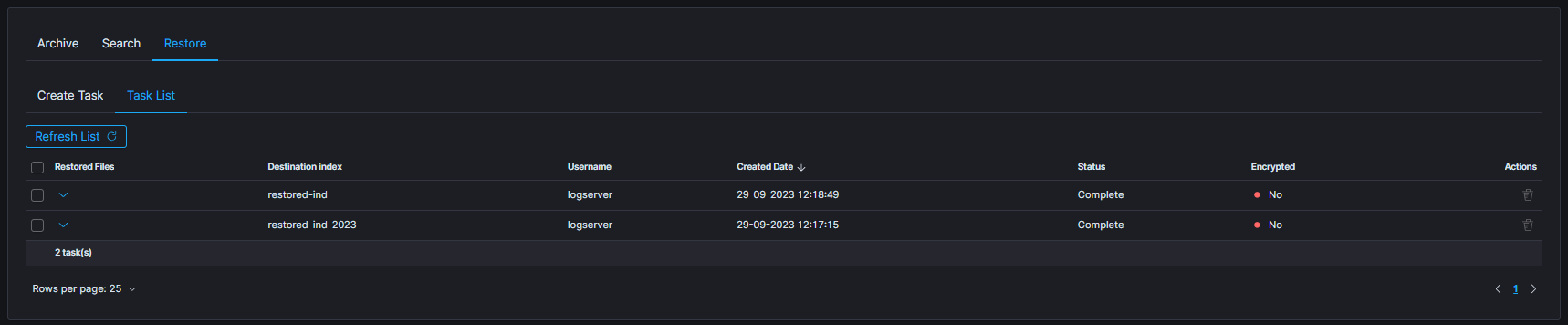

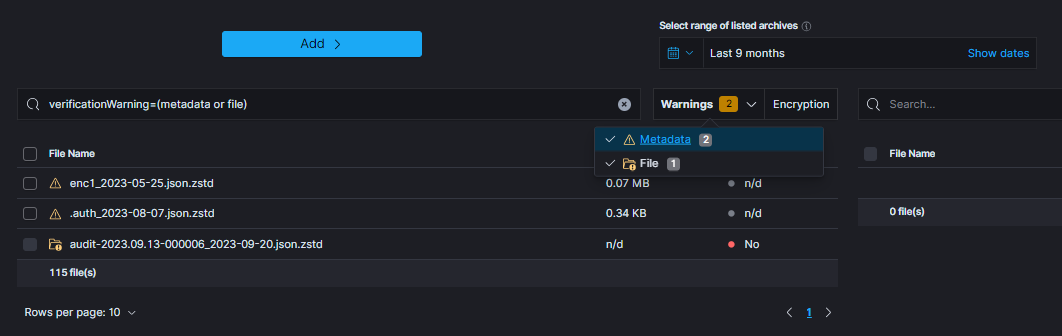

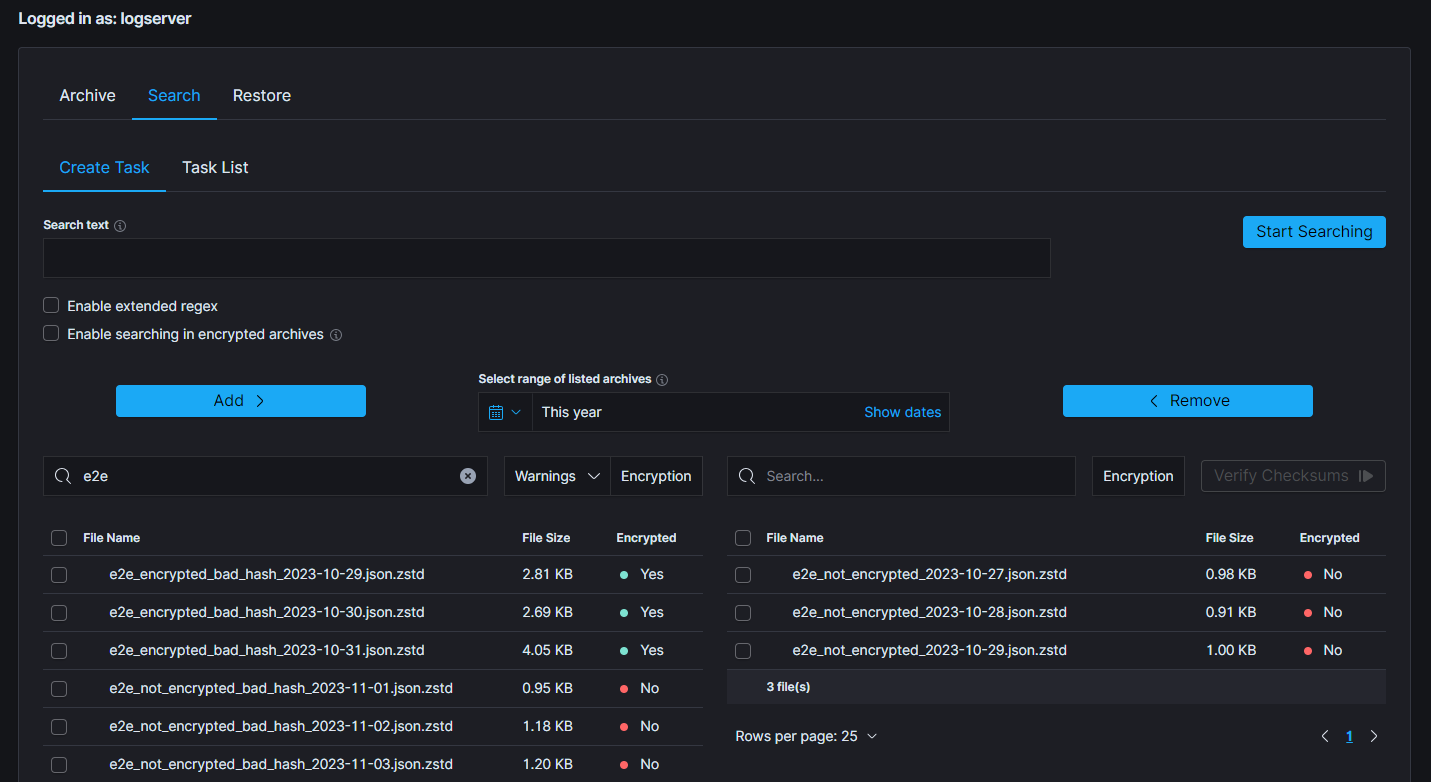

Default AI Forecasting Rules¶